When the New Orleans Times-Picayune announced it would cut back its printed editions to three days a week and shift to a digitally centered model based on free content, the response was immediate, loud, and, with few exceptions, hostile.

Some complained the paper and its parent, Advance Publications, were abandoning the large segment of their readership—mostly poorer and less well-educated—that relied on the printed product. Others lamented the staff cuts that accompanied the changes and the ham-fisted manner in which they were carried out. (For all the background, read Ryan Chittum’s deep dive from last spring.) But a unifying concern, underlying all the others, was for the quality of the news itself. The “digital-first” model—dependent on a high volume of new posts to drive ad-generating Internet traffic—would, many believed, result in a shoddier product.

Since the 2012 changeover, discussion about the Times-Picayune, now known as Nola.com, has been one long argument. Is the news content now worse: softer, more sensational, more trivial, less well-sourced? Or is it, as defenders argue, just different: faster, more immediate, livelier, more conversational, more engaging? Or what?

Now a class of undergraduates led by a Tulane communications professor has weighed in with a study that seeks to settle the debate. Professor Vicki Mayer and the students of her Media Analysis class last fall completed what’s known in academic circles as a content analysis designed to compare the quality of the printed Times-Picayune of 2011 with the newspaper of 2013 as well as the allied digital platforms—website, tablet, and smartphone—now given primary emphasis and designed ultimately to supplant the paper altogether.

The results, while not the last word, are nonetheless troubling. The study provides solid support for the idea that digital platforms—even from the same news organization—require, or at least incentivize, softer, less-well-sourced, poorer-quality news.

Nola, for its part, says the study’s methodology is hopelessly flawed and in no way accepts its conclusions, as we’ll see.

The class studied a month’s worth of news coverage three days a week—Sunday, Wednesday, and Friday—for four consecutive weeks in October 2011 and October 2013, about a year before and after the digital overhaul. The study catalogued staff-bylined stories appearing on A1 and B1 in print in both years and took screenshots of banner stories and those in the curated, upper part of the homepage of the website (which can contain three to 10 stories, the study says), as well as those on the homepages of the mobile apps, tablet and phone. The screenshots were taken between midnight and 10 a.m.

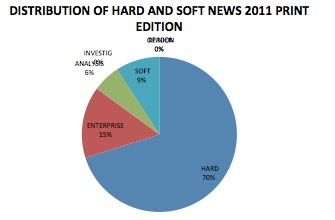

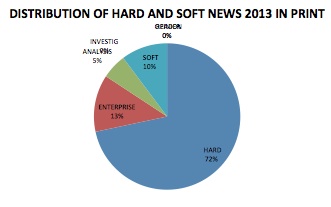

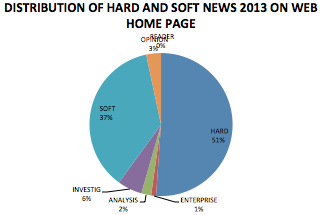

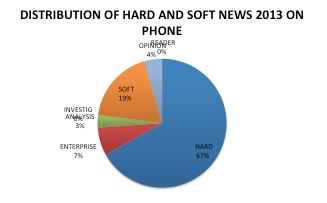

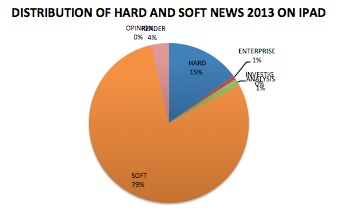

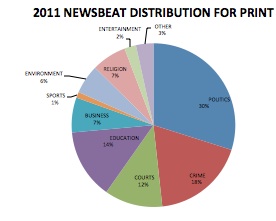

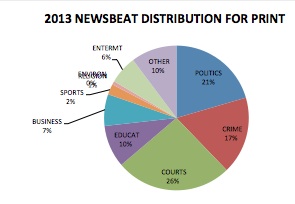

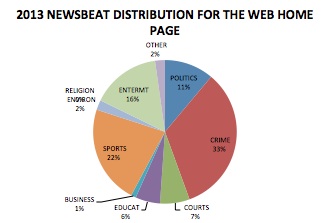

Stories were categorized in two main ways: by news beat (courts, cops, politics, sports, etc.) and by whether they represented “hard” or “soft” news. The latter is defined as:

…stories that were trivial, generally focused on entertainment and sports. In addition, we looked at the emergence of opinion columns and reader-response stories as two genres that have arisen on the homepages of digital formats. These pieces could be considered soft news, following James Fallows’ critique of opinion-based stories as easier and more speculative, rather than reporting-driven stories.
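For readers curious how a tally of this kind works, here is a minimal Python sketch of computing the share of soft stories per platform. It is an illustration only: the `SOFT_BEATS` set, the beat labels, and the sample records are hypothetical, and none of it is taken from the Tulane codebook or data.

```python
from collections import Counter

# Hypothetical labels treated as "soft" for this sketch; the study's
# actual coding scheme may draw the line differently.
SOFT_BEATS = {"sports", "entertainment", "opinion", "reader-response"}


def soft_share(stories):
    """Return {platform: fraction of its stories coded as soft}."""
    totals, soft = Counter(), Counter()
    for platform, beat in stories:
        totals[platform] += 1
        if beat in SOFT_BEATS:
            soft[platform] += 1
    return {platform: soft[platform] / totals[platform] for platform in totals}


# Made-up records for illustration only; NOT the study's data.
sample = [
    ("print", "politics"),
    ("print", "courts"),
    ("print", "sports"),
    ("print", "education"),
    ("tablet", "sports"),
    ("tablet", "entertainment"),
]

shares = soft_share(sample)
print(shares)
```

Each record pairs a platform with a beat, so the same function could be pointed at any set of coded stories, whatever the sampling window.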

Importantly, the study did not track the digital platforms over a 24-hour period, which, as we’ll see, Nola believes to be a fatal flaw. I don’t agree.

I should disclose that I consulted in the design of the project. Mayer approached me (and others) last summer asking my thoughts on the idea of such an analysis, and we talked a couple of times and exchanged emails over what categories of stories the analysis should be looking for. Mayer sent me some preliminary data in October, but I didn’t have any further participation until I got the final results last month. This is the first time the results are being published.

Since this isn’t my data, I tried to vet it, including by sending it (with Mayer’s concurrence) to Jim Amoss, Nola’s top editor, who, to his credit, offered a detailed criticism of the study’s methodology, which I’ll quote at length. I say “to his credit” because I’ve made no secret of my opposition, both in principle and for its practical results, to the free-digital model, which Advance has been rolling out across the country, to devastating effect, in my opinion.

Still, there’s my opinion, and then there’s the Tulane data, and I’ll do my best to prevent the one from coloring my view of the other. I’ll also post the whole study so everyone can decide for themselves.

Here are the main findings:

—Overall, the 2013 news operation—predictably—produces a much higher quantity of content than did its 2011 predecessor, which had a much bigger staff.

—Importantly, the 2013 version of the printed Times-Picayune is not terribly different from its predecessor in terms of the type of stories covered.

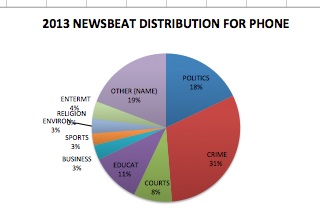

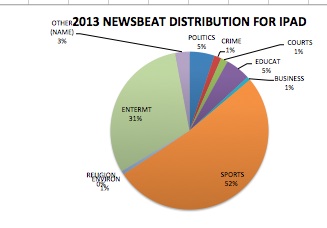

—News presented on digital platforms of Nola in 2013 was more likely to be about lighter subjects such as sports and entertainment, as opposed to politics, education, courts and other traditional core newspaper beats.

—2013 digital stories tended to be “softer.”

—Content on the less-curated phone and tablet apps, where story rotation is more automated, tended to be softer not just than print but also than the Web, where stories are more often hand-selected.

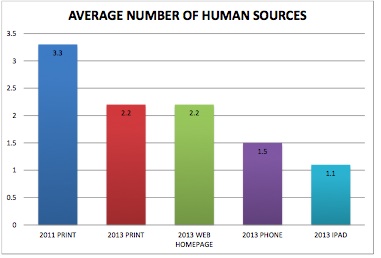

—Critically, stories in 2013 contained fewer sources across all platforms, print and digital, than those in 2011.

Here are some graphics to illustrate the main points.

Hard vs. soft news, by platform: 2011 print, 2013 print, Web, phone, tablet.

Beats, by platform: 2011 print, 2013 print, Web, phone, tablet.

And here’s the all-important sourcing graphic:

The study’s authors believe that the data show clear evidence of a lower-quality product since the changeover, certainly on the digital platforms.

“The falling number of sources from 2011 (~3) to 2013 (~1 or 2) across platforms suggest as well that the stories were put together more quickly in 2013, with less reporting work put into each one,” the report says.

In an interview, Mayer says the study shows the new regime generally produces “a much softer, lower-quality product.” Either, she says, Nola “made a decision that people on digital don’t deserve the same kind of news that print readers do…or if the economics are based on clicks, you just put up whatever will get clicks.”

Amoss raises several objections in an email to me, which I’ll quote in its entirety:

Prof. Mayer’s students’ work represents a significant investment of time in studying The Times-Picayune and NOLA.com. We welcome their interest in us and invite them to meet with us. Their study illustrates the difficulties of analyzing a fluid website and comparing it to a printed product. We had several reactions to it:

—It’s not surprising to us that the students found little difference in comparing our print editions of 2011 with this year’s. News, then and now, whether breaking or enterprise, remains at the center of our work. Our reporters and photographers, the largest news-gathering operation in this region, remain focused on it.

—As for the web site, the study doesn’t fairly represent our work. Contrary to the graphic in the study, the journalistic content that flows through our responsively designed site over a 24-hour period is the same, whether it’s read on a tablet, a mobile device or a desktop computer.

—Using occasional snapshots of the Top Box modules of our homepage as a measurement of the proportions we devote to various kinds of content is a flawed methodology. It doesn’t take into account the ebb and flow of the news cycle over each 24-hour period and the variety of other journalism, including coverage of business, politics, crime, food and dining, music etc. that courses through the interior pages of the site. Focusing on the Top Box doesn’t begin to provide a statistically valid measure of “soft” versus “hard” news.

—Stories on the site are frequently reported incrementally. An early, quick dispatch about a trial or a city council meeting will necessarily have fewer sources than its full-fledged version at the end of the day. To conclude that we’ve generally lowered our standards for sourcing is a fallacy.

—Given that everything that appears in the printed Times-Picayune also appears at some point on NOLA.com, it seems odd to conclude that the printed edition remains essentially the same but that the site reflects “softer” content.

Though there’s considerable overlap between digital and print journalism, they SHOULD be different from each other in approach and delivery, in pace and intensity. A good news site’s homepage should be in constant flux. A good printed newspaper should offer depth, context and curation. We believe we must do both.

A couple of thoughts:

Amoss has a point that the study’s failure to track the digital platforms over a 24-hour period leaves open the possibility that the study missed “harder,” better-sourced stuff that may have appeared at times other than when students took their screenshots. That doesn’t mean the study’s findings are wrong, only that more work needs to be done.

Amoss also has a point that since the printed Times-Picayune is similar in both periods, and since all printed material flows through all digital platforms, those platforms do “at some point” include the “harder” and better-sourced material.

He’s also right to note that since the exact same content flows across all digital platforms, the fact that the tablet content, for instance, should register far “softer” than the Web is an anomaly that needs explaining.

Here, again, a study that tracks curation patterns over 24-hour periods, and measures the length of time stories are allowed to remain on the home page, would be useful.

But I disagree with Amoss that it’s unfair to focus on the top box of a news site’s homepage and ignore the “interior pages of the site.” All content analyses have to restrict the scope of their research, and there is no better place to look for a news organization’s priorities than at its front page and its home page.

And while it’s true that all printed material (found here to be harder and better-sourced) flows across all digital platforms, it’s also true that the operation produces in total fewer full printed editions weekly than before. Instead of seven full papers, the operation produces three full papers, three tabloids, plus an early, bulldog edition of the Sunday paper.

Also, I understand that Nola stories are often reported incrementally, with sources added throughout the day, but I’m far from convinced that that practice alone would explain the sourcing discrepancy between the two periods, particularly since the 2013 print paper was found to have fewer sources than its 2011 counterpart.

Finally, I don’t think the snapshot approach is invalid at all. There is no special reason, for instance, that a snapshot approach would tend to collect “softer” and less-well-sourced stories on digital platforms, and not “harder,” better-sourced stories. Nothing in the methodology would tend to produce such an outcome, yet that is what the data show.

In the end, the print-to-print comparison on sourcing is, for me, telling: Stories in 2011 had more sources than those in 2013. Period. This has nothing to do with digital. And let’s remember, 2011 was far from the T-P’s heyday; Advance had already put the paper through severe cutbacks beforehand.

The study should be taken as a first attempt to compare news content before and after a self-described “digital-first” changeover. Amoss is right that the study also exposes the difficulties of trying to track the content of a digital news operation, which is by definition more fluid, varied, and changeable. He is also correct that comparing print to digital outputs is problematic. One, as he says, should look different from the other.

Still, I find this to be a meaningful, if not definitive, comparison of the news judgments of the Nola.com operation versus those of its Times-Picayune predecessor. It reveals a sourcing drop-off between the two periods and suggests a more sensationalist, soft focus of the digital product.

In that way, it is an important start in understanding the production imperatives of digital news more generally.

Here is the full report, related graphs, and raw data:

Final Report of T-P Content Analysis by deanstarkman

T-P Aggregate Graphs by deanstarkman

T-P Study Raw Data by deanstarkman

Dean Starkman runs The Audit, CJR’s business section, and is the author of The Watchdog That Didn’t Bark: The Financial Crisis and the Disappearance of Investigative Journalism (Columbia University Press, January 2014). Follow Dean on Twitter: @deanstarkman.