The nuclear crisis in Japan keeps on revealing how the news media struggle to report accurately and thoroughly about risk. The latest example is coverage of a new Union of Concerned Scientists (UCS) analysis, which estimates that radiation from Chernobyl will cause many more cancer deaths than the UN officially estimated.

To be fair, radiation risk is complex and controversial even among the experts. But this example of sloppy risk reporting isn’t about the challenges of covering a complex issue, nor is this the standard lament about breathless alarmism/sensationalism. This is about a far more common and far more troubling problem that pervades news coverage of risk. And it’s an easy one to fix.

Here’s the background: The World Health Organization estimates that the lifetime radiation-induced cancer death toll from Chernobyl will be about 4,000, out of the 600,000 people exposed to higher doses of radiation—about two thirds of one percent. Several anti-nuclear groups say the number is much higher. One of them, the UCS, says the WHO made a mistake by considering only the population that got higher doses, since the default assumption of most government radiation regulations is that the only safe dose of radiation is NO dose, so even a little radiation raises the risk of cancer somewhat. And since the radiation from Chernobyl spread around the entire globe, a fair consideration of the cancer threat needs to consider how much it raised the risk for everybody.

Based on how much of a dose people in various regions got, the UCS calculated that the total global radiation-induced cancer death toll from Chernobyl will be 27,000. That’s a lot more worrisome than 4,000 (“6 times higher” than the WHO, the UCS notes) and certainly worthy of coverage, which it got from news organizations including the Los Angeles Times, Time magazine, The Australian (which, for instance, reported that the UCS predicted a death toll of 50,000), and the BBC/PRI/WGBH radio program PRI’s The World.

They all reported on the new higher total death toll from Chernobyl. But that’s not enough. The ‘absolute’ risk, the total number of victims, is important for putting any risk in perspective. But readers, viewers, and listeners also need the ‘relative’ risk: the percentage of expected victims out of the whole population, or the odds that the risk will happen to any one person. That number also shapes how big or small a risk seems. Probability is something news consumers want to know, and something any good news report about risk should tell them. This is part of Risk Reporting 101. But none of the coverage of the UCS analysis included that vital second number.
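The arithmetic behind that second number is simple. Here is a minimal sketch in Python using the WHO figures cited earlier (about 4,000 expected deaths among 600,000 people exposed to higher doses). The function name `share_of_population` is hypothetical, chosen for illustration. The same division would apply to each UCS regional subgroup, but those population figures are not given in this text, so they are left out.

```python
def share_of_population(expected_deaths, exposed_population):
    """Return expected deaths as a fraction of the exposed population."""
    return expected_deaths / exposed_population


# WHO figures as quoted in this article
who_deaths = 4_000
who_population = 600_000

who_share = share_of_population(who_deaths, who_population)

# Report the total and the share side by side, since each tells half the story
print(f"Total expected deaths: {who_deaths:,}")
print(f"Share of exposed population: {who_share:.2%}")
```

Reporting both lines together is the point: the first says how many people, the second says how likely it is for any one of them.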

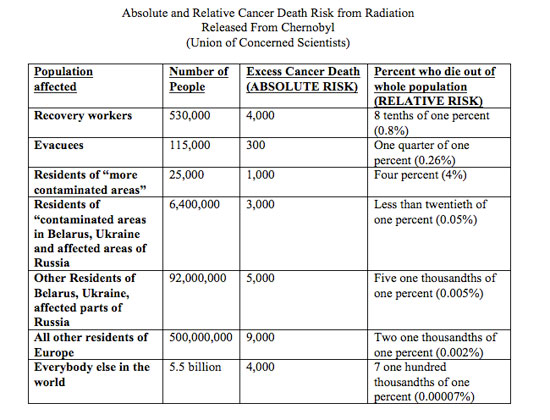

To make the importance of both absolute and relative risk clear, here’s a chart of what the UCS found, using their absolute numbers and adding the relative risk in the column on the right. (The UCS did not analyze relative risk, which is not surprising, since they are avowedly anti-nuke, and the relative risk for their various regional population subgroups is just as low as, or in some cases far lower than, the WHO’s odds for the population they included: less than 1 percent.)

(The UCS total is 26,300. They rounded it up to 27,000 in their release.)

It’s one thing if an advocacy group wants to use numbers selectively to strengthen their case. Fair enough. That’s what advocates do. But news reporting on risk is supposed to give news consumers all the information they need to make informed, healthy choices. Coverage of risk that fails to include both absolute and relative risk fails that basic test.

So why does this happen? Sometimes it’s simply because the bigger, scarier number makes for a better story. In April 2004, a Boston Globe story reported that the risk of dying on the job in Massachusetts rose 65 percent in the previous year. That’s the relative increase, and it sounds dramatic. The story, headlined “Mass. workplace deaths rose last year,” got great play, at the top of the front page of the Metro Section. But the story failed to note that the big percentage (relative) increase meant that instead of fifteen out of every million workers dying on the job, the absolute risk rose only to twenty-five in a million. Pretty small. Not as good a story.
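To see how the same change can sound alarming or modest, here is a short Python sketch using the per-million figures from the Globe example above. The function name `relative_increase` is hypothetical. Note that the per-million figures quoted here appear to be rounded, so the computed percentage comes out at roughly 67 percent rather than exactly the 65 percent the story reported.

```python
def relative_increase(before, after):
    """Return the fractional change from `before` to `after`."""
    return (after - before) / before


# Workplace deaths per million workers, as quoted in this article (rounded)
before_per_million = 15
after_per_million = 25

rel = relative_increase(before_per_million, after_per_million)
abs_increase = after_per_million - before_per_million

# The same change, expressed two ways
print(f"Relative increase: {rel:.0%}")
print(f"Absolute increase: {abs_increase} more deaths per million workers")
print(f"Risk after the increase: {after_per_million / 1_000_000:.4%} per worker")
```

A story that carries only the first line leaves out how small the underlying risk still is.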

The UCS analysis illustrates another big reason why both risk numbers aren’t included. Few journalists understand the difference, and fewer still realize the importance of including both. So they simply never ask, which makes them vulnerable to the spin of advocates who selectively play up whichever number strengthens their case, and play down or leave out the number that weakens their case, as the UCS press release did.

I know the journalists involved in coverage of the UCS analysis at PRI’s The World, and they are competent, fair professionals for whom I have a great deal of respect. But when they interviewed the UCS scientist who did the analysis, they simply never asked about the relative risk number. I made the same mistake all the time back in my reporting days. I just didn’t understand this critical part of risk until I joined the Center for Risk Analysis at the Harvard School of Public Health.

Absolute and relative risk are part of the basic “Who, What, When, Where, Why, How” questions that any story about risk should answer, which most journalists don’t know to ask because few are trained in these specifics. I’ve written about many others in a previous post. I’d have been a better reporter had I known them back then. They’re offered here in the hopes of making journalism a little better, and helping the public make more informed, intelligent choices about the risky world in which we live.

Potential conflict of interest disclaimer: I have consulted for several clients in the nuclear field about the need to communicate more openly and honestly: the International Atomic Energy Agency, the Swedish Nuclear Authority, the National Radiological Emergency Preparedness Association, and the Nuclear Energy Institute.

David Ropeik is an instructor in the Harvard University Extension School’s Environmental Management Program, author of How Risky Is It, Really? Why Our Fears Don’t Always Match the Facts, creator of the in-house newsroom training program “Media Coverage of Risk,” and a consultant in risk communication. He was an environment reporter in Boston for twenty-two years and a board member of the Society of Environmental Journalists for nine years.