Executive Summary

After decades of research and development, virtual reality appears to be on the cusp of mainstream adoption. For journalists, the combination of immersive video capture and dissemination via mobile VR players is particularly exciting. It promises to bring audiences closer to a story than any previous platform.

Two technological advances have enabled this opportunity: cameras that can record a scene in 360-degree, stereoscopic video and a new generation of headsets. This new phase of VR places the medium squarely into the tradition of documentary—a path defined by the emergence of still photography and advanced by better picture quality, color, film, and higher-definition video. Each of these innovations allowed audiences to more richly experience the lives of others. The authors of this report wish to explore whether virtual reality can take us farther still.

To answer this question, we assembled a team of VR experts, documentary journalists, and media scholars to conduct research-based experimentation.

The digital media production company Secret Location, a trailblazer in interactive storytelling and live-motion virtual reality, was the project’s production lead, building a prototype 360-degree, stereoscopic camera and spearheading an extensive post-production and development process. CEO James Milward and Creative Director Pietro Gagliano helmed the Secret Location team, which also included nearly a dozen technical experts.

PBS’s Frontline, in particular Executive Producer Raney Aronson-Rath, Managing Editor (digital) Sarah Moughty, and filmmaker Dan Edge, led the editorial process and enabled our virtual reality experiment as it was shot alongside an ongoing Frontline feature documentary.

The Tow Center for Digital Journalism facilitated the project. The center’s former research director and current assistant professor at UBC, Taylor Owen, and senior fellow Fergus Pitt embedded themselves within the entire editorial and production process, interviewing participants and working to position the experiment at the forefront of a wider conversation about changes in journalistic practice.

This report has four parts.

First, it traces the history of virtual reality, in both theory and practice. Fifty years of research and theory about virtual reality have produced two concepts which are at the core of journalistic virtual reality: immersion, or how enveloped a user is, and presence, or the perception of “being there.” Theorists identify a link between the two; greater levels of immersion lead to greater levels of presence. The authors’ hypothesis is that as the separation shrinks between audiences and news subjects, journalistic records gain new political and social power. Audiences become witnesses.

Second, we conducted a case study of one of the first documentaries produced for the medium: an ambitious project, shot on location in West Africa with innovative technology and a newly formed team. This documentary was a collaboration between Frontline, Secret Location, and the Tow Center for Digital Journalism. The authors have documented its planning, field production, post-production and distribution, observing the processes and recording the lessons, missteps, and end results.

Third, we draw a series of findings from the case study, which together document the opportunities and challenges we see emerging from this new technology. These findings are detailed in Chapter 4, but can be summarized as:

- Virtual reality represents a new narrative form, one for which technical and stylistic norms are in their infancy.

- The VR medium challenges core journalistic questions evolving from the fourth wall debate, such as “who is the journalist?” and “what does the journalist represent?”

- A combination of the limits of technology, narrative structure, and journalistic intent determines the degree of agency given to users in a VR experience.

- The technology requirements for producing live-motion virtual reality journalism are burdensome, non-synergistic, rapidly evolving, and expensive.

- At almost every stage of the process, virtual reality journalism is presented with tradeoffs that sit on a spectrum of time, cost, and quality.

- The production processes and tools are mostly immature and are not yet well integrated or in common use; the whole process from capture through to viewing requires a wide range of specialist, professional skills.

- At this point in the medium’s development, producing a piece of virtual reality media requires a complete merger between the editorial and production processes.

- Adding interactivity and user navigation into a live-motion virtual reality environment is very helpful for journalistic output, and also very cumbersome.

- High-end, live-motion virtual reality with added interactivity and CGI elements is very expensive and has a very long production cycle.

- This project’s form is not the only one possible for journalistic VR. Others, including immediate coverage, may be accessible, cheaper, and have journalistic value.

Finally, we make the following recommendations for journalists seeking to work in virtual reality:

- Journalists must choose a place on the spectrum of VR technology. Given current technology constraints, a piece of VR journalism can be of amazing quality, but with that comes the need for a team with extensive expertise and an expectation of long turnaround, demands that require a large budget as well as timeline flexibility. Or it can be of lower production quality, with quicker turnaround, and thereby less costly. If producers choose to include extensive interactivity, with the very highest fidelity and technical features, they are limiting their audience size to those few with high-end headsets.

- Draw on narrative technique. Journalists making VR pieces should expect that storytelling techniques will remain powerful in this medium. The temptation when faced with a new medium, especially a highly technical one, is to concentrate on mastering the technology, often at the expense of conveying a compelling story. In the context of documentary VR, there appear to be two strategies for crafting narrative. The first is to have directed action take place in front of the “surround” camera. The second is to adulterate the immersive video with extra elements, such as computer-generated graphics or extra video layers. The preexisting grammar of film is significantly altered; montages don’t exist in a recognizable way, while the functions of camera angles and frames change as well.

- The whole production team needs to understand the form, and what raw material the finished work will need, before production starts. In our case, a lack of raw material that could be used to tell the story made the production of this project more difficult and expensive. While the field crew went to Africa and recorded footage, that footage only portrayed locations. Although those locations were important, the 360-degree field footage—on its own—was missing anything resembling characters, context, or elements of a plot. Journalists intending to use immersive, live-action video as a main part of their finished work will need to come back from the field with footage that can be authored into a compelling story, in the VR form. It is very hard to imagine this task without the field crew’s understanding of the affordances, limitations, and characteristics of the medium.

- More research, development, and theoretical work are necessary, specifically around how best to conceive of the roles of journalists and users—and how to communicate that relationship to users. Virtual reality allows the user to feel present in the scene. Although that is a constructed experience, it is not yet clear how journalists should portray the relationship between themselves, the user, and the subjects of their work. The conclusions section lists many of the relevant questions and their implications. Journalists, theorists, and producers can and should review these ideas and start to develop answers.

- Journalists should aim to use production equipment that simplifies the workflow. Simpler equipment is likely to reduce production and post-production efforts, bringing down costs and widening the pool of people who can produce VR. This will often involve tradeoffs: in some cases simpler equipment will have reduced capability, for example cameras that shoot basic 360-degree video instead of 360-degree, stereoscopic video. Here, journalists will need to balance simplicity against other desirable characteristics.

- As VR production, authoring, and distribution technology is developed, the journalism industry must understand and articulate its requirements, and be prepared to act should it appear those needs aren’t being met. The virtual reality industry is quickly developing new technology, which is likely to rapidly reduce costs, give authors new capabilities, and reach users in new ways. However, unless the journalism industry articulates its distinct needs, and the value in meeting those needs, VR products will only properly serve other fields (such as gaming and productivity).

- The industry should explore (and share knowledge about) many different journalistic applications of VR, beyond highly produced documentaries. This project explored VR documentary in depth. However, just as long-form documentary is not the only worthwhile form of television journalism, the journalism industry may find value in fast-turnaround VR, live VR, VR data visualization, game-like VR, and many other forms.

- Choose teams that can work collaboratively. This is a complex medium, with few standards or shared assumptions about how to produce good work. In its current environment, most projects will involve a number of people with disparate backgrounds who need to share knowledge, exchange ideas, make missteps and correct them. Without good communication and collaboration abilities, that will be difficult.

At a time defined by rapid technological advances, it is our collective hope that this project can serve as the start of a thoughtful industry and scholarly conversation about how virtual reality journalism might evolve, and the wider implications of its adoption. In short, this project seeks to investigate what’s involved in making virtual reality journalism, to better understand the nonfiction storytelling potential of VR, to produce a good work of journalism that affords the audience a new understanding of elements of the story, and to provide critical reflection on the potential of virtual reality for the practice of journalism.

What follows is our attempt to articulate a moment in the evolution of VR technology and to understand what it means for journalism—by creating a virtual reality film, as well as reflecting on its process, technical requirements, feasibility, and impact.

PDFs and printed copies of this report are available on the Tow Center’s Gitbook page.

Introduction

What Is VR?

Virtual reality (VR) is an immersive media experience that replicates either a real or imagined environment and allows users to interact with this world in ways that feel as if they are there. To create a virtual reality experience, two primary components are necessary. First, one must be able to produce a virtual world. This can either be through video capture—recording a real-world scene—or by building the environment in Computer Generated Imagery (CGI). Second, one needs a device with which users can immerse themselves in this virtual environment. These generally take the form of dedicated rooms or head-mounted displays.

Cumbersome, largely lab-based technologies for VR have been in use for decades and theorized about for even longer. But recent technological advances in 360-degree, 3D-video capture; computational capacity; and display technology have led to a new generation of consumer-based virtual reality production. Over the past three years, an ecosystem of companies and experimentation has emerged on both the content and dissemination sides of VR. This renaissance can be traced to the development of the Oculus Rift headset. Developed by Palmer Luckey, who was frustrated with the state of headset technology, the Oculus headset was first launched as a wildly popular Kickstarter campaign in 2012. Less than two years later, Facebook bought the company for $2 billion. Meanwhile, a wide range of other headsets has emerged (described below), and virtual reality is quickly becoming Silicon Valley’s next gold rush.

Devices have been evolving rapidly, and so has the content for them. While the video game industry has driven the majority of content development, new camera technology also enables virtual reality experiences based on video capture of real events. These new cameras are being placed at sporting events, music concerts, and even on helicopters and drones.

The authors of this report believe it is at this intersection of new headset technology and live-motion content production that there emerges a valuable, timely opportunity for examining what this all means for journalism.

So as to reflect on the challenges and potential of virtual reality within journalism, this project brought together a unique mix of participants: technologists who could build the prototype-camera technology and render a VR experience (Secret Location), a journalistic organization willing to experiment with driving its reporting and storytelling (Frontline), and a research group committed to studying, critically reflecting on, and contextualizing the entire process (the Tow Center for Digital Journalism).

What follows is our attempt to articulate a moment in the evolution of VR technology and to understand what it means for journalism.

Why Now?

Technophiles have followed virtual reality (VR) for a long time, but a number of recent technological advances have finally placed the medium on the cusp of mainstream adoption. Oculus has released its Rift headset to developers, while the company’s consumer headset, Crescent Bay, will reach customers in early 2016; Samsung has released Gear VR; Sony has a device in development; and Google has built a clandestine intervention called Cardboard (a simple VR player made of cardboard, Velcro, magnets, a rubber band, two biconvex lenses, and a smartphone). Together these advances, along with leaps in video technology, screen quality, and web-based distribution portals, have inspired a surge in media attention and developer interest.

We now have the computational power, screen resolution, and refresh rate to play VR with a small and inexpensive portable headset. VR is a commercial reality. We know that users already enjoy its capability to play video games, sit courtside at a basketball game, and view porn, but what about watching the news or a documentary? What is the potential for journalism in virtual reality?

Our goal with this project was to explore the extension of factual filmmaking onto this new platform, and to reflect on the implications of doing so. Is live-motion virtual reality a new frontier for documentary journalism, or will the expense, cumbersome production process, and limited distribution channels render it a medium unsuitable for the field?

Virtual reality is not new. A generation of media and technology researchers have long used bulky prototype headsets and VR “caves” to experiment with virtual environments. Research focused mostly on how humans respond to virtual environments, especially when their minds are at least partially tricked into thinking they are somewhere else. Do we learn, care, empathize, and fear as we do in real life? This research is tremendously important as we enter a new VR age, out of the lab and into people’s homes.

In addition to the headsets, camera technology is set to transform the VR experience. While computer graphics and gaming dominated early content demonstrations for the Oculus Rift and similar devices, new, sometimes experimental cameras are able to capture live-motion, 360-degree and stereoscopic virtual reality footage.

While 360-degree cameras have been around for years, the new generation of systems is also stereoscopic, adding greater depth perception. This added dimension, along with the spatial and temporal resolution of current VR headset displays, can get users closer to what researchers call presence, or the feeling of being there.

These new cameras, feeding content to a new and exciting medium, open up a tremendous opportunity for journalists to immerse audiences within their reporting, and for users to experience journalism in powerful new ways.

We are all acutely aware that this emerging medium, while exciting, represents significant changes for the practice of journalism. Virtual reality presents a new technical and narrative form. It requires new cameras, new shooting and editing processes, new viewing infrastructure, and new levels of interactivity, and it can leverage distributed networks in new ways.

The medium itself raises important questions about the relationship and positionality of journalists and audiences. Virtual reality affords users increased control over what, in a scene, they pay attention to. The medium also supports interactive elements, although virtual reality is not the first medium to pull storytelling from its bound, linear form. This is potentially a far more fluid space, where the audience has a new (though still limited) kind of agency in how it experiences the story. This appears to change how journalists must construct their stories and their own places in them. It also changes how audiences engage with journalism, bringing them into stories in a visceral, experiential manner not possible in other mediums.

More conceptually, virtual reality journalism also offers a new window through which to study the relationship between consumers of media and the representation of subjects. Whereas newspapers, radio, television, and social media each brought us closer to being immersed in the experience of others, virtual reality has the potential to go even farther. A core question is whether virtual reality can evoke feelings of empathy and compassion similar to those evoked by real-life experiences. As will be discussed below, recent work has shown that virtual reality can create a feeling of “social presence” (the feeling that a user is really “there”), which can engender far greater empathy for the subject than in other media representations. Others have called this experience “co-presence” and are exploring how it can be used to bridge the distance between those experiencing human rights abuses and those in the position to assist them.[@humanrights] For journalists, the promise is that VR will offer audiences greater factual understanding of a topic. Could users walk away from a journalistic VR experience with more knowledge than they might get from watching a traditional film or reading a newspaper story?[@knowledge]

We also imagine that journalists may need to produce content for the VR medium simply to keep up with audience expectations. Generations that have grown up with rich media on interactive platforms may expect immersive, visceral experiences. Current audiences for news and documentary on linear TV skew older[@tvaudience], whereas more than 70 percent of U.S. teens play video games, according to Pew Research.[@pew] Pew also notes that young audiences are heavy users of interactive, visual media like Snapchat and Instagram (admittedly much less cumbersome platforms than VR headsets). The new storytelling method of the current era is virtual reality, and the media industry expects it to attract major audiences.

So, although the medium has decades of history, it is the recent surge of technical and social interest, its new relevance for journalism, the breadth of the communications puzzles, and the industry’s pressing need to keep innovating that have all come together to make this research opportune.

Report Outline

In order to explore the potential of VR for journalism, our project team gathered journalism scholars (Tow Center), virtual reality experts (Secret Location), and world-leading documentary filmmakers (Frontline).

CEO James Milward and Creative Director Pietro Gagliano helmed the Secret Location team, which included nearly a dozen technical experts. PBS’s Frontline, in particular Executive Producer Raney Aronson-Rath, Managing Editor (digital) Sarah Moughty, and filmmaker Dan Edge, led the editorial process and enabled our virtual reality experiment as it was shot alongside an ongoing feature documentary. The Tow Center for Digital Journalism facilitated the project. The center’s former research director and current assistant professor at UBC, Taylor Owen, and senior fellow Fergus Pitt embedded themselves within the editorial and production process, interviewing participants and working to position the experiment at the forefront of a wider conversation about changes in journalistic practice.

We begin this report by reviewing the history of virtual reality journalism, placing the current socio-technological moment in a context of decades of research and experimentation. We identify key concepts and theories we believe should be at the center of virtual reality journalism. We know that virtual reality has a rich history in forward-thinking research labs and is sparking interest in the fields of digital media and communications, but it is important to ground this study in the origins of its journalistic utility and how this history might shape our understanding of the opportunity and limitations.

We then outline our own experiment with live-motion virtual reality journalism—a VR documentary on the Ebola outbreak in West Africa. Secret Location, a trailblazer in interactive storytelling and live-motion virtual reality, built a prototype, 360/3D camera and took the lead on extensive, post-production development processes.

Having outlined the background to this experiment, we provide an analysis of the case study, detailing both technical and journalistic opportunities and challenges. First, we explore the technical requirements for doing live-motion virtual reality. This is a nascent practice, and much equipment and expertise are required for each stage of production. The technology is evolving quickly, but it is still possible to divide this process into three identifiable stages, each with its own technologies and required skill sets.

- Capture via new camera and sound;

- Post-production using a mix of image processing, motion graphics, CGI, and 3D-modeling software;

- Distribution via a spectrum of emerging headset technologies and their associated content stores.

Finally, for journalists, what does it mean to report in this new medium? We already know that producers need advanced equipment and technical skill sets, but the requirements for reporting and storytelling may also change. Does virtual reality challenge existing divisions between editorial and production in ways that push journalism in either problematic or beneficial directions? VR could also force us to rethink narrative form, bringing discussions about nonlinear storytelling and user agency into journalism.

This mixed-method approach, of simultaneously experimenting with and studying a new journalism technology, has provided notable benefits unavailable when theorizing from a distance. After being directly involved in all stages of the production process, we’ve accessed it in great detail and depth.

It is our collective hope that this project can serve as the start of a thoughtful industry and scholarly conversation about how virtual reality journalism might evolve, and the wider implications of its potential mainstream adoption. This project investigates what’s involved in making virtual reality journalism and provides a critical reflection on the potential of its practice in journalism.

VR as Journalism

Virtual reality has a long history in both the popular imagination and a wide range of scholarly experimentation and commercial development. In engaging with this literature, this report seeks to draw a line through these historical, theoretical, and technical discourses so as to tie the evolution of the form to the practice of journalism.

The Continuum of Visual Mediums

Virtual reality journalism can be seen as part of a continuum of visual mediums that have long influenced journalism, one that arguably shifts core concepts of representation and immersion. This continuum is best understood by looking at the evolution of how we learn about international events. From roughly the 1920s to the 1970s, the photograph was the dominant medium through which photojournalists disseminated images, aiming to educate the public about global events. While the photograph was initially hailed as a means to objectively convey information and provide a “true” account of events happening elsewhere, Susan Sontag’s Regarding the Pain of Others questioned the objectivity construct, calling attention to the ethical dilemma of consuming images of pain and suffering and noting that there is always someone behind the camera deciding what to keep in its frame and what to exclude. Television reporting arguably overtook the dominance of the photograph in the mid-1970s, when TV began to displace more traditional forms of print media.[@photography]

In the 1990s, visual journalism evolved along two parallel technological developments. Digital technology accelerated access to video, while computational technology enabled more interactive forms of media. Audiences began to witness news in a range of modes, including CD-ROMs, newsgames, websites, and social and mobile platforms. This report does not discuss journalism within those particular mediums, because while these platforms support interactivity, they are vastly different from VR in that they are not necessarily immersive (a concept explained in more detail below).

As immersive journalism researchers Nonny de la Peña and others outline, there is a long history of attempts to place the journalism audience into the story.[@knowledge] Beginning with early accounts of video-based, foreign reporting, through to experimentations placing journalism into gaming environments and interactive media worlds, reporters have explored ways of giving their audiences both agency and higher degrees of presence in a story. For the most part, these efforts can be categorized as interactive journalism. De la Peña et al. describe the bounds of this form:

The user enters a digitally represented world through a traditional computer interface. There is an element of choice, where the user can select actions among a set of possibilities, investigating different topics and aspects of the underlying news story. This offers both a method of navigation through a narrative, occasionally bringing the user to documents, photographs, or audiovisual footage of the actual story, and it also offers an experience.[@knowledge2]

While these approaches provide audience members with agency and some choice in how they experience a narrative, there are limitations around the ability to make news consumers feel like they are actually “there.”

Even still, our working hypothesis is that this evolution of visual media technologies has produced a parallel continuum of witnessing, with each advance bringing the viewer closer to the experience of others—from print photography; to television; to interactive, immersive and social digital media; and now live-motion virtual reality. Moving along the continuum, the consumer becomes an increasingly active participant in the experience of witnessing, as the barriers between self and the other begin to erode. What’s more, virtual reality offers the promise of further breaking the “fourth wall” of journalism, wherein those represented become individuals possessing agency, rather than what Liisa Malkki has referred to as “speechless emissaries.”[@emissaries] If this is the case, it will be because core concepts of virtual reality—such as immersion and presence—offer a qualitatively different media experience than other forms of visual representation.

The Foundations of Immersive Media Research

Specific journalism-focused research on computer-generated, immersive video and live-action, immersive virtual reality is nascent. Some early studies have considered the potential of “immersive journalism” in virtual reality,[@reality; @reality2; @reality3] and others have considered the necessarily changing nature of narrative form that will ensue in the VR space.[@space; @space2; @space3; @space4] Both draw extensively on earlier work on interactive, 2D media.

In order to broaden the baseline for a journalism-focused virtual reality discourse, it is valuable to draw upon the comparatively extensive scholarship about the implications of virtual reality on human-computer interaction and the process of visual representation. Scholars have already studied VR from the standpoint of human-computer interaction, seeking to better understand:

- the technology’s implications for self-perception;

- the technological factors that contribute to greater “presence,” exploring the interaction of users with CGI avatars;

- and the impact virtual reality has on social stereotypes and memory.

There’s also a significant amount of research from the field of health sciences, where virtual reality is used as a low-risk simulation tool for patients with eating disorders, chronic pain, and autism[@autism]. Researchers have used virtual reality to study social phenomena, such as interpersonal relations and emotions like empathy, as well.[@empathy]

Throughout all this literature, a central and thematic question emerges about how closely experiences in virtual reality replicate real senses, emotions, and memories. While much of the existing research certainly does not overlap with journalism or even communication studies, it is still necessary that new VR practitioners in journalism closely monitor its observations.

Indeed, the research has produced two virtual reality concepts of particular relevance to journalism: immersion and presence. Both, to varying degrees, seek to describe the feeling that one is experiencing an alternate reality by way of a virtual system. It is this feeling of experiencing the other that is critical to journalistic application.

Immersion

First, immersion is generally defined as the feeling that someone has left his or her immediate, physical world and entered into a virtual environment.[@environment] In the virtual reality field, this is achieved via a headset or spaces known as Cave Automatic Virtual Environments (CAVEs). A number of scholars have sought to define both the characteristics and technological requirements for achieving states of immersion. Seminal work by Witmer and Singer describes a feeling of being enveloped by, included in, or in interaction with a digital environment.[@environment2] They identify particular factors which promote immersion: the ease of interaction, image realism, duration of immersion, social factors within the immersion, internal factors unique to the user, and system factors such as equipment sophistication.[@environment] Others are more technologically deterministic, focusing on the specific technology requirements to achieve what is called a multimodal, sensory input. They say that if an experience excludes the outside world, addresses many senses with high fidelity, surrounds the user, and matches the user’s bodily movements, it will create a sense of physical reality and be highly immersive.[17]

The concept of immersion is widely used outside of virtual reality literature and is often applied to describe a wide range of digital journalism projects involving interactive 2D and gaming. In this sense, the degree of immersion can be interpreted on a spectrum ranging from scenarios in which the user is offered some agency and a first-person perspective, to the full VR experience wherein the user is embedded enough in the media to achieve a sense of altered reality.

Presence

Presence is often discussed in the context of immersive VR. Indeed, Witmer and Singer argue that there is a correlation between a greater feeling of immersion and a greater potential for feeling presence.[@environment2] The concept of presence is loosely defined as the feeling of “being there.” Its definitions all describe a state in which the user is taken somewhere else via technology and truly feels transported. They are all, however, theoretical constructs that place differing thresholds on the need for an absolute sense of detachment from physical reality. Kim and Biocca see presence as a combination of the “departure” from physical reality and the “arrival in a virtual environment.”[@environment3] Meanwhile, Lombard describes it as the moment when one is disintermediated from the technology that is creating the immersion.[@immersion] Zahorik and Jenison suggest that presence is achieved when one reacts to a virtual environment as he or she would react to the physical world.[@world]

The core value of virtual reality for journalism lies in this possibility for presence. Presence may engender an emotional connection to a story and place. It may also give audiences a greater understanding of stories when the spatial elements of a location are key to comprehending the reality of events. Nonny de la Peña et al. identify a reaction in which users respond to a media experience as if they are actually living through it, even though they know it is not real. They call this “Response As If Real” (RAIR). This response is the primary characteristic distinguishing immersive media from interactive media. A particularly powerful characteristic of the RAIR effect is, they say, the fact that it requires a very low level of fidelity. Even when the sophistication and fidelity of the technology are limited, users react to immersive experiences in very real ways. This, in part, explains why relatively low-quality headsets (such as Google Cardboard) remain powerful despite their computational inferiority to more expensive systems. For de la Peña et al., a combination of three variables determines the journalistic value of the RAIR effect: the representation of the place in which the experience is grounded, the feeling that events being experienced are real, and the transformation of the user’s positionality into a first-person participant.

It is worth noting that much of the early work studying immersive environments was conducted with computer-generated visuals, primarily in CAVEs, which allowed for manipulation by researchers. These environments did not necessarily replicate or truly mirror reality, and they generally relied on computer-generated avatars and scripted communication.

CAVE-based experiences derive from an entirely different generation of VR, and they operate very differently from the current wave of devices. Aside from the obvious differences between immersive media delivered into rooms versus head-mounted displays, advances in camera and post-production technology now make it possible to film live-motion VR much more readily. While this 360-degree video still undergoes significant computational post-production, we hypothesize that its effects on audiences will create a new category for research. As this kind of video starts its journey as light from the physical world, captured by a sensor, it attempts to mimic the human eye. The end result is as different from computer-generated environments as photography was from illustration. And so there remains a tremendous opportunity for a new phase of virtual reality research.

Frontline Case Study

Project Design

Looking to test both the technical practicalities and potential of VR journalism, we produced a virtual reality documentary on the Ebola outbreak in Sierra Leone, Guinea, and Liberia. Director Dan Edge was already producing a feature documentary for Frontline on the topic and agreed to take on the additional project. What follows is a detailed breakdown of the VR production experience.

Rationale

This research project comes at a time when virtual reality’s value for journalism is largely hypothetical. The industry desperately needs evidence of the platform’s benefits and information about the necessary skills, practices, and equipment.

The industry has only produced a few works of journalism in live-motion virtual reality (The New York Times’s “Walking New York,” the BBC’s Calais “Jungle,” Gannett’s “Harvest of Change,” and Vice News’s “Millions March” and “Waves of Grace”).22 The Associated Press, Gannett, Vice News, Fusion, and The New York Times have all made major commitments to developing journalism in virtual reality. The context and theories described in the preceding chapters suggest that immersive experiences can engender feelings of presence, producing higher levels of engagement (and perhaps empathy) and giving audiences enhanced spatial understanding. However, without multiple examples, the industry is unable to make a judgment about whether the hypothesized value is real.

The next step in assessing VR’s potential within journalism is to understand what is involved in producing the work—a necessity for journalists who want to make virtual reality content, strategists who need to judge the viability of the medium for their own circumstances, and innovators who intend to improve the processes and outcomes.

This case study, we believe, contributes to the knowledge base on these high-level topics, illustrating how virtual reality can be valuable for journalism, as well as chronicling the processes and resources that are involved technically, conceptually, and editorially.

The writing below represents the observations of our core research team about the process of producing a piece of live-motion virtual reality journalism. Taylor Owen and Fergus Pitt had access to all stages of the editorial and development process, and to technical documents, communications between project teams, and project iterations at all points of evolution. The information in the case study therefore draws on the researchers’ observations of key workshops and meetings, structured interviews with team members during the production process, email exchanges, and reviews of technical documents. Raney Aronson-Rath and James Milward, along with their respective teams, both reviewed and contributed to the case study. Readers should note that the authors are not objective observers; Fergus Pitt and Taylor Owen contributed money from the Tow Center’s research fund and the Knight Foundation’s prototype fund that paid for camera equipment and some of Secret Location’s production effort.

Roles and Teams

Below we document the main players involved in producing virtual reality journalism. This list omits a number of people, including some who temporarily substituted into roles or who contributed back-office efforts. Also, this project leveraged elements of Frontline’s traditional television documentary resources, including considerable journalistic research and production planning. Those contributions are not included below. The full credit list for the production is available in the appendices of this report.

Raney Aronson-Rath, Executive Producer, Frontline: editorial development, story selection, commissioning, editorial oversight.

James Milward, Executive Producer and Founder, Secret Location: project development, virtual reality domain knowledge.

Dan Edge, Director and Producer, Frontline and Mongoose Production: 360-degree video and sound recording, direction, scripting.

Luke Van Osch, Project Manager, Secret Location: project management, VR technology documentation and training.

Preeti Gandhi, Producer, Secret Location: creative production, project management, VR post-production, technology-build supervision.

Michael Kazanowski, Motionographer, Secret Location: 360-degree camera and post-production technology development, 360-degree video stitching, immersive video authoring.

Steve Miller, Motionographer, Secret Location: 360-degree camera and post-production technology development.

Taylor Owen, Assistant Professor of Digital Media and Global Affairs, University of British Columbia: project development, research, documentation, and report drafting.

Fergus Pitt, Senior Research Fellow, Tow Center for Digital Journalism: project development, research, documentation, and report drafting.

Pietro Gagliano, Creative Director, Secret Location: digital concept direction.

Andy Garcia, Art Director, Secret Location: motion graphics design and production.

Sarah Moughty, Managing Editor of Digital, Frontline: editorial and production liaison.

Process

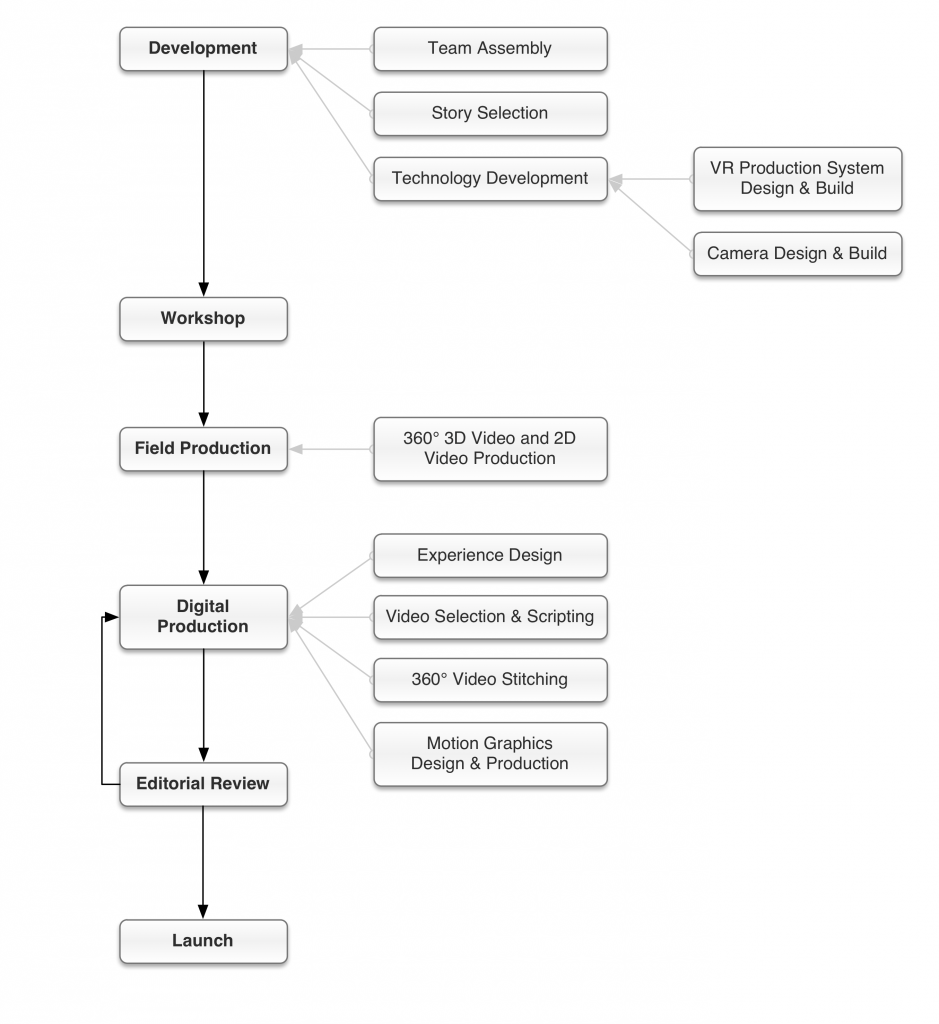

The project moved through stages of development, field production, digital production, and distribution.

While the diagram shows those stages at a high level, more importantly it shows the component tasks. It is simplified and focuses on the VR-specific processes. As such, it omits important aspects of journalistic reporting and research, and many business and operational support contributions without which any production would fail. As with most models, this one sacrifices precision for simplicity. In reality, stages overlapped, some steps were repeated, and the team went backwards and forwards through the phases.

Pre-Production

Team Assembly

This production brought together teams from three groups: Frontline, Secret Location, and the Tow Center for Digital Journalism. Frontline, a prestigious documentary program on the PBS television network, brought long-form, television journalism expertise. Frontline funded the shooting and leveraged the “traditional” television documentary it was already producing. This virtual reality project was part of that program’s continuing push into digital and Internet platforms and products.

Secret Location, an award-winning interactive agency headquartered in Toronto, has been developing virtual reality work since January of 2014—seemingly a short time, but not in the context of this new field. The agency brought critical VR creative experience, its existing hardware and software tool-development, and its established VR workflows. It capitalized on previous experience with 3D game design and production necessary for VR but rare in journalism teams.

The Tow Center for Digital Journalism is a research and development center within the Columbia University Graduate School of Journalism. The Tow Center funded Secret Location’s work, and was part of the project’s fundraising and development process. The Tow Center brought research and analytical background, as well as media-project and team-management experience. For the Tow Center, this project contributes to continuing lines of research in new platforms and production practices for journalism.

Story Selection

During the second half of 2014, Frontline was producing a number of documentaries that were candidates for VR treatment. Each party involved in our project had a number of key criteria for a story that could take advantage of the medium’s attributes: It needed scenes and locations that were important to the audience’s understanding of the narrative. It also had to benefit significantly from a feeling of user presence. However, logistics factored in, too: The camera and microphone would be bulky, intrusive, and tricky to operate. The story’s director would have to be adaptable and willing to explore new technologies.

The team explored the potential of one Frontline production about end-of-life decisions. That project’s director had already gained access to and the trust of several families at Boston’s Brigham and Women’s Hospital and the Dana-Farber Cancer Institute. Despite the likely emotional power of being “in the room” and the potential to demystify a hidden experience around end-of-life interactions, we ultimately rejected the option because we couldn’t figure out an ethical way to be present with a bulky, ugly camera while maintaining the intimacy and sensitivity necessary for the director’s established fly-on-the-wall style of filmmaking.

An Ebola story, already in the early stages of production, became our favored option. Dan Edge, the director, was a filmmaker with experience incorporating innovative digital processes into his productions. The locations throughout West Africa were significant to the story and unfamiliar to many audiences. Nonetheless, the story still presented challenges. Secret Location would have preferred that a cameraperson with 360-degree video experience work alongside the director. That wasn’t possible. In fact, shooting conditions were extremely difficult all around and fast communication between those in the field and the experts in Toronto proved impossible.

Immersive Video Technology Development

Secret Location had already designed and built a camera rig for shooting the necessary 360-degree, stereoscopic video—having found that none of the commercially available equipment met its requirements.ii The agency had used the equipment to record VR video of a basketball game to promote BallUp, a reality TV show. For our project, the team designed and built a slightly updated version, including a 5.1-channel microphone to record surround sound. We also leveraged Secret Location’s existing 360-degree video post-production workflow.

The full production and post-production technology we used is detailed in the appendices of this report.

VR Training and Story Discussion

Once the camera was built and the topic chosen, key members of the production and post-production teams met in Secret Location’s Toronto offices. The agenda of the five-hour meeting was to train the director, Dan Edge, to use the camera; workshop the story; and begin to establish a common understanding of the VR platform and the film’s goals. This workshop was the first time Secret Location and Frontline staff met. The Frontline staff already had a well-formed understanding of traditional documentary and the facts of the Ebola outbreak, but discussion of the VR product was very basic and open-ended. At this point the team had only just started sketching possibilities for the VR experience’s overall structure. Some journalistic concepts were new to the Secret Location team, and director Dan Edge did not have any history working in VR.

Secret Location’s project manager and its camera designers explained the equipment’s operation and handed over four cases containing the cameras, sound gear, batteries, chargers, and stands.

This workshop was the only time prior to field production for face-to-face or online collaboration between the VR-experienced Secret Location team and the journalistically experienced Frontline team.

In the Field

Dan Edge worked as part of a two-person team, shooting 360-degree, stereoscopic video of three scenes in West Africa; two scenes were in a region around 17 miles northwest of the intersecting borders of Guinea, Sierra Leone, and Liberia. The third location was a field clinic in Macenta, 60 miles farther east in Guinea. Throughout this field shoot, Edge and his team primarily focused on collecting material for his traditional Frontline TV documentary.

His first location was at the foot of a tall kola nut tree in the jungle where a 2-year-old named Emile is thought to have come in contact with bats. This tree is the site where some scientists believe the boy contracted the Ebola virus, which killed him in December of 2013. The tree was quite close to Edge’s next location: the village of Meliandou, where Emile lived and where the virus spread to his sister, mother, and grandmother, and then to other victims who passed it on to more than 27,000 people across Guinea, Sierra Leone, and Liberia.

At the kola nut tree, Edge filmed in two short sessions: the first batch of video was just two minutes, interrupted by the onset of a thunderstorm. The second kola nut tree shoot was around eight minutes long.

These shoots were the first time Edge used the prototype camera in the field. To understand the complexity of the filming process, it’s useful to realize that the camera rig was an improvised, immature combination of technologies. It used 12 GoPro cameras held in stereo pairs by a 3D-printed frame. GoPro cameras, while widely used in professional settings, lack certain “high-end” features. They have inconsistencies in their lenses, provide only limited control over color and light levels, and don’t have automatic synchronization or calibration features.

To produce stitchable footage, the field crew needed to provide a visual reference for calibration in post-production. Secret Location’s solution was to tie a piece of string to the top of the camera mount and hold it to the chest of a crewmember, who then walked in a circle around the camera. The string kept the crewmember at a consistent distance from the camera, helping the post-production team identify which pixels from one camera should line up with the next.

Edge’s first batch of footage collected at the kola nut tree suffered from a combination of nonprofessional equipment and difficult, highly variable lighting conditions. The 12 GoPros’ auto-exposure software fluctuated wildly, which made stitching the video files together very laborious.
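To illustrate why mismatched exposure complicates stitching, the following is a minimal sketch of one simple correction, often called gain compensation. It is not Secret Location's actual workflow, which is documented in the appendices. The function name `exposure_gains` and the brightness figures are hypothetical, and the sketch assumes each camera's average brightness for a given frame has already been measured.

```python
def exposure_gains(mean_luma):
    """Return one multiplicative gain per camera for a single frame.

    mean_luma: hypothetical list of average brightness values, one per camera.
    Each gain scales that camera's brightness to the rig-wide average, so
    neighboring images meet at a similar brightness before they are blended.
    """
    target = sum(mean_luma) / len(mean_luma)
    # A camera reporting zero brightness is left unscaled to avoid division by zero.
    return [target / m if m > 0 else 1.0 for m in mean_luma]


# Illustrative values only: three cameras that metered the same sky differently.
gains = exposure_gains([100.0, 50.0, 150.0])
```

When each camera's auto-exposure drifts independently, these gains change from frame to frame and from camera to camera, which hints at why the correction was laborious across 12 video files.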

The director’s experience in Meliandou was better. He described it as a “typical Guinean jungle village scene—kids playing, women cooking, men sitting.”

In the video capture, viewers can see children walking past, locals fidgeting, and smoke drifting upwards.

Sixty miles east, Edge also filmed at a French Red Cross Ebola clinic in Macenta, Guinea. He filmed for around 30 minutes, letting action unfold around the camera. Two figures in hazmat suits exchange black disposal bags; a figure walks a wheelbarrow past, and there are glimpses of patients between tent edges.

Overall, Edge reported a difficult experience:

It was a huge challenge to transport, maintain, and operate the equipment in the field without a technician or at least another pair of hands on the team. There was very little access to electricity in the jungle—so charging 12 cameras and a zoom, et cetera, was not straightforward. It was hot and difficult work. The odd-looking camera engendered suspicion in locals; some assumed it was a malevolent machine that would make villagers sick. I have a feeling exposure may be a real problem. Having looked through the files it seems to me that the sky is exposed differently in different shots, which I think may make stitching problematic. . . . I wouldn’t be that keen to shoot in the jungle again without a specific person whose job it is to manage the gear!

Crucially, Edge’s approach to shooting the 360-degree footage was to place the camera and let any action unfold around him. In his words, “Each scene places us at an important location/moment in the history of the outbreak—places most of us would never normally go to.” He didn’t shoot interviews or high-action scenes in 3D. This produced a challenge later in the project, which is discussed in the findings section of this report.

Digital Production

This grouping covers the work that was mostly completed in Secret Location’s Toronto offices, with editorial reviews from the Frontline team. This phase produced ideas about the structure of the VR experience; it also included stitching together video files from the field recording, producing the interactive motion graphics, and authoring the final VR product. It was a highly iterative process, with many steps repeated as the team agreed on and refined its goals for the final product.

Structuring the Experience

Virtual reality can have a range of structures. It is a flexible, digital, interactive medium, which theoretically means authors can give audiences control over the order in which they view scenes, over content within a scene, or their viewing angle for a scene. However, the possibilities narrow when producers factor in playback equipment’s technical specifications and, most importantly, how to arrange narrative elements so that audiences will have a coherent, engaging experience.

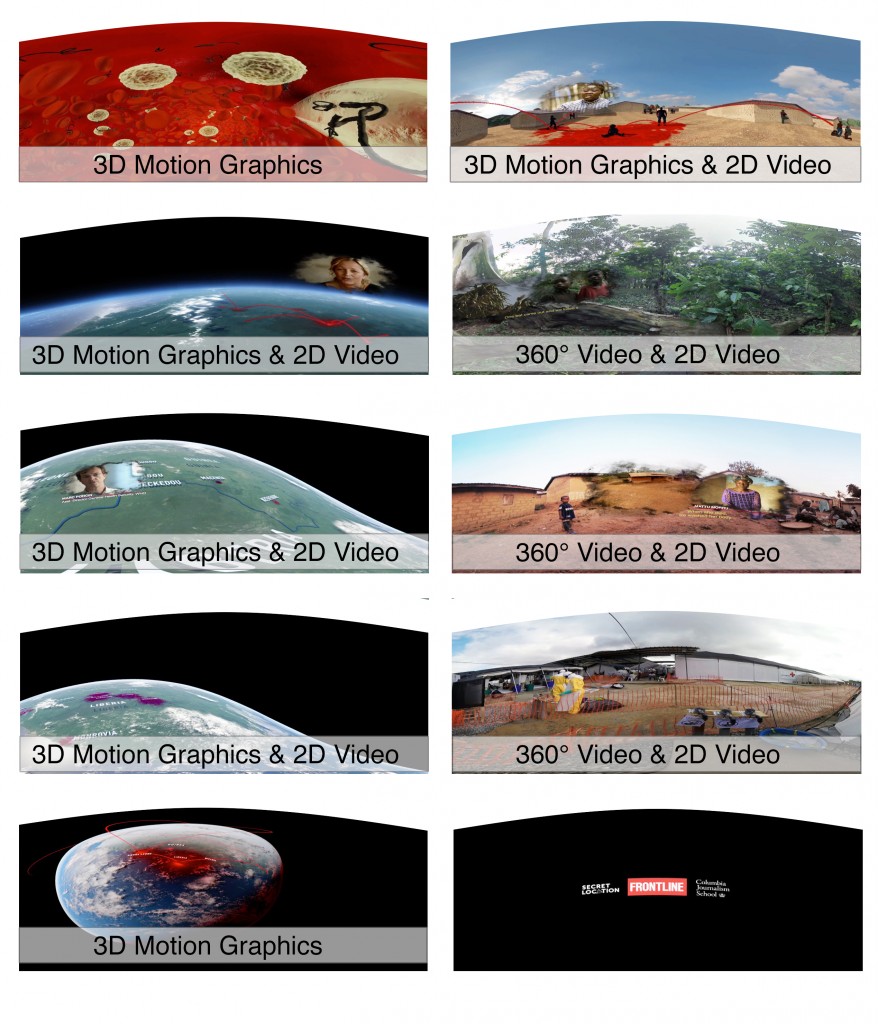

The producers eventually decided to give the Ebola virtual reality documentary a linear structure with ordered “chapters” based on the live-action, 360-degree footage, along with a motion graphics introduction, interstitial scenes, and an outro. The 360-degree, live-action video scenes also contain layered-in 2D video clips of key characters.

Through the conceptualization process, the team considered and discarded other structures, including a hub and spoke model, in which users could choose the order in which they viewed scenes.

The team members decided to use the linear sequence, primarily because they judged that providing a clear and coherent narrative was more important than giving the audience control over the sequence of scenes or a highly interactive experience.

That same emphasis on story informed the decision to layer 2D video into the 360-degree scenes. Without the 2D videos the scenes may have still provided the audience with a strong feeling of presence in locations that were important to the Ebola outbreak, but they would have had no focal point, been far less engaging, and related less information. This choice, its implications, and factors are explored in more detail in the findings section of this report.

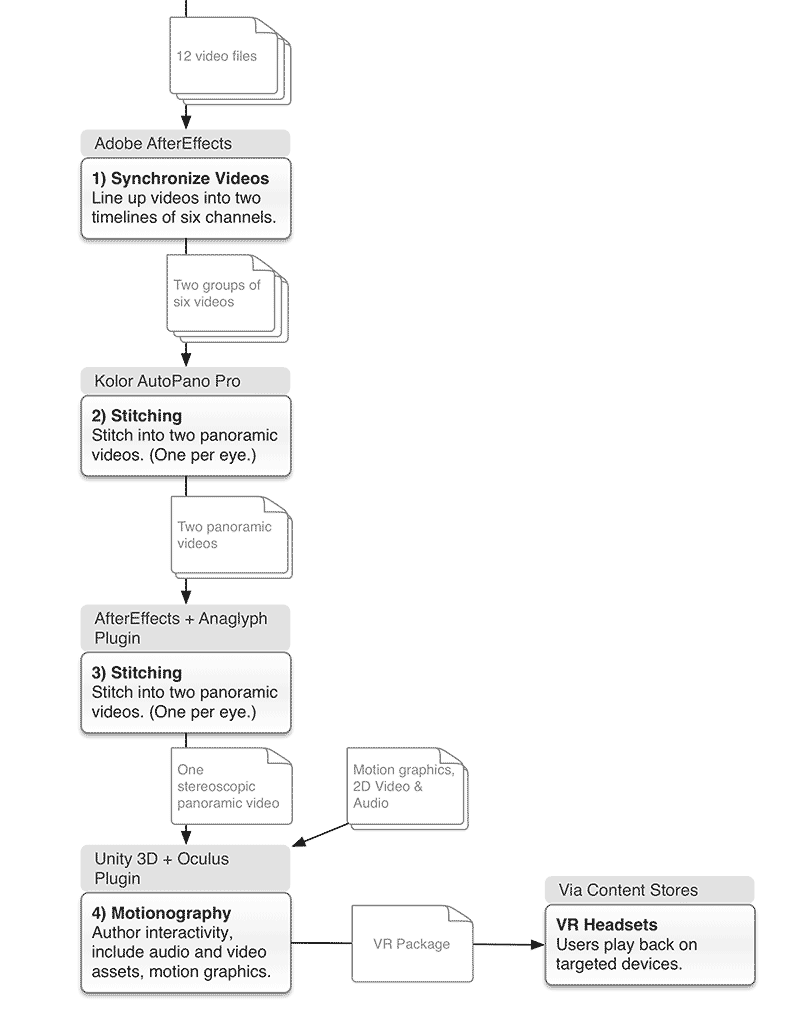

Stitching the 360-Degree Video

Stitching video is a time-consuming, highly technical, awkward task. In this case it involved taking the 12 individual video files and combining them into two spherical videos, one for each eye. Many producers hope the process will become far less onerous, and maybe even disappear as 360-degree, stereoscopic camera technology improves. Secret Location imported the stitched video files into a VR authoring tool (in this case, the Unity software suite because of its ability to integrate video) and combined them with motion graphics, 2D-video, and sound assets. We provide further detail about the process and equipment in an appendix.
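The report does not state how the two spherical videos were stored. One common layout for spherical video is the equirectangular projection, in which horizontal position in the frame corresponds to compass direction and vertical position to elevation. The sketch below assumes that layout, and the function name `direction_to_pixel` and the frame size are hypothetical. It shows how a player could look up the pixel for a given viewing direction in one eye's frame.

```python
def direction_to_pixel(yaw_deg, pitch_deg, width, height):
    """Map a viewing direction to (column, row) in an equirectangular frame.

    yaw_deg:   horizontal angle in degrees; 0 is the frame center, positive is right.
    pitch_deg: vertical angle in degrees; 0 is the horizon, +90 is straight up.
    width, height: frame size in pixels (assumed values, not from the report).
    """
    # Wrap yaw into [-180, 180) and clamp pitch to the valid range.
    yaw = (yaw_deg + 180.0) % 360.0 - 180.0
    pitch = max(-90.0, min(90.0, pitch_deg))
    # Yaw spans the full frame width; pitch spans the full height, top = up.
    col = (yaw + 180.0) / 360.0 * width
    row = (90.0 - pitch) / 180.0 * height
    # Keep the result inside the frame at the bottom and right edges.
    return min(int(col), width - 1), min(int(row), height - 1)


# Looking straight ahead lands at the center of a 4096 x 2048 frame.
center = direction_to_pixel(0.0, 0.0, 4096, 2048)
```

In a stereoscopic piece the same lookup would run twice per direction, once against each eye's video.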

Motion Graphics Production

In this project, motion graphics serve crucial narrative functions. They set the tone, frame the story, and convey the virus’s spread from the microscopic to an epidemic across West Africa. They place the immersive video chapters into necessary context. As such, the Secret Location creative and art directors produced multiple drafts for editorial review by Frontline’s journalists before their final renders. The team’s unusual willingness to share “edits” and respond to notes was vital to producing a quality product.

Computer-generated graphics also helped to capitalize on VR’s ability to put the user into a range of spaces at a range of scales—from a microscopic view alongside infected cells to an ultra-high angle viewing an entire virtual hemisphere.

The first scene in the experience is a motion graphics prologue, showing the virus inside a blood vessel, then, foreshadowing the later transition scenes, a photo-real, 3D model of the Guinean village. As the virtual camera zooms out, red arcs over a globe indicate the virus’s infection path toward regional outbreaks, then Africa-wide ones. Edge sent the blood vessel sequences to a biologist who checked the accuracy of the virus and blood cell depictions. The placement and timing of the red paths were drawn from a map that Edge’s producer researched for the traditional Frontline documentary.

The subsequent motion graphics interstitials continue the approach of using red lines bleeding across an ever-widening view of the region and the world.

After Secret Location produced early sketches and drafted VR experiences, Edge spent five days in the Toronto office working alongside the motion graphics artists and art directors to polish the scenes for accuracy and narrative.

3D/2D Video Editing and Authoring

The live-action video scenes combine 2D video clips layered inside a 360-degree, stereoscopic video environment. These clips serve two main functions. First, they provide an unambiguous subject for the viewer’s attention, thereby avoiding confusion and disorientation. Second, and perhaps more importantly, they impart much of the narrative by describing events, characters, and emotions.

The Secret Location team started by inserting placeholder clips, extracted from the traditional Frontline television documentary, to illustrate the technique and desired structure. Edge and Aronson-Rath gave paper edits, and drew on more of the footage that Edge’s field team had collected. Edge then worked with the Secret Location team to further refine the exact clips and their placement within the 360-degree, live-action scenes and motion graphics interstitials.

Dissemination

At the time of writing, the process of disseminating VR content to users is relatively complex: technically, strategically, and from the user-experience perspective. And the decision-making factors interrelate. There are multiple types of playback equipment and their different capabilities affect how the content works.

The vast majority of VR content is distributed through curated content stores, operated by the companies who also build the hardware, including Oculus (Facebook-owned), Samsung, and Google. The relationship between the companies only further complicates this landscape. Samsung had already incorporated Oculus’s technology, and during the life of our project the two moved into closer marketing associations for both hardware and content.

Our project teams’ professional relationships with the content partnerships staff of hardware companies produced marketing opportunities, such as the production of branded Google Cardboard headsets, and publicity through the hardware platforms’ channels to audiences. These potential benefits became factors when deciding which playback equipment to target.

The Playback Hardware

It’s useful to think of two categories of consumer-grade playback hardware: wired, high-fidelity and mobile. The high-fidelity class is primarily exemplified by the Oculus company’s Rift and Crescent Bay headsets. They are capable of very high video quality and a lot of interactivity, but must be tethered to a powerful external computer.

The mobile class contains more devices, but they share common design fundamentals. A smartphone provides the screen, computation, and most of the necessary motion sensing. It clips into a light-excluding headset with lenses between the user’s eyes and the smartphone. However, the mobile devices have far less computing power than the high-fidelity class, which forces VR producers to compress video and restrict some interactivity. Smartphone variability introduces further complexity; old versions of operating systems and lower-powered processors mean VR experiences may crash or not play at all. The most prominent companies in this group are Samsung (with its Gear VR product) and Google (with Cardboard).

So, the key tradeoff when considering hardware is between providing a very high-fidelity and stable experience on expensive, unwieldy equipment and a more variable, slightly lower-quality experience on cheaper, less cumbersome equipment.

Platform Reach, Content Stores, and Marketing

The producers considered the apparent marketing and promotion opportunities associated with the various companies’ content stores.

Google, marketing its Cardboard headset, provides partners with an option to produce branded headsets, designed to be given out at public events and sent to a mailing list. Google had been using its Play store to distribute VR content, though it subsequently rolled out VR support to its YouTube app for Android phones and announced intent to launch on iOS.

Oculus, owned by Facebook, provides its high-end devices as development kits and had announced a release date of spring of 2016 for its consumer product. The Rift and Crescent Bay product managers appeared to be primarily targeting the gaming market, emphasizing computer-generated graphics over immersive video. However, the Oculus brand was also being promoted within the content store used by the Samsung Gear VR device. That store included games and narrative experiences from a number of publishers.

We ultimately chose to develop the project for both Google Cardboard and Samsung Gear VR. This required significant development time, but it allowed for the documentary to reach a far greater audience via Cardboard, while also retaining higher resolution for the Gear VR.

Case Study Findings

Narrative Form

Virtual reality represents a new narrative form, one for which technical and stylistic norms are in their infancy.

The authors of this report have concluded that some fundamental components of narrative remain massively important to documentary storytelling, whether in traditional media or VR. These are primarily characters, actions, emotions, locations, and causality. They need to be present and understood by the audience for the work to produce a satisfying and compelling experience. Likewise, audience members benefit from being guided through the experience—directing their attention and exposing narrative elements at the right time to keep viewers engaged, without their getting bored by having too little to absorb or confused by too many story elements introduced with no organization. Few journalists would be unfamiliar with these ideas. However, VR presents new changes and challenges around delivering narrative elements.

Our research indicates that producers must exert directorial control in order to deliver those “satisfying and compelling” narrative elements mentioned above. While simply placing the camera in an interesting location can make the user feel present, this does not on its own produce an effective journalistic experience. However, many basic techniques available to traditional documentary directors present problems when working in VR. For example, directors cannot quickly cut between angles or scenes (a primary technique for shaping the audience experience in traditional video) without severely disorienting users. There is practically no 360-degree archive footage directors can draw upon. The camera cannot be panned or zoomed, and if a videographer wants to light a scene for narrative effect, the lighting equipment is difficult to hide.

So, we see two broad strategies for directors to exert control over how the narrative is incorporated into the experience. (These are not mutually exclusive.) The first is for the team in the field to direct the action around the camera. The second is to supplement the raw, immersive video by layering other content into a scene and/or including extra CG scenes before and/or after the live action scenes. In this project we called the CG scenes “interstitials” and they were crucial narrative and framing devices.

Regarding the first strategy of directing action in the field, traditional documentaries commonly feature “set pieces”—often interview formats, but sometimes coordinated action or even staged reenactments. Some documentary makers are uncomfortable with the staged action or reenactments for stylistic or ethical reasons. These preferences may need to be reexamined in the context of VR, where there are fewer available techniques for shaping narrative.

The Frontline Ebola documentary uses a lot of the second technique, supplementation. Scenes made entirely from computer-generated graphics introduce the story; set up the context of each immersive, live video scene; and close out the experience. The producers also layered 2D video clips into the 360-degree scenes. These 2D inlays can be valuable for adding background content and focusing a user’s attention on a specific element of the story. However, because the producers supplemented the direct recording of the scene, some viewers may feel less immersed, as is predicted by the framework of immersive factors outlined in the theory section of this report (specifically, that model would say the producers’ decision to adulterate the raw footage reduced the experience’s fidelity). It would be valuable to conduct controlled audience research on this. The producers would have preferred that more 360-degree footage be available, so they could have used less supplementation.

Representation and Positionality

The VR medium challenges core journalistic questions evolving from the fourth wall debate, such as “who is the journalist?” and “what does the journalist represent?”

There are extensive journalistic debates about the positionality of journalists and their audience, and about what is being represented in the field of view of a journalistic work. Live-motion, 360/3D virtual reality environments complicate both.

Who, for example, is the user in a virtual reality environment? The journalist, in creating the point of view of the user, is making decisions about who the journalist will be, and who the user is supposed to be. Are users invisible bystanders in the scene? Do they inhabit the journalist’s position and role? Is the journalist (and her or his team) in the field of view? Is the journalist the guide to the experience? All of these editorial decisions will have significant impact on the resulting experience. Additionally, is the experience intended to be real, or surreal? Is the editorial goal to replicate reality, or to allow the user to do something that is not humanly possible (to fly, to look down on a conversation from above, to control the pace and evolution of a story)?

For this project, our team provided two perspectives. When users are situated in a live-motion, VR environment, they see the experience from a human, first-person perspective. They are in a place as a scene unfolds around them. For the transitions between the three live-motion scenes, however, we used a surreal CGI perspective that allows users to watch the spread of disease over a map, giving viewers a sense of the scale and pace of Ebola’s spread.

An additional editorial and production decision concerns how the construct of a scene is represented to a user. There is a conflict in the logic of virtual reality, namely that the experience is a highly mediated one. Everything in the scene is, almost by necessity, highly prescribed. At the same time, a principal selling point of the technology is that users feel like they are present in a real environment. The very real sense of immersion and users’ ability to control elements of the experience risk masking the editorial construct of journalism’s work. The users are not really there, and what they are seeing and experiencing (as in all works of journalism) is highly prescribed.

Agency

A combination of the limits of technology, narrative structure, and journalistic intent determines the degree of agency given to users in a VR experience.

Contemporary head-mounted VR can support user agency in terms of where a viewer chooses to look, and the content and interactions he or she triggers.

While the production examined in this case study granted users control over the direction in which they looked, scenes were designed to give a “best” experience when viewers were looking toward an anticipated “front.” The subjects of each scene, whether video clips or computer-generated objects, were in a single field of view.

However, the producers enforced a fairly linear content structure. Viewers experience a first scene, which delivers some information; the next scene delivers information that builds upon the previous scene, and so on throughout the experience. This was a conscious, deliberate decision on the part of the producers, primarily because they felt it served the topic and suited the available content. (An extra factor was that the reduced interactivity broadens the number and type of devices capable of playing the experience.) However, that will not necessarily be true of all VR journalism.

The producers’ belief that narrative elements were important to the success of the experience, and that authorial control was the best way to deliver those elements—especially in such a nascent and complex medium as VR—underpinned both of these decisions.

Challenging Tech Requirements

The technology requirements for producing live-motion virtual reality journalism are burdensome, non-synergistic, rapidly evolving, and expensive.

First, the capture technology is burdensome. This is primarily because the necessary cameras are either DIY, prototype, or very high-end and proprietary. This project used a DIY camera setup, which was challenging to operate and to train our filmmaker to use. Having 12 separate cameras as part of the rig also introduced extra risks around equipment malfunction, storage, and battery life, particularly when shooting in challenging settings.

Second, the cameras, editing software, and viewing devices necessary to produce a live-motion virtual reality product are non-synergistic. Virtual reality components are produced by a range of different companies, and often include experimental and DIY hacks. This means there is no common workflow or suite of products that integrate well, and the production demands a broad range of specialist, technological experts.

Third, the many technologies for creating and distributing live-motion virtual reality are rapidly evolving. During the course of this one project, we learned of new production cameras being developed and many new headsets—ranging from the sub-$10 Google Cardboard to the high-end Oculus consumer release. Google is developing a full-process, integrated suite including a new camera, auto-stitching program, and YouTube VR player. While this is exciting, the shifting landscape makes editorial and production planning very difficult. Technology and process decisions made early on can have a tangible effect on audience reach and relevance months down the line.

Finally, nearly every stage of the VR process is currently very expensive. This is particularly the case when working with CGI, which this project did. The high cost of the camera came from its hardware components, as well as compensation for the development team that designed, built, and refined the kit. Post-production requires a range of technical expertise for the stitching process, programming CGI components, and navigation. This particular expertise is in high demand, so hourly rates are commensurately elevated.

The technology and steps used in the Ebola documentary project (detailed in the appendices) will be useful as a starting point for other teams who want to produce documentary VR, especially those incorporating immersive, 360-degree, live-action video. However, any project starting now would likely benefit from more recently released equipment and newly developed techniques. That being said, some high-level points seem worth reviewing. It is helpful to divide this process into three stages: capture, digital and post-production, and dissemination.

Stage 1: Capture

First, the video capture. Using a camera rig of 12 GoPros introduced huge labor and logistical challenges in the field and in post-production. We were only able to produce a high-quality, immersive video under quite narrow circumstances.

Director Dan Edge reported difficulties in the field: The GoPros aren’t designed to work in synchronization, nor is it possible to coordinate exposure and color. The cameras require individual charging cables and it’s laborious to turn them on and off.

The size, appearance, and unwieldiness of the camera are in marked contrast to other modern recording devices, which can be flexible and unobtrusive. This reduces the types of locations where footage can be easily collected (e.g., some conflict settings or in places where discretion is paramount, like hospitals). These restrictions pose a significant challenge for documentary filmmaking, where access and actuality are key.

There is some hope on this front, however. Participants in the VR marketplace are developing more integrated and elegant cameras. Some keep the strategy of using many lenses in left-and-right pairs of “eyes.” (Paired lenses are necessary for stereoscopic footage, which can theoretically produce greater fidelity, and therefore immersion and presence.) Others are aiming for high-quality, 360-degree video, but with one lens per direction, abandoning stereoscopy in favor of simplicity, lower cost, and weight. It remains to be seen if either strategy will become the norm, or if both will remain viable—depending on the videographer’s context.

However, when this production started the team reluctantly judged that a custom-built, 12-camera rig was our only viable strategy. One alternative was to work with a camera-making company that could also perform the stitching and authoring, but excluded outsiders from that process. The production team, which viewed authoring as a particularly important journalistic process, could not envision removing itself from that step. This view has only gotten stronger. A second alternative, which has become more viable in the rapidly developing industry, would be to use a commercially released camera that produces 360-degree video, but not stereoscopic video. The question of whether stereoscopy is crucial to the effectiveness of the experience remains unanswered; however, the authors have spoken to teams planning current productions that have avoided it because of its high production overhead.

Stage 2: Digital and Post-Production

Second is the Digital and Post-Production process. This VR project required significant post-production effort working with newly combined technologies. The workflows and tools are documented in the appendices, and the authors believe that those sections will be particularly useful for future producers.

The camera strategy had implications for the post-production process. Recording HD video with 12 GoPro cameras produces many gigabytes of data. Organizing those files, moving them from the field to the studio, storing them: each of these steps becomes more onerous as the amount of video data increases. The process of stitching the video together, and making the visual quality consistent, is likewise laborious. At the time of production, our team could find no technology capable of doing the job without very large amounts of human effort to supplement the computational pass.
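To give a rough sense of how quickly that data accumulates, the following back-of-the-envelope sketch estimates raw footage size for a multi-camera rig. Only the camera count of 12 comes from this project; the per-camera bitrate (30 Mbit/s) and the take length (10 minutes) are assumed values chosen for illustration, and the function name is hypothetical.

```python
def footage_size_gb(cameras: int, minutes: float, mbit_per_s: float) -> float:
    """Estimate raw footage size in gigabytes (using 1 GB = 1000 MB)."""
    seconds = minutes * 60
    # Total megabits recorded across every camera in the rig.
    megabits = cameras * seconds * mbit_per_s
    # 8 bits per byte, then megabytes to gigabytes.
    megabytes = megabits / 8
    return megabytes / 1000


# 12 cameras is the rig described in this report; the 30 Mbit/s bitrate
# and the 10-minute take are assumptions for illustration only.
size = footage_size_gb(cameras=12, minutes=10, mbit_per_s=30)
print(f"{size:.1f} GB")  # prints 27.0 GB
```

Under these assumptions a single 10-minute take already runs to tens of gigabytes before any stitching or intermediate files are created, which is consistent with the storage and transfer burden described above.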

Working with 12 video frames for each stereoscopic, 360-degree scene required vast, specialized, human effort. This involved cost and time implications which logically challenge journalistic applications, reliant as they are on timely release to audiences.

Stage 3: Dissemination

Third is product Dissemination. The VR user base is currently relatively small and divided across a number of platforms. As detailed earlier, each platform has a unique set of strengths and weaknesses, such as the accessibility of the Google Cardboard versus the processing power of the Oculus Rift DK2.

The producers weighed the strengths of each platform and chose to release the VR experience for the Samsung Gear VR and Google Cardboard devices. While these devices lack the raw processing power of Oculus’s DK2 and advanced features like positional tracking (a camera that tracks the user’s head position in 3D space and reflects that positioning in VR), the Gear VR and Google Cardboard offer the greatest ease of use and accessibility to the growing VR audience.

The Gear VR and Google Cardboard head-mounted displays utilize individuals’ smartphones for VR playback rather than a high-end PC like the DK2. This creates a much larger potential audience than the DK2’s. They also require less setup and configuration, and aren’t tethered to a computer, making their use less arduous and intimidating to the average viewer. Knowing that the audience for our project would skew more toward the mainstream than the video-gamer-heavy demographics of the DK2, the producers felt it was of great importance that the VR equipment itself could get into as many hands as possible and be accessible to a relatively less tech-savvy audience.

The distribution networks for the Gear VR and Google Cardboard are more mature than that of the DK2. Google Cardboard apps are distributed through the existing iOS and Android app stores, and the recently launched Gear VR app store follows a standardized installation process and hardware requirements, unlike the Oculus Share store for DK2.

Finally, Gear VR and Google Cardboard enjoy one hardware advantage over the DK2: the higher screen resolution of newer smartphones. Because the producers decided to focus on a more linear, narrative-driven experience, rather than high levels of interactivity and user input that require more processing power, the higher-resolution screen was a greater benefit than features that the DK2 could provide.

Tech Tradeoffs

At almost every stage of the process, virtual reality journalism is presented with tradeoffs that sit on a spectrum of time, cost, and quality.

Given the technological challenges outlined above, we believe it is most useful to view the production choices for live-motion virtual reality as a series of tradeoffs.

First is a choice between the long production time needed to produce full-feature VR and lower-quality capture and production that meets the immediacy of many journalistic needs. Soon, we will likely be able to add live VR to this spectrum, but for the near future the feature set and quality will be worse than even current short-turnaround production capability.

Second, the high cost and expertise requirements of adding interactives, CGI, high video quality, and headsets limit the range of organizations and individuals that can produce it. Ultimately, there is a very limited production capacity in newsrooms for high-end VR.

Third, high production value also diminishes the reach of the product, as it is only playable on high-end headsets. Even if distributed on a large commercial scale, it will still be very expensive.

In short, given the current technology constraints, a piece of VR journalism can be high-quality, long-turnaround, require a production team with extensive expertise, and have a limited audience. Or, it can be of lower production quality, quicker turnaround, and reach a wider audience.

| | Approach A | Approach B |
|---|---|---|
| Production quality | High | Lower |
| Turnaround time | Long | Shorter |
| Audience | Limited | Wider |

Wide Range of Skills Needed

The production processes and tools are mostly immature, not yet well integrated, and not yet in common use; the whole process from capture through to viewing requires a wide range of specialist, professional skills.