More than a decade after the US invasion of Iraq, the country’s violent death rates are still frighteningly high; more than 300 Iraqis were killed last week, according to London-based NGO Iraq Body Count. The causes range from ubiquitous IED explosions in Baghdad to mass executions in Mosul, ISIS’s de facto Iraqi capital.

But this number likely doesn’t tell the full story.

Anywhere from 135,000 Iraqi civilians to more than a million have perished since 2003, depending on the source you consult. In a study published in March, Physicians for Social Responsibility (PSR)—a group of doctors whose advocacy is informed by their medical training—argues for the higher end of this range. It also takes the mainstream press to task for failing to report higher casualty figures.

Correctly assessing the death toll is critical to recognizing the war’s legacy, as well as the sacrifice imposed on the population of a country that most everyone now agrees was invaded on flawed premises. A sanitized casualty count could also stunt the debate shaping public opinion and foreign policy in future conflicts.

“Unfortunately, the media often portray passively collected figures as the most realistic aggregate number of war casualties,” the PSR report notes. So-called “passively collected figures” come from tabulating external reports or other existing material—like news articles, local television reports, and statistics from morgues—as opposed to original, newly acquired data. Where civilian deaths in Iraq are concerned, passive collection is the method used by a source frequently cited in mainstream press accounts: the aforementioned Iraq Body Count “has basically established itself as the ‘standard,’” according to the PSR report.

Iraq Body Count (or IBC) was cited more than 1,100 times in newspapers, according to a LexisNexis search covering March 2003 to the present. The organization-cum-database is administered by a private company based in the United Kingdom. Investigative journalist Nafeez Ahmed has accused IBC of downplaying the war’s toll on Iraq’s people, noting that its two founders also co-directed Every Casualty, a program funded by the United States Institute of Peace, which Ahmed calls a “neocon front agency.”

Hamit Dardagan, one of IBC’s founders, insists the funding doesn’t compromise the group’s integrity. “IBC is a project that’s been around long before the USIP thing and has been recording casualties in exactly the same way, has been saying exactly the same thing,” he told CJR.

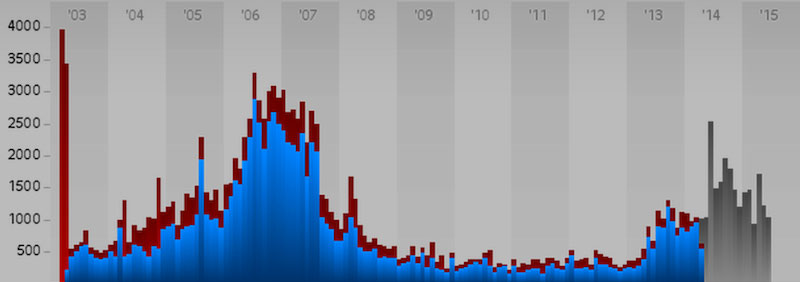

IBC data on total casualties (in red) alongside the subset of those killed by “unknown actors” (in blue) (Credit: IBT).

IBC’s website doesn’t feel like it’s trying to make the costs of war more palatable. The vertical bars are blood red, the whole graphic overshadowed by a stealth bomber dropping a payload of bombs—hardly the type of imagery one would use to minimize the collateral damage dealt by coalition forces.

But by virtue of being the go-to source for news desks looking to cite the latest numbers, IBC took up a disproportionate share of the conversation on the war as it unfolded. Colin Kahl, now National Security Advisor to Vice President Joseph Biden, used IBC data in 2007 to argue that the Iraq war was a relatively mild one compared to America’s past campaigns, at least in terms of civilian casualties. Though Kahl criticized the war, he largely dismissed alternatives to IBC as posing “implausible implications given other available data.”

IBC’s numbers do skew low, according to some scientists and the organization itself, which acknowledged in 2004 that “our own total is certain to be an underestimate of the true position.” “Probably we’re recording around half or more of civilian deaths” in Iraq, Dardagan told CJR. Part of what’s flawed about IBC’s tally is its limited scope. The organization aggregates death reports from dozens of sources on a daily basis, according to Dardagan, and has consulted more than 200 since the beginning of the war. John Tirman, a political theorist at MIT, says IBC uses “mainly news media accounts, in other words, people who were named in a newspaper or television broadcast,” though even casualties that are simply listed, as opposed to being named, make it into IBC’s records, as do deaths reported in morgues. There are still many problems with this method, Tirman says, especially where dependence on media reports is concerned. Iraqi and western reporting is heavily centered on Baghdad, and often focuses on highly visible events like car bombings.*

“IBC is very good at covering the bombs that go off in markets,” said Patrick Ball, an analyst at the Human Rights Data Analysis Group who says his whole career is to study “people being killed.” But quiet assassinations and military skirmishes away from the capital often receive little or no media attention.

Even casualties reported internally by the US military didn’t always find their way into IBC’s tally. A few years after WikiLeaks published the Iraq War Logs in 2010—which IBC’s founders welcomed as information that should have been public all along—a study co-written by Columbia University epidemiologist Les Roberts estimated that just under half of the casualties there were also reported by IBC. The organization’s own sampling of the data, published a day after the War Logs were released, put the overlap at more than 80%.*

Either way, IBC is concerned with counting civilians killed by bullets, missiles and bombs, as opposed to those who died because of war’s devastating effects on the country’s infrastructure. “I think it’s very important for readers to understand that the implications of our war policies include not just people shot to death but also, for example, if we destroy a hospital or totally damage the infrastructure of a country, that there might be casualties from that as well,” says David Johnson, editor of Al Jazeera America’s opinion section. The Web site ran a piece on PSR’s study, which the author described as having “barely elicited a whisper in the media.”

Several studies have sought to estimate the hidden impact of the war by comparing death rates measured before and after the conflict. The idea is that the gap between the deaths observed since the war began and the deaths that would have been expected at the prewar death rate can be attributed to the war’s effects and labeled “excess deaths.” In a 2004 New York Times op-ed, for instance, Nicholas Kristof wrote that insecurity made women “more likely to give birth at home, so babies and mothers are both more likely to die of ‘natural’ causes.” But excess death estimates also aim to account for violent deaths that go otherwise unreported, not just cases of ambulances failing to reach the hospital in time because of bomb-cratered streets.
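The arithmetic behind an excess-death figure can be sketched in a few lines. This is only an illustration of the general idea, not the method of any study cited here; the function name and every input value are hypothetical, chosen to make the calculation easy to follow.

```python
def excess_deaths(prewar_rate, wartime_rate, population, years):
    """Deaths above what the prewar death rate would predict.

    Both rates are deaths per 1,000 people per year.
    """
    expected = prewar_rate / 1000 * population * years   # deaths had the prewar rate held
    observed = wartime_rate / 1000 * population * years  # deaths at the measured wartime rate
    return observed - expected


# Hypothetical inputs, not figures from any survey.
estimate = excess_deaths(prewar_rate=5.0, wartime_rate=8.0,
                         population=1_000_000, years=2)
print(round(estimate))
```

Because the result depends on both rates, an error in the prewar baseline shifts the estimate just as much as an error in the wartime measurement.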

Conversely, “what IBC did is a roster,” says demographer Beth Daponte, in reference to IBC’s prerogative of counting only confirmed deaths. Daponte’s past work estimating casualties in the First Gulf War was enough to get her threatened with dismissal by the Department of Commerce, where she worked in 1992. “I think looking at excess deaths from conflict is an important thing to do. Certainly just having a roster is incomplete, and having a more nuanced understanding is important.”

Two “excess death” studies published in British medical journal The Lancet in 2004 and 2006 aimed to capture that nuance. The timing of the studies, which were published shortly before general and midterm elections in the US, respectively, was seen by some as a political move to lure voters away from the political establishment that had initiated and defended the war. In 2007, the BBC found that the British government had internally described the second Lancet study as “robust” and its methods “close to best practice.” Meanwhile, the Blair administration downplayed the findings in public: “There is considerable debate amongst the scientific community over the accuracy of the figures,” the government said in a statement.

IBC and Lancet partisans don’t agree on much, but both want to shift the focus to scientific methodology. For the Lancet studies, epidemiologists at Johns Hopkins used cluster sampling to get a random selection of neighborhoods across the country. They then went knocking on doors and asking questions to determine death rates. The 2006 study estimated that the war had caused roughly 654,965 excess deaths.
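A cluster survey of this kind can be sketched as follows. The sketch assumes simple random selection of clusters and pools the household answers into one crude death rate; the Lancet teams’ actual design is not described in detail here and may well have differed. The survey frame, cluster names, and numbers are all hypothetical.

```python
import random

# Hypothetical survey frame: cluster name -> households, each reporting
# deaths and person-years lived during the recall period.
SURVEY_FRAME = {
    "cluster_a": [{"deaths": 1, "person_years": 20.0}, {"deaths": 0, "person_years": 30.0}],
    "cluster_b": [{"deaths": 0, "person_years": 25.0}, {"deaths": 0, "person_years": 25.0}],
    "cluster_c": [{"deaths": 2, "person_years": 40.0}, {"deaths": 0, "person_years": 10.0}],
    "cluster_d": [{"deaths": 0, "person_years": 35.0}, {"deaths": 1, "person_years": 15.0}],
}


def pick_clusters(frame, k, seed=0):
    """Randomly choose k clusters to visit (seeded so the run is repeatable)."""
    rng = random.Random(seed)
    return rng.sample(sorted(frame), k)


def crude_death_rate(frame, clusters):
    """Deaths per 1,000 person-years, pooled over the chosen clusters."""
    households = [h for c in clusters for h in frame[c]]
    deaths = sum(h["deaths"] for h in households)
    person_years = sum(h["person_years"] for h in households)
    return 1000 * deaths / person_years


chosen = pick_clusters(SURVEY_FRAME, k=3)
rate = crude_death_rate(SURVEY_FRAME, chosen)
print(chosen, rate)
```

The rate changes depending on which clusters happen to be drawn, which is why such surveys report a range around their central estimate.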

“Newspapers paid attention but also offered plenty of dissenting voices to counterbalance the surprisingly high number,” Gal Beckerman observed in CJR when the study came out. Beckerman defended cluster sampling as “how most surveys are conducted. It’s how exit polls are run. It’s also the method by which we’ve come to the figure 400,000 for the number of people killed in Darfur, a measurement that has allowed the media to call what is happening there a genocide.”

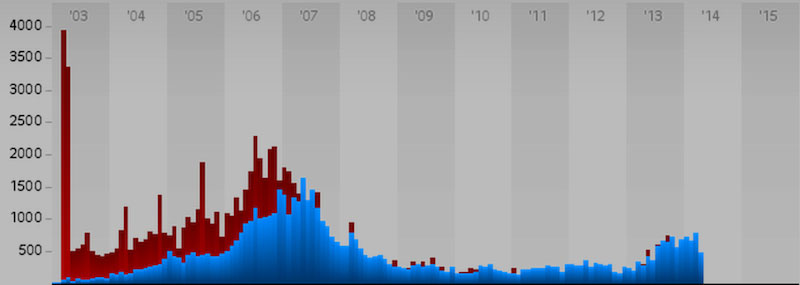

IBC data on casualties from incidents in which fewer than 5 individuals were killed (in blue) versus 5 or more (in red). (Credit: IBT)

A study published in PLOS Medicine in 2013, applying the same surveying methods used in the Lancet studies on a broader scale, estimated 461,000 excess deaths throughout the war from 2003 to 2011. More than 60 percent were found to be due to violence, more than a third of which came at the hands of coalition forces.

Amy Hagopian, an associate professor at the University of Washington School of Public Health and the PLOS study’s lead author, believes the gulf between the Lancet figure and her own (despite the bloody years between 2006 and 2011) comes down to the fact that many Iraqis had fled. “Having given this considerable thought, I am persuaded we were simply unable to account for the massive migration out of Iraq,” she wrote in an email to CJR. Many of the civilians who would have reported bleak numbers about deceased relatives had simply left the country; in an attempt to counter the effects of emigration, the PLOS study added 55,805 deaths to its estimate.

Like other experts, Hagopian believes that even solid sampling methodology fails to accurately count the dead. “I conclude our methods for counting mortality in war are just remarkably inadequate to the task,” she wrote.

Hagopian’s study was largely ignored by mainstream news media, she said. “Amazingly, The New York Times didn’t cover it at all, even though we actually cited one of their journalists and his response to one of our observations in our paper,” she said. “Not even the Seattle Times covered it, even as sort of a ‘local University of Washington faculty interest’ sort of a story.”

One reason Iraq Body Count is so frequently cited in the press could be its continuous updates on recent deaths and occasional articles about the trends behind the numbers (“The first half of 2015 has actually seen a greater number of civilian deaths compared to the second half of 201,” a post on the site reported yesterday). Massive sampling studies, on the other hand, put in months of work before publishing one-time reports. They’re discussed, they’re argued over, and they disappear.

But getting to the right numbers has always held appeal for journalists covering the war, according to the Los Angeles Times’ Monte Morin, who wrote about Hagopian’s 2013 study and reported from Iraq early in the war. Before the first Lancet study, finding the right number “was like the biggest assignment I had had in my career up until then, and it was something that they were really pushing us on, the top editors. And I know that other reporters were talking about it as well,” Morin told CJR.

“At the time I thought it was going to be just a matter of expending shoe leather and counting bodies,” he said. Switching to covering science in the summer of 2013 helped him better understand the strengths of Hagopian’s methods.

Some scientists think that’s what’s really needed: for journalists to simply learn more about statistics in order to better weigh the validity of new studies as they report on them. When asked about journalistic responsibility, Daponte explains that the press must “take each study and really look at what it does say and what it doesn’t say. This is where journalists really do need to have at least a rudimentary understanding of statistics and confidence intervals, and what sampling really means.” A confidence interval is the range within which researchers expect the true value (in this case, the death toll) to fall, stated with a given degree of certainty, such as 95 percent.
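One way to see where a confidence interval comes from is to resample the survey data many times and watch how much the estimate moves. The sketch below uses a percentile bootstrap over per-cluster death rates. That is one common technique, assumed here for illustration rather than taken from any of the studies discussed, and the rates are hypothetical.

```python
import random


def bootstrap_interval(cluster_rates, level=0.95, draws=2000, seed=1):
    """Percentile bootstrap interval for the mean of per-cluster rates."""
    rng = random.Random(seed)
    n = len(cluster_rates)
    # Re-draw n clusters with replacement, many times, recording each mean.
    means = sorted(
        sum(rng.choices(cluster_rates, k=n)) / n for _ in range(draws)
    )
    lo_idx = int((1 - level) / 2 * draws)
    hi_idx = int((1 + level) / 2 * draws) - 1
    return means[lo_idx], means[hi_idx]


# Hypothetical deaths per 1,000 person-years in eight surveyed clusters.
rates = [4.1, 7.9, 5.5, 12.3, 6.0, 9.4, 3.8, 8.7]
point_estimate = sum(rates) / len(rates)
low, high = bootstrap_interval(rates)
print(point_estimate, low, high)
```

With only a handful of clusters, the interval is wide. Reporting the point estimate alone hides that uncertainty, and reporting only the low end of the range understates the most likely value.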

Tirman, who commissioned the second Lancet study and led its publicity push, agrees. “I thought that the reporters by and large handled this initial release [in 2006] pretty responsibly. But I think that over the whole period of the war and post-war it’s clear that most journalists who are discussing the level of mortality in Iraq never really took the time to understand the difference between the different methods,” Tirman said. “They just hadn’t done their homework. It’s not that hard to understand. I’m not a statistician.”

Patrick Ball, of the Human Rights Data Analysis Group, is also a harsh critic of journalists who fail to interrogate the conclusions lobbed to them based on data they hardly understand. “Journalists who are like that, they have no business on these beats,” Ball said. “They should be fired.”

*The starred paragraphs have been corrected. In the first case, an earlier version of the story failed to accurately reflect the number and kind of sources IBC consults. The number is considerably higher, and the sourcing more varied, than the original article reflected. The second starred paragraph has been updated to reflect that nearly half, as opposed to a quarter, of civilian casualties revealed in the Iraq War Logs had also been captured by IBC, according to a study co-written by Columbia University epidemiologist Les Roberts. We have also added a link to an IBC analysis indicating that more than 80% of civilian casualties in the Iraq War Logs had already been recorded by IBC.

Pierre Bienaimé is a journalist based in New York.