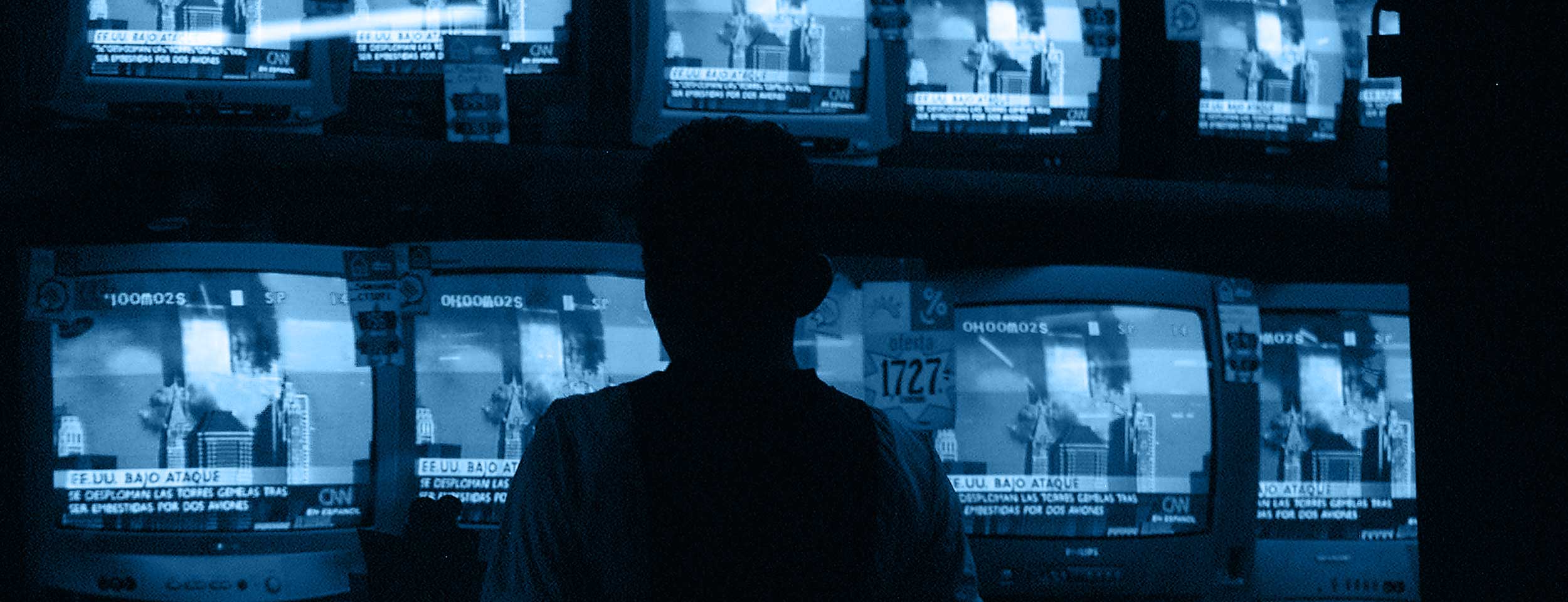

In 2013, two men murdered off-duty British soldier Lee Rigby in Woolwich, London.

As Rigby was being attacked in the street, bystanders were tweeting about it. One onlooker recorded a video of one of the attackers—with blood still on his hands—talking about why he had carried out the killing.

A research project by Britain’s Economic and Social Research Council that examined the Woolwich incident concludes that social media is increasingly where this kind of news breaks, with important implications for first responders. Social media is now a key driver of public understanding, the report finds. That matters for government and security officials, and for the social media platforms themselves, which must consider their role in mediating public reaction if they are to avoid negative outcomes, both further incidents and damage to community relations.

The platforms insist that they are not publishers, let alone journalistic organizations. Their business is built upon providing an easy-access, open channel for the public to communicate. The terms and conditions of use, however, allow them to remove content, including shutting off live video. This is now done according to a set of criteria enforced through a combination of users flagging offensive content, automated systems that identify key words, and decisions by platform employees to remove or block content or to put up a warning.

This sort of post-publication filtering is not the same process a journalist undertakes when selecting material pre-publication. However, it is editing. It involves calculating harm and making judgments about taste. Beyond being news sources, platform companies are increasingly shaping the architecture of how news is delivered online, even by publishers themselves. Journalists have lost control over the dissemination of their work. This is a crucial challenge for the news media overall, but the issue is especially acute when it comes to reporting on terror.

The platforms provide an unprecedented resource for the public to upload, access, and share information and commentary around terror events. This is a huge opportunity for journalists to connect with a wider public, but it also raises key questions: Are social media platforms now becoming journalists and publishers by default, if not by design? How should news organizations respond to the increasing influence of platforms around terror events?

Facebook is becoming dominant in the mediation of information for the public, which raises all sorts of concerns about monetization, influence, and control over how narratives around terrorist incidents are shaped. It is in the public interest for these platforms to give people the best of news coverage at critical periods. But will that happen?

These are pressing policy problems. News is a good way of getting people to come to these platforms, but it is a relatively minor part of their business. How the platforms deal with this in regard to terrorism is an extreme case of a wider problem, but it brings the issues into sharp focus and reminds us of what is at stake.


For mainstream media, the Rigby killing was a test case in how to handle user-generated content in a breaking news situation involving terrorism. “This was very graphic and disturbing content,” said Richard Caseby, managing editor of The Sun. “Would it only serve as propaganda fueling further outrages? These are difficult moral dilemmas played out against tight deadlines, intense competition, and a desire to be respectful to the dead and their loved ones.”

The video first appeared in full on YouTube. News channels such as Sky carried the footage of attacker Michael Adebolajo wielding a machete and ranting at onlookers. ITN obtained exclusive rights to run it on its early evening bulletin, just hours after the incident and before 9 pm, the time known in the UK as the “watershed,” after which broadcasters are permitted to air adult content. Those reports, unlike the YouTube footage circulating on social media, were edited, contextualized, and accompanied by warnings. Even so, the public made more than 700 complaints to the UK’s broadcasting regulator, Ofcom, about the various broadcasts, including on radio. Ofcom cleared the broadcasters and said their use of the material was justified, although it had concerns about whether broadcasters had given viewers sufficient “health warnings” about the shocking nature of the content, and it restated the importance of its guidelines.

For the social media platforms, the incident raised two issues. First, it was through the platforms that the news broke, posing questions about their responsibility for content uploaded to their networks. Second, the incident exposed a gap in how the platforms report users who post inflammatory material to the authorities.

This second issue emerged during the trial of the second attacker, Michael Adebowale. Adebowale had posted plans for violence on Facebook, and the company’s automated monitoring system had closed some of his accounts, but this information was not passed on to the security services. Facebook was accused of irresponsibility. “If companies know that terrorist acts are being plotted, they have a moral responsibility to act,” then-Prime Minister David Cameron said at the time. “I cannot think of any reason why they would not tell the authorities.”

Facebook’s standard response is that it does not comment on individual accounts, but that it does act to remove content that could support terrorism. Like all platforms, it argues that it cannot compromise its users’ privacy.

The three main platforms–Facebook, Twitter, and YouTube–all have broadly similar approaches to dealing with content curation during a terror event. All have codes that make it clear they do not accept content that promotes terrorism, celebrates extremist violence, or promotes hate speech. “We are horrified by the atrocities perpetrated by extremist groups,” Twitter’s policy statement notes. “We condemn the use of Twitter to promote terrorism and the Twitter Rules make it clear that this type of behavior, or any violent threat, is not permitted on our service.”

Twitter has taken down more than 125,000 accounts since 2015, mainly for suspected links to ISIS. It has expanded its moderation teams and its use of automated technology, such as spam-fighting tools, to improve monitoring. It collaborates with intelligence agencies and has begun a proactive program of outreach to organizations such as the Institute for Strategic Dialogue, a public diplomacy NGO, to support online counter-extremist activities.

These platforms are in a different situation from news organizations. They are open platforms handling a vast amount of content that can only be filtered post-publication, and they are still developing systems to moderate user content in real time. “There is no ‘magic algorithm’ for identifying terrorist content on the internet, so global online platforms are forced to make challenging judgment calls based on very limited information and guidance,” Twitter notes. “In spite of these challenges, we will continue to aggressively enforce our Rules in this area, and engage with authorities and other relevant organizations to find solutions to this critical issue and promote powerful counter-speech narratives.”

Many believe there is a technical fix, but in practice, the volume and diversity of material (40 hours of video are uploaded every minute to YouTube) make it impossible to automate a perfect system to instantly police content. Artificial intelligence and machine learning can augment a system of community alerts. But even if a piece of content is noted, a value judgment must be made about its status and what action to take. Should the material be removed, or a warning added?

This puts the platforms in a bind. YouTube, for instance, wants to hang onto its status as a safe harbor for material that might not be published elsewhere. When video of the results of alleged chemical weapons attacks on rebels in Syria was uploaded, much of it by combatants, YouTube had to make a judgment about its graphic nature and impact and decide how authentic or propagandistic it was. YouTube says it generally makes such determinations on a case-by-case basis. Once this footage was posted, many mainstream news organizations were able to use it in their own reporting.

Live streaming

The problem of balancing audience protection, security considerations, and social responsibility with privacy and free speech becomes even more acute with new tools for live video streaming such as Facebook Live, Twitter’s Periscope, YouTube, and even Snapchat and Instagram. These services let ordinary citizens broadcast live. Many people welcome this power as an opening up of the media. But what happens when a terrorist like Larossi Abballa, having murdered a French policeman and his wife, uses Facebook Live to broadcast himself holding their 3-year-old child hostage, issuing threats, and promoting ISIS? The Rigby killers relied on witnesses to broadcast their statements and behavior after the attack, but Abballa was live and in control of his own feed. That material was reused by news media, albeit edited and contextualized.

There is a case for allowing virtually unfettered access that gives citizens a direct, immediate, and unfiltered voice. Diamond Reynolds live-streamed on Facebook the aftermath of the shooting of her boyfriend, Philando Castile, by a police officer near St. Paul, Minnesota. The video was watched by millions and shared across social media, as well as rebroadcast on news channels and websites. It attracted attention partly because it was the latest in a series of incidents in which black people were subject to alleged police brutality. In this case, Facebook Live made a systemic injustice visible through the platform’s rapid reach. Local police contested Reynolds’s version of events, but the live broadcast and the rapid spread of the video meant that her narrative had a powerful impact on public perception. News organizations contextualized it to varying degrees when they reused it, but the narrative was driven largely by Reynolds and her supporters.

News organizations need to consider how to report these broadcasts and what to do with the material. Research shows that news organizations take varying approaches to this kind of graphic footage, even when it is not related to terrorism. Should they include direct access to live video as part of their coverage, as they might from an affiliate or a video news agency? In principle, most news organizations say they would resist becoming an unfiltered platform for live video broadcasts by anyone, with no editorial control.

As Emily Bell, director of the Tow Center for Digital Journalism at Columbia Journalism School, points out, this reflects a difference between news organizations and digital platforms:

When asking news journalists and executives “if you could develop something which let anyone live stream video onto your platform or website, would you?”, the answer after some thought was nearly always “no.” For many publishers the risk of even leaving unmoderated comments on a website was great enough[;] the idea of the world self-reporting under your brand remains anathema. And the platform companies are beginning to understand why.

Media organizations have to negotiate with the platforms about how to inhabit the same space when these dilemmas arise. Sometimes they have to act unilaterally. For example, CNN has turned off autoplay for video on its own Facebook pages around some terror events, and it routinely posts warnings on potentially disturbing content.

How far should platforms intervene or become more like media organizations?

The platforms are in a difficult place in terms of the competing pressures of corporate self-interest, the demands of their consumers for open access, the public interest involved in supporting good journalism, and the goal of fostering secure and cohesive societies. They are relatively young organizations that have grown quickly and are still accreting institutional knowledge on these issues.

The platforms have accepted that they have a public policy role in combatting terrorism. Facebook now has a head of policy for counterterrorism, and it has gone further than most Western news media organizations in allowing itself to be co-opted into counterterrorism initiatives. Yet any intervention raises questions. For example, Facebook offered free advertising for accounts that post anti-extremist content. But which ones, and how far should it go?

Additionally, the platforms provide an opportunity for building social solidarity in the wake of terror incidents, far beyond the ability of news media. After the Lee Rigby killing, there was widespread reaction on social media—including from Muslims—expressing shock and disgust at the attacks. There were also positive social media initiatives that sought to pay respect to the victim. But some reaction was incendiary and anti-Islamic, and some people faced charges for inciting racial hatred on social media.

British MPs recently criticized the platforms for not doing enough to counter ISIS. However, the platforms say they are already doing much to remove incendiary content. “The vast majority of ISIS recruits that have gone to Syria from Britain and other European countries have been recruited via peer-to-peer interaction, not through the internet alone,” notes radicalization expert Peter Neumann, a professor of security studies at King’s College London. “Blaming Facebook, Google, or Twitter for this phenomenon is quite simplistic, and I’d even say misleading.”


The platforms—and the news media—cannot police these networks alone. Authorities also have a responsibility to monitor and engage on social media, and to actively counter bad information with real-time streams of reliable information. Ultimately, the price of open access and exchange on these platforms might be that users must put up with some negative and harmful material.

However, just because these issues are complex does not mean that platforms cannot adapt their policies and practices. It might be that a virtual switch could be put in place to delay live feeds that contain violence. More “honest broker” entities such as Storyful or verification agency First Draft might emerge to act as specialist filters around terror events. One suggestion has been that platforms like Facebook should hire teams of fact-checkers. Another is that they should hire senior journalists to act as editors.

Of course, those last suggestions would make these self-declared tech companies more like news media. But we now inhabit what Royal Holloway media professor Andrew Chadwick calls a “hybrid media” environment where those distinctions are blurred. News organizations have had to change to adapt to social networks, and platforms, too, must continue to develop the way they behave in the face of breaking news.

Companies such as Facebook and Google are already reaching out to journalists and publishers to find ways of working that combine their strengths. Twitter and Facebook, for example, have joined 20 news media organizations in a coalition organized by First Draft to find new ways to filter out fake news.

By taking the initiative, rather than waiting for angry governments to act and impose solutions that hurt creativity and freedom in the name of security, platforms and the news media both have much to gain in trust and in avoiding enforced regulation or control. One only has to glance at more repressive regimes around the world to see the price democracy pays when reactionary governments restrict media of any kind in the name of public safety.

This article is adapted from a Tow report by the same author. It is part of a series on journalism and terrorism that is the product of a partnership between the Tow Center for Digital Journalism and Democracy Fund Voice.

Charlie Beckett is a professor in the Department of Media and Communications at the London School of Economics and founding director of Polis, the LSE’s international journalism think tank. Before joining the LSE, he was a journalist at the BBC and ITN. He is the author of SuperMedia and of WikiLeaks: News in the Networked Era.