Fake news isn’t new.

Think back to Hurricane Sandy four years ago, when incredible amounts of false content circulated, including claims the NYSE was flooded and sharks appeared on flooded streets in New Jersey. At the time, there was much debate about how to deal with these issues. The New Yorker’s Sasha Frere-Jones called Twitter a “self-cleaning oven,” suggesting that false information could be flagged and self-corrected almost immediately. We no longer had to wait 24 hours for a newspaper to issue a correction.

After the presidential election, we are reckoning with the scale of misinformation circulating online, enabled by social platforms such as Facebook and Twitter. As we look to fix our broken information ecosystem, the most frequent suggestion is that platforms should hire hundreds of editors who work in multiple languages and decide what should or should not be seen. But, as Jeff Jarvis wrote over the weekend, we need to be careful what we wish for: We don’t want Facebook to become the arbiter of truth.

Instead, I would encourage the social platforms to include prominent features for filtering and flagging. They should work with journalists and social psychologists to invent a new visual grammar so that when content is fact-checked, debunked, corrected, or verified, those processes are transparent and available to anyone seeking to understand more about the origins of a story.

One way this could work is in the form of a watermark, embedded with the original piece of content. It is dispiriting to see a false claim garner thousands of retweets while the separate correction collects only 20 or so. These watermarks would work like a spam filter, making it more likely I would see authenticated content on my feed rather than fake content. Some fake content might slip through, and some authentic content might be marked as “spam,” but like my email inbox, I’ll take the imperfections if it improves my access to information.

We have much bigger problems than just the fake news sites circulating on Facebook–this is a concern for news organizations using social media to discover content, as well. To begin to develop a grammar of fake news, I collected six types of false information we’ve seen this election season.

1. Authentic material used in the wrong context

Donald Trump’s first campaign ad purported to show migrants crossing the border from Mexico, when the footage was actually migrants crossing from Morocco to Melilla in North Africa. This content isn’t fake, but the context is wrong.

In the weeks leading up to the election, a video emerged that appeared to show ballot-box stuffing. As Alastair Reid from First Draft News, of which I am a member, explained, the date stamp in the top left-hand corner shows it was captured on September 18, the date of elections in Russia. A quick Google reverse image search also confirms the origin of the footage. Again, the content isn’t fake; the context is wrong.

2. Imposter news sites designed to look like brands we already know

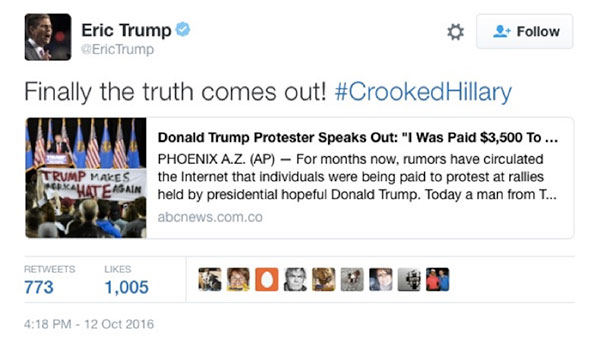

Eric Trump and campaign spokesperson Kellyanne Conway both retweeted this fake ABC news site:

If you look closely at the URL, you see it’s abc.com.co, which is not an official ABC News domain. The New York Times and Daily Mail have also recently been copied. Clone Zone is a site that makes this incredibly easy to do.
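For readers who want to see how a platform or newsroom tool might automate that "look closely at the URL" step, here is a minimal sketch. It assumes a hand-curated allowlist of official domains; the `OFFICIAL_DOMAINS` set and the `is_official` function are hypothetical names invented for illustration, and the two domains listed are examples, not a vetted list.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist for illustration only; a real one would need
# careful curation and regular updates.
OFFICIAL_DOMAINS = {"abcnews.go.com", "nytimes.com"}


def is_official(url: str) -> bool:
    """Return True if the URL's host is an allowlisted domain or a subdomain of one."""
    # urlsplit only recognizes the host when a scheme or "//" precedes it.
    if "//" not in url:
        url = "//" + url
    host = (urlsplit(url).hostname or "").lower()
    # Match the exact domain or a true subdomain (note the leading dot), so
    # look-alikes that merely append a suffix such as ".co" do not pass.
    return any(host == d or host.endswith("." + d) for d in OFFICIAL_DOMAINS)


print(is_official("http://abc.com.co/news"))       # imposter-style address
print(is_official("https://www.nytimes.com/"))     # subdomain of an allowlisted domain
```

A check like this only catches look-alike addresses. It says nothing about whether a story on a genuine domain is accurate, and it cannot help brands that publish solely on social platforms.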

NowThis was also the victim of a hoax; someone used NowThis branding to create a fake video in early October. Because NowThis publishes entirely on social media and does not have a destination website, it corrected this perception on the social web. As more brands live in a purely distributed environment, the social networks have a responsibility to find a way for corrections to travel with content.

3. Fake news sites

Out of all these types of misleading content, fake news sites have been subject to the most scrutiny since the election. Work by BuzzFeed journalist Craig Silverman over the past few weeks has highlighted Macedonian teenagers creating fake news articles purely to make money. His most recent analysis shows how much engagement fake news articles gathered on Facebook.

The infamous Pope endorsement story originated on WTOE 5 News, which describes itself as a “fantasy news site” on its “About” page–one click too many for most users. There should be ways to flag such sites that are blatantly creating fake stories.

Brian Forde, a researcher at MIT, recently compared fake news with email spam. We accept the need for junk folders despite occasionally losing “good” emails, he said, because the alternative is drowning in a sea of spam. It seems that social media users would benefit from some automated process that marks sources as fake. If users ever want to dive into that junk feed they can, but they probably won’t.

4. Fake information

Besides fake news sites, fake information is also frequently presented in graphics, images, and video. Designed to be highly shareable, these memes fill up newsfeeds and are often so creative and convincing in their delivery that most users do not think to question their authenticity, let alone know how or where to start checking.

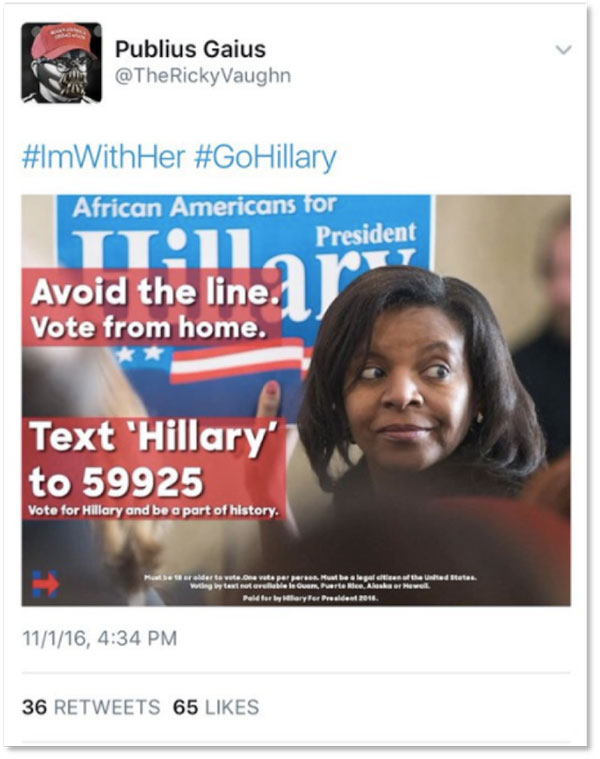

These images were circulating online just before the US election, incorrectly claiming people could stay at home and vote via text.

5. Manipulated content

Images and videos that have been deliberately manipulated are a huge part of the news ecosystem. Because they can be created easily by bedroom hoaxers, they are often trivialized and dismissed as merely mischievous. But just as prank calls are no longer funny when made to an emergency service, photoshopped hoaxes are no longer harmless when they relate to an election, terror attack, or humanitarian crisis.

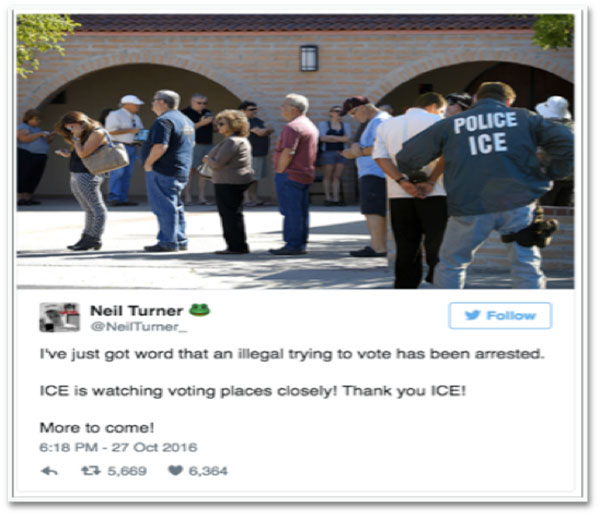

This photograph surfaced online a couple of weeks before the US election and appears to show an ICE official making an arrest at a voting station.

A simple reverse image search shows that the two men were edited into the original photograph, which was actually taken in Arizona during the primaries in March. Again, this example highlights the importance of finding a way for corrections to travel with fake content. Expecting every user to perform the same verification checks is not realistic or efficient.

6. Parody content

Parody content makes it hard to think about creating algorithmically driven rules to label fake content. (Although it would certainly be possible to create a database of satirical sites all over the world. This might even help people who fall for The Onion.)

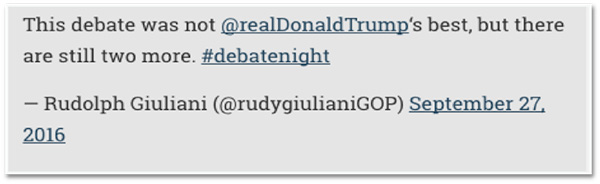

When Chuck Todd interviewed Rudy Giuliani on Meet the Press, he pushed Giuliani about a tweet he had sent in reference to the first debate:

When Giuliani made it clear he hadn’t tweeted this, Todd was forced to explain to viewers that the tweet had actually come from a parody account, which describes itself as a pastiche of the former mayor in its bio.
