With advancements in AI tools being rolled out at breakneck pace, journalists face the task of reporting developments with the appropriate nuance and context—to audiences who may be encountering this kind of technology for the first time.

But sometimes this coverage has been alarmist. The linguist and social critic Noam Chomsky criticized “hyperbolic headlines” in a New York Times op-ed. And there have been a lot of them.

“Bing’s A.I. Chat: ‘I Want to Be Alive. 😈’” “‘Godfather of AI’ says AI could kill humans and there might be no way to stop it.” “Could ChatGPT write my book—and feed my kids?” “Meet ChatGPT, the scarily intelligent robot who can do your job better than you.” “Microsoft’s new ChatGPT AI starts sending ‘unhinged’ messages to people.” “What is AI chatbot phenomenon ChatGPT and could it replace humans?”

To better understand how newsrooms are covering ChatGPT, we interviewed a variety of academics and journalists about how the media has been framing coverage of generative AI chatbots. To get a sketch of the coverage so far, we also pulled data on the volume of coverage in online news from the Media Cloud database and in TV news from the Internet TV News Archive, which we accessed via The GDELT Project’s API.

News reporting of new technologies often takes the pattern of a hype cycle, said Felix M. Simon, a doctoral researcher at the Oxford Internet Institute and Tow Center fellow. First, “It starts with a new technology which leads to all kinds of expectations and promises.” ChatGPT’s initial press release promised a chatbot that “interacts in a conversational way.” Next, media coverage branches into two extremes: “We have people say it’s the nearing apocalypse for industry XYZ or democracy,” or, alternatively, “it promises all kinds of utopias which will be brought about by the technology,” Simon said. Finally, after a few months, comes a more nuanced period of coverage, one that moves away from catastrophe or utopia to discuss real-world impacts. “That’s when the cycle starts to cool off again.”

But coverage of generative AI chatbots like ChatGPT seems unlikely to be cooling off anytime soon.

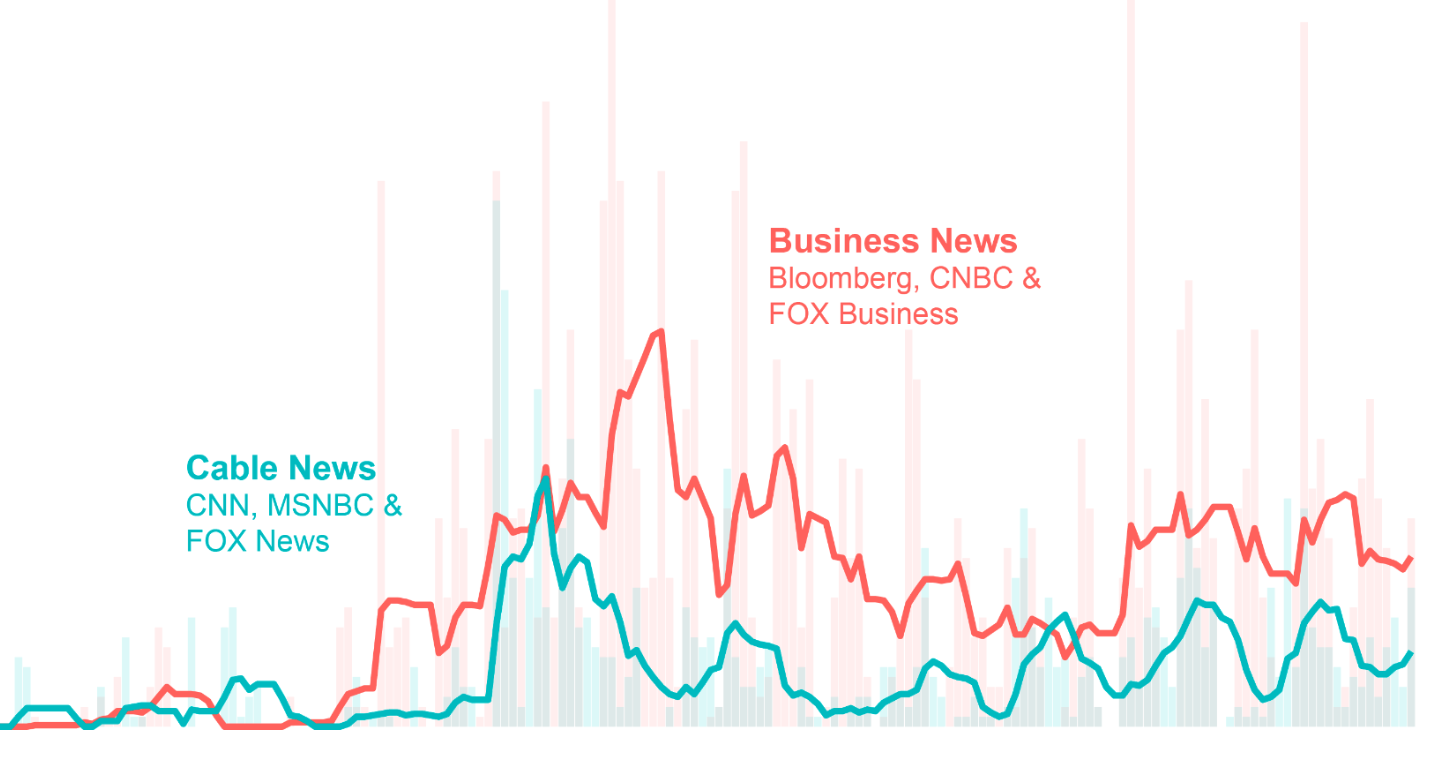

Online news sites started covering ChatGPT significantly about two months after its release. Credit: Media Cloud / Tow Center

OpenAI launched ChatGPT to the public on the last day of November 2022, and within just a few days, the site had over a million users. While the media did take notice early, it wasn’t until January and February of 2023 that online news coverage really started to pick up. That was around the time that BuzzFeed announced it would be using ChatGPT for content creation, Microsoft integrated a ChatGPT-powered chatbot into its Bing search engine, and Google announced Bard, its challenger to ChatGPT.

For Subramaniam Vincent, director of the Journalism and Media Ethics program at the Markkula Center for Applied Ethics at Santa Clara University, one recurring issue with media coverage of this technology is “that it tends to be led by what the companies say this technology is going to do.” That’s a structural problem not tied just to ChatGPT. Moreover, he added, “the CEOs of these companies go to Twitter and social media and start making their own claims to control the narrative about AI.”

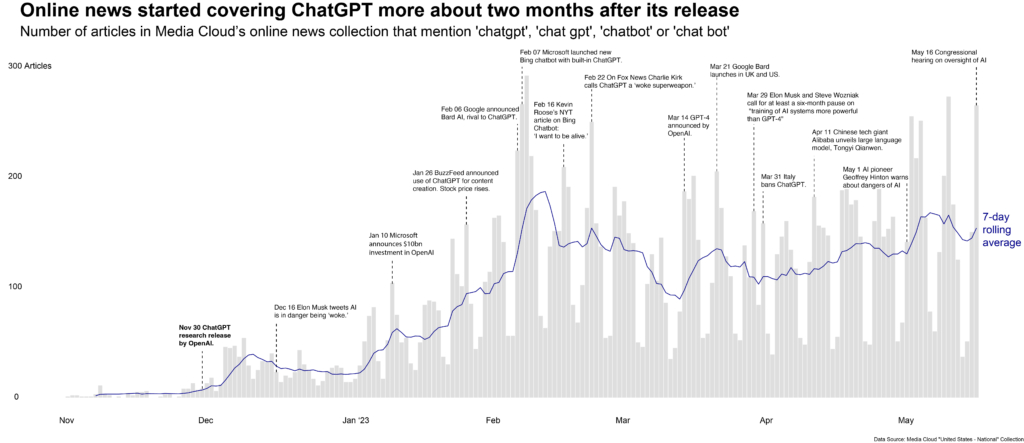

Early 2023 was also roughly when television stations began to air near-daily stories about the latest developments around chatbots from OpenAI, Google and Microsoft, according to data we pulled from the Internet TV News Archive using The GDELT Project’s interface.

On TV, business news is covering ChatGPT more than cable news. Credit: The GDELT Project / Tow Center

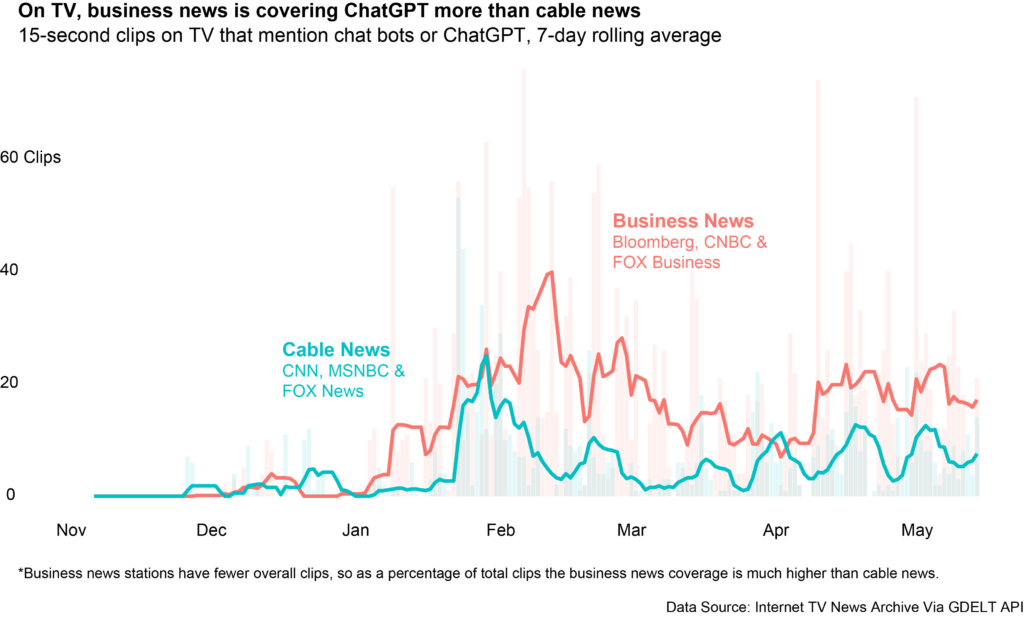

Business news channels have maintained a steady clip of stories about these companies’ activities around generative AI chatbots. CNBC is leading the pack in terms of volume of coverage.

Meanwhile, cable news channels had less coverage of the chatbots than business news channels did. Among the big three networks, CNN and Fox News have covered ChatGPT more than MSNBC has. Both CNN and Fox News have looked at the impact of generative AI on education, the workplace and jobs. The latter has also raised concerns about political bias. In one case, a host decried ChatGPT as a “woke superweapon.” Fox’s coverage also frequently mentioned Elon Musk, who has, among other comments, said that ChatGPT was in danger of becoming “woke” and later urged a six-month hiatus on developing AI tools.

ChatGPT coverage across business and cable TV news varied by network. Credit: The GDELT Project / Tow Center

According to a Fox News poll of voters conducted in April, about half say they are either not very or not at all familiar with AI programs like ChatGPT, making accurate news coverage all the more important. And some coverage across TV and online news has been nuanced, seeking to inform audiences about how to navigate the new technology, identify hallucinations, and double-check statements the AI produces. Reporters have also delved into issues of algorithmic bias, ethical considerations, the spread of misinformation, and possibilities for regulating misuse. Still other coverage continues to feel like science fiction, promising everything from the end of work to the destruction of humanity and translating uncertainty into fear rather than understanding.

A hype cycle?

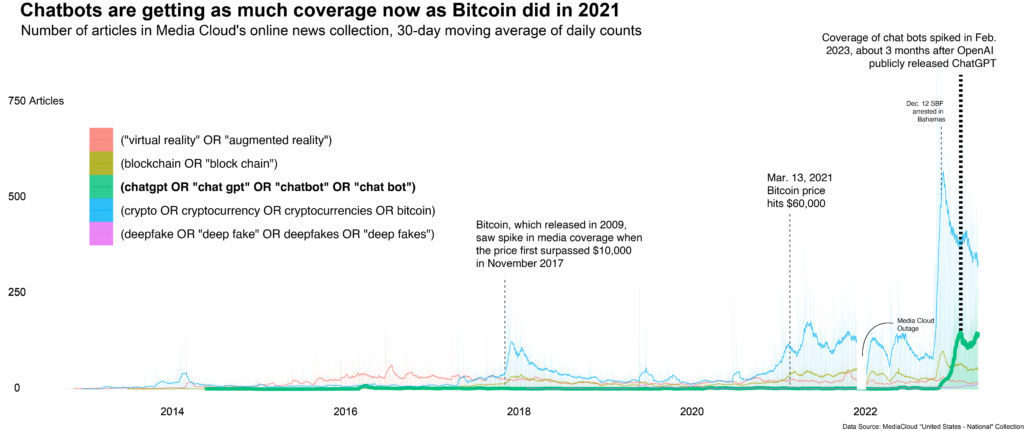

While it seems as if ChatGPT is ushering in a new era, there are also faint echoes of the coverage of Bitcoin and the promise of cryptocurrencies to change banking and commerce as we know it. Data from Media Cloud’s news database suggests that just six months after launching, ChatGPT is already seeing airtime similar to that given to cryptocurrencies in 2021, when Bitcoin prices peaked, over a decade after it was released to the public in 2009.

Some observers have felt dissatisfied with the media coverage. “Are we in a hype cycle? Absolutely. But is that entirely surprising? No,” said Paris Martineau, a tech reporter at The Information. The structural headwinds buffeting journalism (the collapse of advertising revenue, shrinking editorial budgets, smaller newsrooms, demand for SEO traffic) help explain the “breathless” coverage and a broader sense of chasing content for web traffic. “The more you look at it, especially from a bird’s eye view, the more it [high levels of low-quality coverage] is a symptom of the state of the modern publishing and news system that we currently live in,” Martineau said, referring to the sense that newsrooms need to be covering every angle, including sensationalist ones, to gain audience attention. In a perfect world, all reporters would have the time and resources to write ethically framed stories on AI that do not read like science fiction. But they do not. “It is systemic,” she added.

Chatbots are getting as much coverage now as Bitcoin in 2021. Credit: Media Cloud / Tow Center

It’s possible to get a sketch of how the coverage of ChatGPT compares to other new technologies. As the chart above shows, coverage of ChatGPT is already significantly outstripping a range of other hyped technologies like “virtual reality” and “deep fakes,” although coverage of “cryptocurrency” is much higher (particularly after the collapse of FTX).

Why has the volume of ChatGPT coverage overtaken that of other new technologies like VR and “deep fakes”? “One thought I have is that because this new tool has direct implications for journalism, that could be one reason why there’s been such an overwhelmingly huge amount of attention in the media,” said Jenna Burrell, director of research at Data & Society. “I would guess that’s part of it.” Another is that ChatGPT and other generative AI tools have greater potential to upend the creative world as we know it.

Perhaps there is an argument that unlike cryptocurrencies, chatbots and large language models wield real potential to change society. Already we can see the ways in which ChatGPT is transforming education and being incorporated into the day-to-day workflows of knowledge workers in a large range of sectors.

But what’s concerning for Burrell has been the framing of much of this reporting. “I’ve taken a lot of [media] requests and have felt that there was a need for some clarity about how these technologies work, and a need to fight some of the really outrageous hype,” she said. There’s been an anthropomorphic tendency towards “attributing thinking, knowing, writing, and innovating to this non-human tool,” for instance in the New York Times story claiming Bing’s chatbot wanted to “be alive.”

One concern with this framing is that the public gets the science-fiction version of the AI story—like some of the follow-up coverage of AI pioneer Geoffrey Hinton’s interview on its dangers—and the public ends up being cut out of the important discussions around ethics, usage and the future of work.

“It’s the Hollywood-ification of the public’s understanding of AI,” said Nick Diakopoulos, associate professor in Communication Studies and Computer Science at Northwestern University. We have an image of active robots from movies. “You would hope that the news coverage wouldn’t simply just bolster that kind of entertaining view of the technology, that it would take a little bit more of a critical look.”

Towards better coverage

How could we imagine better media representations of generative AI going forward? For Burrell of Data & Society, who thinks we’re still in the hype phase of the cycle on generative AI chatbots, more sober coverage is needed to cover the issues that matter. One story that seems to have gotten lost is the “incredible consolidation of power and money in the very small set of people who invested in this tool, are building this tool, are set to make a ton of money off of it.” We need to move away from focusing on red herrings like AI’s potential “sentience” to covering how AI is further concentrating wealth and power.

More sober reporting about what these tools do and how they work is needed to cut through the fog of science fiction. Generative AI tools like ChatGPT, trained on immense amounts of data, are skilled at guessing the next word in a sentence sequence but don’t “think” in the ways humans do. “So it’s literally just walking down the line statistically, looking at the statistical distribution of words that have already been written in the text, and then adding one next word,” Diakopoulos said. More reporting should outline how these technologies actually work—and don’t.
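The "one next word" idea Diakopoulos describes can be illustrated with a deliberately tiny sketch. This is a toy under stated assumptions, not how ChatGPT is built: production systems use large neural networks over sub-word tokens and far more context, while this example only counts which word follows which in a made-up sentence. The function names and the sample corpus are hypothetical and chosen purely for illustration.

```python
import random
from collections import Counter, defaultdict


def build_bigram_counts(text):
    """Count, for each word, how often every other word directly follows it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word(counts, word, rng):
    """Sample a follower of `word` in proportion to how often it was seen."""
    followers = counts.get(word)
    if not followers:
        return None  # the word never appeared with anything after it
    candidates = list(followers.keys())
    weights = list(followers.values())
    return rng.choices(candidates, weights=weights, k=1)[0]


def generate(counts, start, length, rng):
    """Extend `start` one word at a time, for at most `length` added words."""
    output = [start]
    for _ in range(length):
        word = next_word(counts, output[-1], rng)
        if word is None:
            break
        output.append(word)
    return " ".join(output)


# Hypothetical toy corpus; real models are trained on vastly more text.
corpus = "the cat sat on the mat and the cat slept on the sofa"
counts = build_bigram_counts(corpus)
print(generate(counts, "the", 8, random.Random(0)))
```

The output can look fluent while the program has no notion of cats or sofas: it only replays statistical patterns in the text it was given, which is the distinction between predicting and "thinking" that the paragraph above draws.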

That means who gets to train these models—and what flaws and biases will be potentially baked in—are questions newsrooms need to be covering. Moreover, editors need to reevaluate whose comments on generative AI are considered newsworthy.

Sensationalized coverage of generative AI “leads us away from more pressing questions,” Simon of the Oxford Internet Institute said. These include the potential future dependence of newsrooms on big tech companies for news production, the governance decisions of these companies, the ethics and bias questions relating to models and training, and the climate impact of these tools. “Ideally, we would want a broader public to be thinking about these things as well,” Simon said, not just the engineers building these tools or the “policy wonks” interested in this space.

Newsrooms should lay down ground rules, perhaps in their style guides, to work out coverage strategies moving forward, said Martineau of The Information. One example: no anthropomorphising chatbots. This parameter-setting “could help cool the fires of this hype cycle,” she said.
