
You’ve probably heard that news organizations such as AP, Reuters, and many others are now turning out thousands of automated stories a month. It’s a dramatic development, but today’s story-writing bots are little more than Mad Libs, filling out stock phrases with numbers from earnings reports or box scores. And there’s good reason to believe that fully automated journalism is going to be very limited for a long time.

At the same time, quietly and without the fanfare of their robot cousins, the cyborgs are coming to journalism. And they’re going to win, because they can do things that neither people nor programs can do alone.

Apple’s Siri can schedule my appointments, Amazon’s Alexa can recommend music, and IBM’s Watson can answer Jeopardy questions. I want interactive AI for journalism too: an intelligent personal assistant to extend my reach. I want to analyze superhuman amounts of information, respond to breaking news with lightning reflexes, and have the machine write not the last draft, but the first. In short, I want technology to make me a faster, better, smarter reporter.

Let’s call this technology Izzy, after the legendary American muckraker I. F. Stone, who found many of his stories buried in government records. We could build Izzy today, using voice recognition to drive a variety of emerging journalism technologies. Already, computers are watching social media with a breadth and speed no human could match, looking for breaking news. They are scanning data and documents to make connections on complex investigative projects. They are tracking the spread of falsehoods and evaluating the truth of statistical claims. And they are turning video scripts into instant rough cuts for human review.

‘Izzy, find breaking news.’

“This is a speed game, clearly, because that’s what the financial markets are looking for,” says Reg Chua, executive editor for data and innovation at Reuters. Reuters produced its first automated stories in 2001, publishing computer-generated headlines based on the American Petroleum Institute’s weekly report. That report contains key oil production figures closely watched by energy traders who need new information as fast as possible, certainly faster than their competitors. Automation is a no-brainer when seconds count. Today, the news organization produces automated stories from all kinds of corporate and government data, something like 8,000 automated items a day, in multiple languages.

Automated systems can report a figure, but they can’t yet say what it means; on their own, computer-generated stories contain no context, no analysis of trends, anomalies, and deeper forces at work. Reuters’s newest technology goes deeper, but with human help: It still writes words, but isn’t meant to publish stories on its own. Reuters’s “automation for insights” system, currently under development, summarizes interesting events in financial data and alerts journalists. Instead of supplying what Chua calls “the headline numbers—the index was at this number, up/down from yesterday’s close,” the machine surfaces “more sophisticated analyses, the biggest rise since whenever, that sort of thing.”
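To make the idea of "the biggest rise since whenever" concrete, here is a minimal sketch of that kind of check. It is not Reuters's system: the function names, the input format (a list of date and closing-value pairs, oldest first), and the wording of the output sentence are all assumptions made for illustration.

```python
from datetime import date

def biggest_rise_since(closes):
    """closes: list of (date, closing value) pairs, oldest first (assumed format).

    Returns the latest one-day change and the most recent earlier day
    whose change was at least as large, or None if there is no such day.
    """
    changes = [
        (closes[i][0], closes[i][1] - closes[i - 1][1])
        for i in range(1, len(closes))
    ]
    _, latest = changes[-1]
    for day, change in reversed(changes[:-1]):
        if change >= latest:
            return latest, day
    return latest, None

def insight_sentence(name, closes):
    """Turn the comparison into an alert for a journalist, not a finished story."""
    latest, since = biggest_rise_since(closes)
    if latest <= 0:
        return None  # nothing to flag on a flat or down day
    if since is None:
        return f"{name} posted its biggest one-day rise in the available data."
    return f"{name} posted its biggest one-day rise since {since.isoformat()}."
```

The output is deliberately a lead "in the form of a sentence": deciding whether the rise matters is still left to the reporter.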

(Daniel Zender)

The system could look for changes in analysts’ ratings, unusually good or bad performance compared to other companies in the same industry, or company insiders who have recently sold stock. Rather than being a sentence generator, it’s meant to “flag journalists to things that might be of interest to them,” says Chua, “helpfully done in the form of a sentence.”

But not all breaking news comes through financial data feeds, so Reuters’s most sophisticated piece of automation finds news by analyzing social media. Internal research showed that something like 10 or 20 percent of news breaks first on Twitter, so the company decided to monitor Twitter. All of it.

At the end of 2014, Reuters started a project called News Tracer. The system analyzes every tweet in real time—all 500 million or so each day. First it filters out spam and advertising. Then it finds similar tweets on the same topic, groups them into “clusters,” and assigns each a topic such as business, politics, or sports. Finally it uses natural language processing techniques to generate a readable summary of each cluster.
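The first two steps, filtering and clustering, can be sketched in a few lines. This is a toy version under stated assumptions, not News Tracer's actual method: the spam markers are made up, similarity is plain word overlap (Jaccard similarity), and the 0.3 threshold is arbitrary. Topic labeling and summarization are left out.

```python
import re

# Hypothetical spam phrases; a real filter would be far more sophisticated.
SPAM_MARKERS = ("buy now", "click here", "free followers")

def tokens(text):
    """Lowercased set of words, hashtags, and mentions in a tweet."""
    return set(re.findall(r"[a-z0-9#@']+", text.lower()))

def is_spam(text):
    lowered = text.lower()
    return any(marker in lowered for marker in SPAM_MARKERS)

def jaccard(a, b):
    """Share of words two token sets have in common."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def cluster_tweets(tweets, threshold=0.3):
    """Greedy single-pass clustering: each tweet joins the first
    cluster it resembles closely enough, or starts a new one."""
    clusters = []
    for text in tweets:
        if is_spam(text):
            continue
        toks = tokens(text)
        for cluster in clusters:
            if jaccard(toks, cluster["tokens"]) >= threshold:
                cluster["tweets"].append(text)
                cluster["tokens"] |= toks
                break
        else:
            clusters.append({"tokens": set(toks), "tweets": [text]})
    return [c["tweets"] for c in clusters]
```

A single pass like this suits a stream, since each tweet is handled once as it arrives.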

There have been social media monitoring systems before, mostly built for marketing and finance professionals. DataMinr is a powerful commercial platform that also ingests every tweet, and there’s quite a bit of overlap with Reuters’s internal tool, which is good news for journalists who don’t work at Reuters. But News Tracer was built from the ground up for reporters, and perhaps what most distinguishes it are the “veracity” and “newsworthiness” ratings it assigns to each cluster.

Newsroom standards are rarely formal enough to turn into code. How many independent sources do you need before you’re willing to run a story? And which sources are trustworthy? For what type of story? “The interesting exercise when you start moving to machines is you have to start codifying this,” says Chua. Much like trying to program ethics for self-driving cars, it’s an exercise in turning implicit judgments into clear instructions.


News Tracer assigns a credibility score based on the sorts of factors a human would look at, including the location and identity of the original poster, whether he or she is a verified user, how the tweet is propagating through the social network, and whether other people are confirming or denying the information. Crucially, Tracer checks tweets against an internal “knowledge base” of reliable sources. Here, human judgment combines with algorithmic intelligence: Reporters handpick trusted seed accounts, and the computer analyzes who they follow and retweet to find related accounts that might also be reliable.
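The seed-account idea is easy to illustrate. In the sketch below, an account becomes a candidate for the knowledge base once enough hand-picked seeds follow or retweet it. The data structure, the function name, and the threshold of two endorsements are assumptions for illustration; the article does not describe how Reuters actually weighs these signals.

```python
from collections import Counter

def expand_trusted(seeds, follows, min_endorsements=2):
    """Suggest accounts that several trusted seeds follow or retweet.

    seeds: set of account names chosen by reporters.
    follows: dict mapping an account to the set of accounts it
             follows or retweets (assumed input format).
    """
    endorsements = Counter()
    for seed in seeds:
        for account in follows.get(seed, set()):
            if account not in seeds:
                endorsements[account] += 1
    return {a for a, n in endorsements.items() if n >= min_endorsements}
```

The result is a list of suggestions; whether a suggested account deserves trust remains an editorial call.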

“A bomb goes off in a certain place, and then ultimately the verified account of that police station says, or the mayor’s office says, or the verified account of the White House says,” explains Chua. If Reuters has that, it might be ready to run the story, and a reporter should know it.

News Tracer also must decide whether a tweet cluster is “news,” or merely a popular hashtag. To build the system, Reuters engineers took a set of output tweet clusters and checked whether the newsroom did in fact write a story about each event—or whether the reporters would have written a story, if they had known about it. In this way, they assembled a training set of newsworthy events. Engineers also monitored the Twitter accounts of respected journalists, and others like @BreakingNews, which tweets early alerts about verified stories. All this became training data for a machine-learning approach to newsworthiness. Reuters “taught” News Tracer what journalists want to see.

The results so far are impressive. Tracer detected the bombing of hospitals in Aleppo and the terror attacks in Nice and Brussels well before they were reported by other media. Chua estimates the tool enabled Reuters to begin its reporting eight to 60 minutes ahead of the competition, a serious head start.

For Chua, the significance of Tracer is not just what the machine can do, but what it frees reporters to spend more time doing: “Talk to people, pose questions that haven’t been posed before, make leaps of insight that machines don’t do as well.”

Cyborg logic

It’s tempting to ask our hypothetical reporter AI to work on its own. “Izzy, investigate this data,” we’d want to say, but it will be a long time before a computer can write anything but the most formulaic stories by itself.

In 2012, Michael Sedlmair, an assistant professor in the Visualization and Data Analysis Group at the University of Vienna, co-published a paper that helps explain why so many things are so hard for computers to do.

“The problem is the problem,” says Sedlmair. “The underlying assumption behind automatic approaches is that the problem is well defined. In other words, we know exactly what the task is and have all the data available to solve the problem.”

Sedlmair sorts problems on a diagram with two axes. The first axis is task clarity, meaning how well defined the problem is. Buying a train ticket, deciding whether an email is spam, or checking whether someone’s name appears in a database are clear problems with clear solutions.

But lots of really interesting problems—including most of those posed by journalism—are not at all clear. There’s no one-size-fits-all recipe for doing research, following a hunch, or seeing a story in the data. As Sedlmair puts it, “there is no single optimal solution to these problems.”


The other axis represents the location of the information needed to solve the problem. Computer scientists frequently assume that all necessary information is already stored in the computer as data, but this is rarely true in journalism. “To fulfill certain tasks, data often needs to be combined with information that is not computerized but still ‘locked’ in people’s heads,” says Sedlmair.

Consider the Wall Street Journal story that revealed EMC Corporation’s CEO routinely used company jets to fly to his vacation homes. This came from analysis of FAA flight records, but the data is not a story until you combine it with some additional information, namely, the locations of the CEO’s vacation homes, and the fact that he’s not supposed to be using these jets for personal travel. That information was in the reporter’s head, perhaps gleaned from interviews or late nights spent reading disclosure documents. There will never be an algorithm that can find this story—and many others that might be lurking there—from FAA data alone, for the simple reason that identifying the suspicious flight pattern requires information that is not in the data.
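A small sketch shows where the human knowledge enters. The matching itself is trivial; the crucial input is `home_airports`, a list only the reporter can supply. The field names and the function are hypothetical, not taken from the Journal's analysis.

```python
def flag_trips_to_homes(flights, home_airports):
    """Return flights that land at reporter-supplied airports,
    plus their share of all flights.

    flights: list of dicts with a 'dest' airport code (assumed format).
    home_airports: set of airport codes near the executive's vacation
                   homes. This comes from the reporter, not the data.
    """
    hits = [f for f in flights if f["dest"] in home_airports]
    share = len(hits) / len(flights) if flights else 0.0
    return hits, share
```

Run with an empty `home_airports` set, the same flight records flag nothing, which is the point: the pattern is invisible without the outside knowledge.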

“Fully automatic solutions are good when the task is clear and we have all, or at least most of the necessary information to solve this task available on our computer,” says Sedlmair. For everything else—for most of journalism—we need human-machine cooperation.

Instead of asking computers to do human work, we must learn to meet them in the middle, breaking our reporting work into relatively concrete, self-contained tasks. Fortunately, that’s still extraordinarily useful.

‘Izzy, look for similar crimes.’

“Crime methods are not infinite,” says Organized Crime and Corruption Reporting Project co-founder Paul Radu. Techniques for hiding money and ownership get copied from place to place, spreading through underground networks.

Based in Sarajevo, OCCRP has grown into a network of more than 150 journalists in 30 countries. Collectively, they’ve traced billions of dollars in laundered money and bribes across Eastern Europe and Russia. Radu once posed as a slave buyer to infiltrate a human trafficking ring, but these days OCCRP reports almost entirely with documents, mostly public records they use to trace ownership across borders and through shell companies in tax havens. This makes their work particularly suitable for computer assistance.

The project has already invested heavily in tools to manage their document and data collection. Their goal now is to teach a computer to find crimes in the data.

Suppose a reporter working in one city discovers that the local government issues building contracts much more quickly to a particular construction company, taking two months instead of the average nine-month turnaround time. On further investigation, the reporter finds a family link between the company with the fast contracts and a government official, and writes a story. The logical next step is to look for more firms, perhaps in other cities, whose permits are approved unusually quickly. A reporter might also learn that a series of contracts was awarded to companies owned by Panamanian concerns incorporated only days before, all represented by the same law firm. This may turn out to be a cookie-cutter strategy for hiding the true payee, meaning other reporters should also be looking out for vendors owned by recently established Panama companies.

(Daniel Zender)

OCCRP is in the early stages of this “Crime Pattern Recognition” project. The concept is this: Reporters in the OCCRP network will submit narrative descriptions of the data pattern that led to their story, which programmers will then turn into search queries. Those queries will run continuously against incoming documents and data published by governments or obtained by OCCRP reporters from a wide variety of sources, including leaks and bulk FOIA requests.
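The two patterns from the construction example could be written as queries along these lines. This is a sketch under assumptions, not OCCRP's code: the record fields, the function names, and the thresholds (half the median turnaround, 30 days since incorporation) are invented for illustration.

```python
from datetime import date
from statistics import median

def fast_approvals(contracts, ratio=0.5):
    """Contracts approved in under `ratio` times the median turnaround."""
    typical = median(c["days_to_approve"] for c in contracts)
    return [c["id"] for c in contracts if c["days_to_approve"] < ratio * typical]

def freshly_incorporated_vendors(contracts, registry, max_age_days=30):
    """Contracts won by companies incorporated shortly before the award.

    registry: dict mapping a vendor name to a record with an
              'incorporated' date (assumed company-register extract).
    """
    leads = []
    for c in contracts:
        company = registry.get(c["vendor"])
        if company is None:
            continue  # no register entry: nothing to compare
        age = (c["awarded"] - company["incorporated"]).days
        if 0 <= age <= max_age_days:
            leads.append(c["id"])
    return leads
```

Each function returns contract identifiers to look into, in keeping with the idea that the machine produces leads for a reporter to verify.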

“It’s quite important to use the knowledge of the local reporter,” says Radu. Then other journalists can look for similar crimes, aided by the computer. “The machine has a very important role, because it can spit out, ‘Hey look, this is another instance of the same type of corruption. So you should probably investigate this.’ My hope is journalists will subscribe to this and say, let’s see what I can do today. Is there an alert for me?”

Radu emphasizes that this system generates leads, not stories. “It’s important for the investigator to verify, to check, to really do the investigation.”

OCCRP software developer Friedrich Lindenberg puts the limitations of data analysis this way: “If perfect data were available for the world economy [we could] just replace reporters with pattern-detection machines, sort crimes by how politically relevant they are, and write it up from the top….[But] the world at large is still opaque and requires human labor, creativity, and judgment to make links between people, companies, and assets.”

OCCRP succeeds in part because the organization is meticulous about collecting data. If we’ve learned anything from the past 20 years of AI research, it’s that data makes the difference. Machine translation was impossible without huge quantities of the same text in multiple languages, and each self-driving car learns from the collective driving experience of all self-driving cars. As news organizations become more comfortable working with large and diverse data sources, previously impossible feats, such as finding all instances of a certain kind of corporate shell game, will become straightforward.

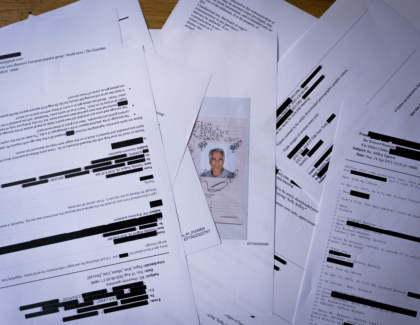

Most news organizations don’t systematically collect and exploit their data. Groups like OCCRP and the International Consortium of Investigative Journalists are the exceptions: They see the value of data and are slowly amassing large quantities of it relevant to trans-national investigative journalism, such as the Panama Papers. Data comes from a variety of sources with all sorts of conditions attached. There are major technical, legal, and ethical hurdles to making these internal archives more widely available, but even broadly available public data can be massively useful. If you can collect and analyze it, you can do things that have never been possible before.

‘Izzy, check everything they say.’

During the first debate between Clinton and Trump, NPR employed a team of 20 reporters to analyze and fact check the transcript in real time. What if a computer could monitor every word uttered by politicians and media alike, to trace the genesis and spread of falsehoods?

The UK-based fact-checking organization Full Fact recently published a comprehensive report noting that while fully automated fact checking is still many years away, machine-assisted fact checking is already here, and Full Fact is doing it. “Everybody either underestimates what’s possible or overestimates what’s possible,” says Full Fact director Will Moy. “There are people who think it’s all complicated technology, you can’t do anything right now, and there are people who think we’ll ultimately just have AI and all of this will just happen, which is a promise that has never come true.”

Moy sees fact checking as a four-stage process, each of which is potentially automatable: monitor media across a wide variety of sources; identify factual claims; check whether they’re true; and publish the verdict.

Full Fact has had a monitoring system in beta since early this year, automatically analyzing both the UK parliamentary record and stories scraped from several dozen mostly UK news websites, from the BBC to The Guardian to Breitbart. The goal is to catch the entire life cycle of every falsehood: to watch lies come into existence, spread, and hopefully die out as a result of Full Fact’s efforts. By observing this ecosystem, the organization hopes to fine-tune its strategy.


“We’re pretty unusual among fact checking organizations because we go to people who make mistakes and ask them to correct the record,” says Moy. “For example, we have had the prime minister publish formal corrections in the Hansard [the UK’s official parliamentary record], we’ve had newspapers publish corrections, and that sort of thing.”

Full Fact’s internal platform works by continuously monitoring online sources for references to previously debunked claims using hand-crafted search queries. It’s simple but effective. The next step, now running as a proof-of-concept, is automated detection and checking of general statistical claims.

“Statistical claims very often take recognizable patterns: ‘X is rising’ is a classic kind of statistical claim,” says Moy. It’s the sort of pattern a computer could identify, then check against public data.

Full Fact maintains a database of official sources for important statistics, and is working with UK government offices to ensure that their data is machine readable. The hope is that a computer will soon be able to find the primary source for a statistical claim on its own. But there are all kinds of reasons why simply looking at the numbers can be misleading. Moy points to a recent spike in the number of marriage licenses issued in Sweden. Rather than a summer of love, it turned out that the government had changed certain tax rules. Often, measurement methods change over time or differ between regions, making direct comparisons difficult. Software that cannot interpret such footnotes will come to bad conclusions.
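Here is a minimal sketch of spotting an "X is rising" claim and comparing it with the numbers. It is not Full Fact's implementation: the regular expression, the `statistics` dictionary of recent values, and the verdict labels are all assumptions. Note that a mismatch yields "needs review" rather than "false," precisely because of footnotes like the Swedish tax change.

```python
import re

def find_trend_claims(text, known_subjects):
    """Find sentences of the form '<subject> is rising/falling'
    for subjects we hold statistics on."""
    claims = []
    for subject in known_subjects:
        pattern = re.compile(
            rf"\b{re.escape(subject)}\s+(?:is|are)\s+(rising|falling)\b",
            re.IGNORECASE,
        )
        for match in pattern.finditer(text):
            claims.append((subject, match.group(1).lower(), match.group(0)))
    return claims

def check_claim(subject, direction, statistics):
    """Compare the claimed direction with the last two data points.

    statistics: dict mapping a subject to a list of values, oldest
                first (a stand-in for a database of official sources).
    """
    series = statistics.get(subject)
    if series is None or len(series) < 2:
        return "no data"
    change = series[-1] - series[-2]
    observed = "rising" if change > 0 else "falling" if change < 0 else "flat"
    return "consistent" if observed == direction else "needs review"
```

Comparing only the last two data points is the crudest possible definition of a trend; choosing the right window is itself a judgment call.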

Getting this kind of contextual analysis right will be a challenge for AI researchers, but it’s not a big problem for Full Fact because in the organization’s prototype system, the computer gets a human editor. The machine displays the questionable sentence side by side with the data that it believes disproves the claim, and the human fact checker goes from there.

Full Fact’s ultimate goal is to change the shape of public discourse. But to do this, it needs to know which false claims are most often repeated, and measure the effect of its interventions. “Claims only really matter when they’re repeated a lot, because it takes a lot of repetition for them to sink in,” says Moy. “It will be interesting to identify those claims as they keep churning away, shaping the debate, not necessarily on the front pages, that actually really cumulatively matter over time.”

‘Izzy, make a rough cut.’

Just as computers have found a niche producing automated stories from data, journalists are already using software to cut stock footage together. In goes the script, out comes an edit.

Israeli startup Wibbitz began with the idea of creating a “personal video newscaster.” In 2013 it launched an app that pulled articles from a variety of news sources, cut the text down to video script length, and read the stories in a synthesized voice accompanied by computer-selected images. The company has since added human curators and voice actors, and focused more on helping publishers produce their own videos quickly.

“What we’ve created in Wibbitz is a technology that can automate the majority of the production process,” says co-founder and CEO Zohar Dayan. The majority, not the entirety.

Wibbitz turns text articles into video rough cuts. First it creates a script by using natural language processing techniques to select key sentences. That is, the computer doesn’t actually write anything, it just edits the human to length. Then it scans the text for “entities,” which include people and companies, but also things like sports leagues and notable events. It assembles a video by searching for footage of those entities in licensed sources such as Getty Images and Reuters, or the publisher’s own archives. These repositories, with their carefully tagged images and videos, are the critical data source that underlies the intelligence.
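The two steps described above, picking key sentences and matching entities to tagged footage, can be sketched as follows. This is a toy stand-in, not Wibbitz's technology: sentences are ranked by simple word frequency, entities are matched by plain substring search rather than real entity recognition, and the stopword list and clip identifiers are made up.

```python
import re
from collections import Counter

# Tiny illustrative stopword list; real systems use much larger ones.
STOPWORDS = {"the", "of", "to", "was", "a", "an", "and", "in"}

def content_words(text):
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]

def select_key_sentences(text, limit=2):
    """Keep the `limit` sentences whose words are most frequent in
    the article, in their original order. Nothing is rewritten."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(content_words(text))

    def score(sentence):
        words = content_words(sentence)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    return [sentences[i] for i in sorted(ranked[:limit])]

def match_footage(script_sentences, footage_tags):
    """Pair each script sentence with clips tagged with an entity it mentions.

    footage_tags: dict mapping an entity name to a list of clip
                  identifiers (a stand-in for a tagged archive).
    """
    plan = []
    for sentence in script_sentences:
        lowered = sentence.lower()
        clips = [
            clip
            for entity, clip_ids in footage_tags.items()
            if entity.lower() in lowered
            for clip in clip_ids
        ]
        plan.append((sentence, clips))
    return plan
```

Everything the second function can do depends on the tags, which is why the carefully labeled archives matter more than the matching logic.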


The point is scalable video production, which sits squarely in the digital media zeitgeist. Wibbitz is one of a few computer-assisted video companies currently selling to media organizations, the other major one being Wochit.

“I could make a 35- to 40-second video, cradle to grave, in probably seven to 10 minutes,” says Neal Coolong, a senior editor for USA Today’s network of NFL sports sites. He supervises four people whose primary task is making videos with Wibbitz, each producing six or seven per day. All told, they add a video to about one in 10 articles published on the network. The videos are designed for sound-off viewing on phones and social media, with subtitles instead of narration.

Yet Wibbitz’s value may be more about workflow and less about sophisticated AI. Coolong prefers writing the video scripts himself to using the auto-summarization feature, and finds that the computer doesn’t always choose the best images. “For me there’s a certain way that I want to have it,” says Coolong, noting that Wibbitz does better with straight news writing, while his team more often does opinion pieces.

To get a feel for Wibbitz’s level of intelligence, I asked Dayan to demonstrate the system starting with a recent article on EpiPen price hikes. He copied five paragraphs of text from the story, from which the system generated a 94-second video in moments. It consisted entirely of similar shots of EpiPen manufacturer Mylan’s CEO, mostly taken from the same interview, plus one image of the Capitol building to go with a mention of attention from Congress. We were able to include shots of the product itself by searching for “EpiPen” within the editing interface.

Dayan says Wibbitz works best when the story is about popular people and things, suggesting that EpiPen price hikes are a bit niche. But he notes that the system learns from its users. During the Olympics, the system learned about previously obscure athletes because they were mentioned in articles processed by the platform. Knowing that Conor Dwyer is an American swimmer in the men’s 200-meter freestyle lets the computer pull up related swimming photos even if the script never says “swimming.” “The more content flows through the system, the smarter it gets, the better it knows how to make connection between certain types of things,” says Dayan. There’s the importance of data to train the computer, again.

Coolong believes that automatically generated videos will augment, not replace, traditional video production at USA Today. “We don’t have a TV crew on standby waiting for breaking news,” he notes. When the Colin Kaepernick story broke, for instance, Wibbitz allowed Coolong’s team to produce a video much more quickly than the couple of hours it would have taken a human being to write up a script and shoot it. The big drawback of automated systems, of course, is that they can only use footage that has already been shot.

Building a cyborg

The titans of AI have so far ignored reporters. Google is working on self-driving cars, image understanding, and health applications. IBM’s Watson division has invested $1 billion in “cognitive computing” in the hope of selling software to practitioners of law, medicine, marketing, and intelligence. Journalism doesn’t make the list; it simply isn’t a big enough market.

But a few people are building AI for journalism anyway, or at least the component parts. I’ve described a few major examples, but dozens of simpler systems operate throughout journalism: alert systems that watch for court filings; automated campaign finance monitoring; and all manner of single-purpose bots that write stories about earthquakes or stock prices or crime waves.

We could build Izzy today. The pieces exist. With commercial voice recognition technology, we could tie them together to create a powerful reporter’s assistant, the other half of a human-plus-machine journalism team. What we’re lacking is a place to integrate all the features that journalist-programmers are already creating for their own work, and all the data they’re able to share. Without such a platform, newsroom developers’ work will remain fragmented.

Inspiration for Izzy might come from bots like Amazon’s Alexa, which can be upgraded by installing “skills.” Reporters should be able to add “organized crime” or “campaign finance” or “seismic monitoring” skills. And perhaps before long, we will. The individual skills are being worked out by innovative news organizations, one story at a time.

AI is definitely coming to journalism, but perhaps not the way you might have imagined. It will be a long time before a machine beats humans at reporting, but reporters augmented with intelligent software—cyborg reporters—have already pushed past the ancient limitations of a single human brain.

Jupyter Notebook (formerly IPython Notebook) is the data scientist’s fairytale come true: an interactive journal where you can mix notes and code, with the computer drawing the charts and filling in the tables automatically. Journalists use a lot of different software for data analysis, everything from traditional spreadsheets to specialized tools like the R programming language. Doing a story has always involved an awkward dance, going back and forth between different programs, struggling to keep track of multiple versions. No more. Doing an entire project in a single notebook fills me with delight. I end up with a step-by-step journal of my analysis and visualization work, complete with all my sketches and experiments. It’s intuitive to use, easy to dig up later when new data comes in, and you can publish the whole thing online, a godsend for journalistic transparency.
