
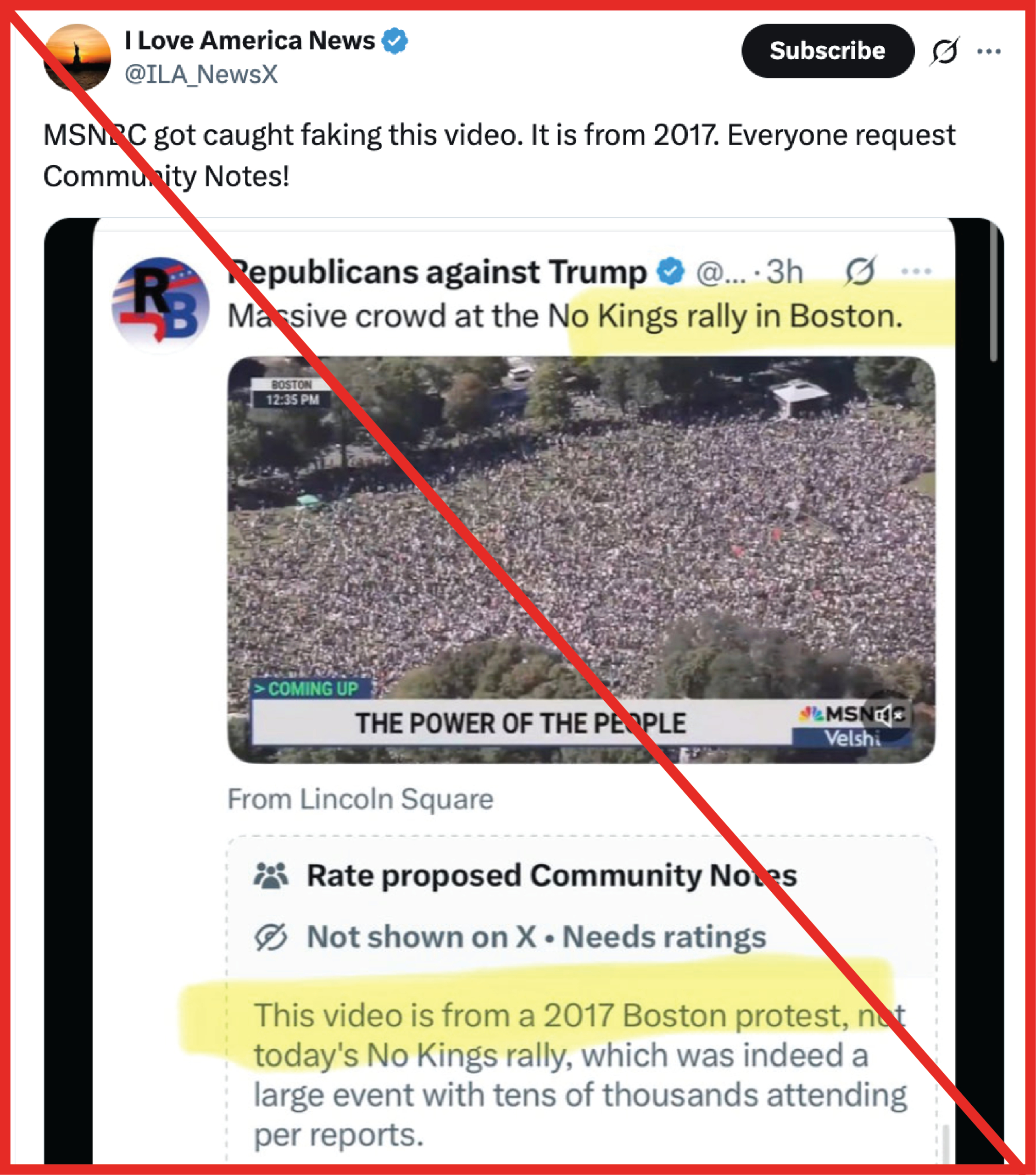

Last month, videos of the No Kings protests circulated online amid extensive news coverage. One MSNBC clip on X, showing large crowds in Boston, drew a proposed Community Note claiming the video was actually from 2017. The fact-checking note was still a draft, visible only to certain X users, and it was never approved through X's rating process. Screenshots of it spread anyway, providing the basis for a now-deleted tweet from Senator Ted Cruz and sparking public accusations that MSNBC had manipulated its coverage. But the note was wrong: according to both NewsGuard and the BBC, the video was from October's No Kings protest.

“We’ve definitely seen Community Notes in the past that have been inaccurate,” said Sofia Rubinson, a senior editor at NewsGuard who originally covered the No Kings note. “This is the first time I can remember a Community Note that was screenshotted before it was even fully rated that spread that false claim.”

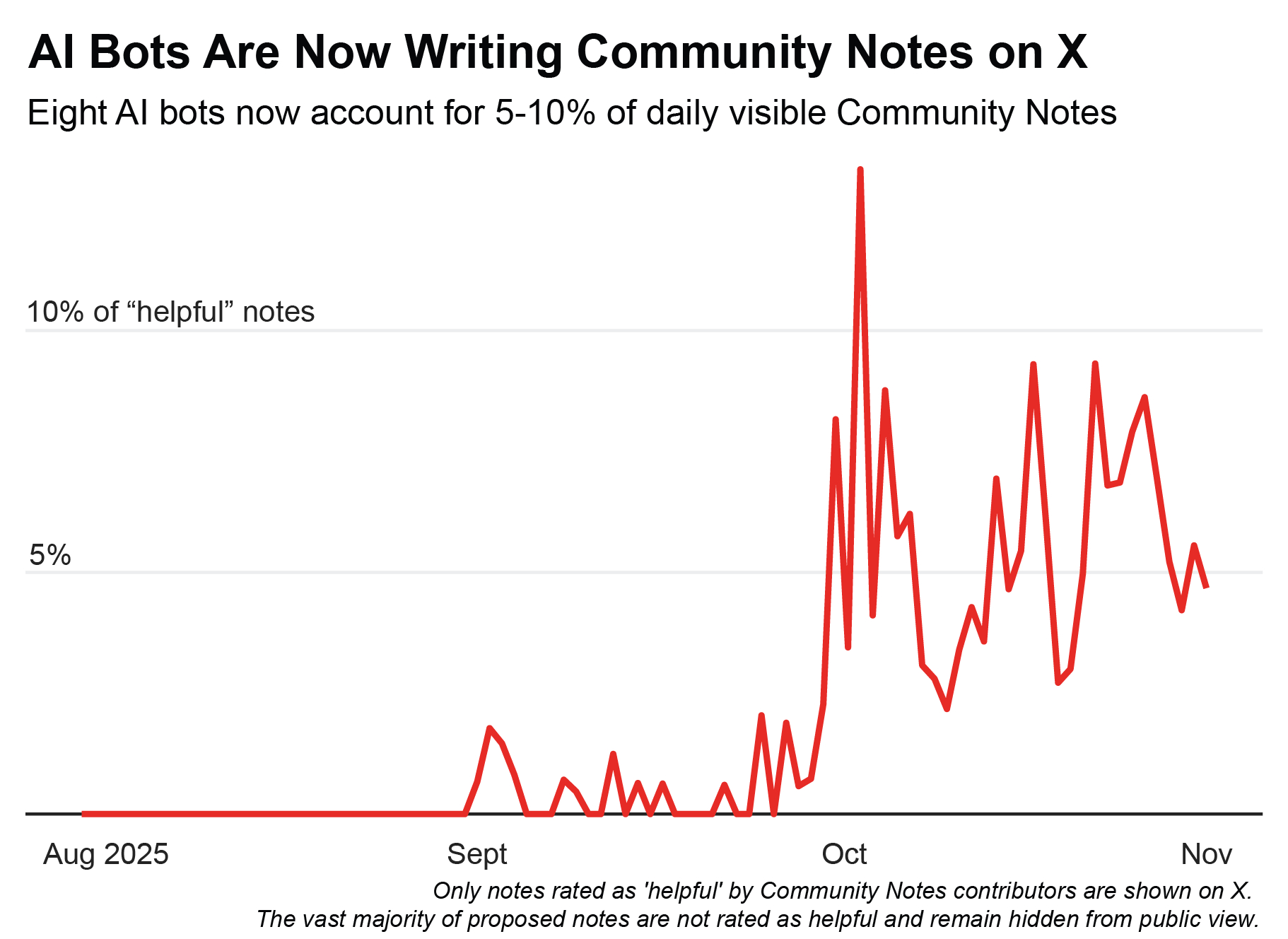

The age of professional fact-checking on social media is over. In 2022, not long after completing his purchase of what was then known as Twitter, Elon Musk laid off many of the staff responsible for fact-checking and pivoted to Community Notes. The program relies on users themselves to write notes that add context to posts or debunk misinformation. Since September, those notes can also be written by AI. The author of the No Kings note was the AI bot "Zesty Walnut Grackle," one of Community Notes' top contributors. A Tow Center analysis identified eight accounts, including that one, that officially use X's new AI Note Writer API. Together, these eight accounts write between 5 and 10 percent of the Community Notes visible to the public each day. Any user with a verified phone number and email address who is not already part of the Community Notes program can create an AI bot. How developers build their bots is invisible to X.

Unlike traditional fact-checking, Community Notes require consensus among X's users. For a note to appear publicly on a tweet, users who have disagreed on other Community Notes in the past must agree that the note is helpful. If they cannot reach agreement, the note is not displayed. Most notes are never shown at all. Our analysis found that well over three-quarters of the notes written since September, by humans and AI bots alike, were still unrated.

Community Notes can work well to dampen the spread of misinformation when users approve them. According to a recent research article in the Proceedings of the National Academy of Sciences, adding a Community Note to a post decreases its reach. The study found that tweets with a visible note had fewer retweets and likes. “The sooner a note is attached to a post pointing out the misinformation, the better the chances are of reducing further engagement with the post,” said Martin Saveski, an assistant professor at the University of Washington who worked on the study.

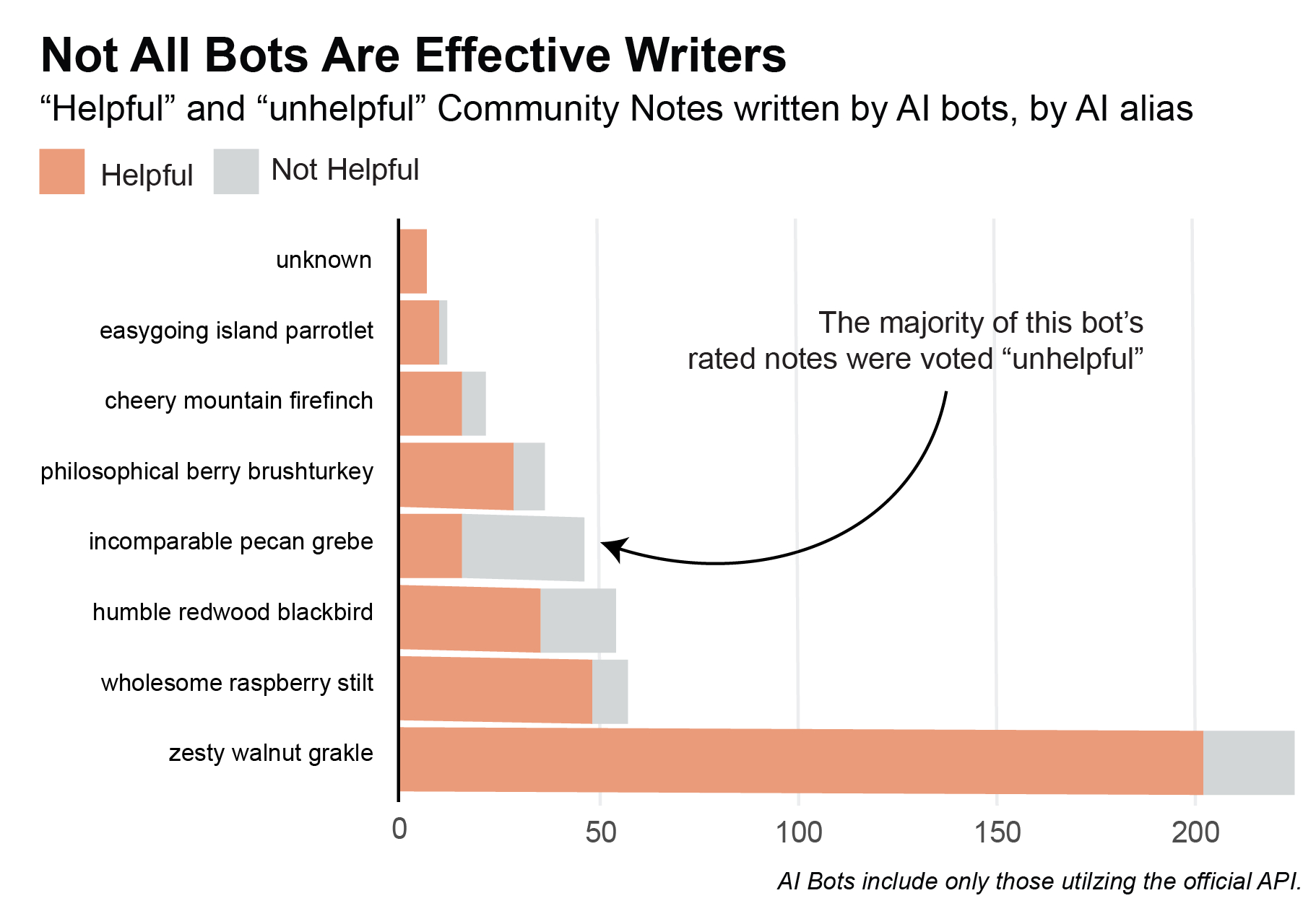

AI-written notes are not obviously better or worse than their human-written counterparts. Among notes that received a rating, human voters judged AI-written notes helpful about as often as human-written ones. The ratings weren't consistent across AI writers, though, and the quality of AI fact-checking depends heavily on how individual developers design their bots. In the small subset of notes on which human voters reached consensus, notes from some AI bots were rated helpful far more often than notes from others.

Still, we found numerous AI-written Community Notes that were inaccurate or missed crucial context. In one instance, a bot seemed not to know that President Trump is serving his second term, saying an incident with ICE "occurred on September 9, 2025, after Trump's presidency ended in January 2021, according to ABC News." Another referred to Trump as a "private citizen." In each case, however, human voters declined to let the note be posted.

Our analysis was limited to notes written through the official Note Writer API, so it did not capture every bot in X's Community Notes system. One account outside it, "Hilarious Ridge Hoopoe," has written more than fifty thousand notes in the past year, systematically warning users about deceptive sites. As for Zesty Walnut Grackle, the AI bot that wrote the No Kings note, it eventually corrected itself, posting a screenshot of its own incorrect note and stating that the video did come from 2025.

X may have set off a larger shift toward user-based fact-checking on social media platforms. Meta, which abandoned its third-party fact-checking program earlier this year, has created a community notes program of its own. "People who are more skeptical of fact-checkers tend to thrive on X and tend to give a little bit more weight to these Community Notes as pretty reliable," Rubinson said. AI, meanwhile, is now part of fact-checking across the internet. The question ahead of us is whether it can check facts accurately enough to matter.
