
This article appeared in the Tow Center for Digital Journalism’s newsletter.

In recent years, a journalistic development known as “automated journalism” or “automated news” has stirred up a lot of interest within newsrooms, with major organizations like the Associated Press, The Washington Post and the BBC adopting this technology.

Automated news is generally understood as the automatic generation of journalistic text by software and algorithms, without any human intervention beyond the initial programming. Such stories are currently powered by a basic application of Natural Language Generation (NLG), a computational technique in which algorithms fetch information from external or internal datasets to fill in the blanks in pre-written content.

In that sense, their design partly resembles the word game Mad Libs: programmers and journalists need to design story “templates” into which data can later be inserted.
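To make the Mad Libs analogy concrete, here is a minimal Python sketch of template filling built on the standard library’s `string.Template`. It is an illustration only, not Arria Studio’s template format or the BBC’s code; the template wording and the field names (`winner`, `seat`, `votes`, `majority`, `runner_up`) are invented for the example.

```python
from string import Template

# Hypothetical story template: the prose is written in advance by journalists,
# and each $placeholder is filled from a dataset when the story is generated.
STORY_TEMPLATE = Template(
    "$winner won the $seat seat with $votes votes, "
    "a majority of $majority over $runner_up."
)


def fill_story(record: dict) -> str:
    """Insert one data record into the pre-written template.

    Template.substitute raises KeyError if any placeholder has no value,
    so an incomplete record cannot silently produce a story with a gap.
    """
    return STORY_TEMPLATE.substitute(record)


example = {
    "winner": "Candidate A",
    "seat": "Exampleshire North",
    "votes": "21,400",
    "majority": "3,150",
    "runner_up": "Candidate B",
}
print(fill_story(example))
```

Using `substitute` rather than `safe_substitute` is a deliberate choice in this sketch: a missing data field raises an error instead of leaving a raw placeholder in a published story.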

Some media organizations outsource the production of automated news to external content providers, while others build it in-house. The BBC opted for a third way, using an online platform, Arria Studio, which lets journalists design their own templates for automated news. The platform takes a no-code approach, which makes it accessible to editorial staff with little or no computing background, even if more complex programming still needs to be done outside the tool.

In a recent report for BBC R&D, I followed five of the Corporation’s experiments with automated news that were conducted under the lead of BBC News Labs, the broadcaster’s own incubator in charge of testing out new technologies for media production. These experiments were conducted over the course of just one year, with each iteration gradually becoming more elaborate.

From health stories to general elections

The first automated news project at the BBC began as a feasibility check at the beginning of 2019. Using Arria Studio, the News Labs team prepared narratives that could connect to the data already gathered to run the BBC’s health performance tracker. In this way, they turned these data into more than 100 automated stories every month, informing readers about A&E waiting times at trusts in East Anglia.
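As a rough illustration of how a single template can yield one story per trust per month, here is a Python sketch. The article does not describe the tracker’s data fields, so the fields below are assumptions, as are the trust names and figures; the 95% four-hour standard is the NHS England A&E target, used here as an assumed benchmark.

```python
from dataclasses import dataclass
from typing import List

# Assumed benchmark: the NHS England four-hour A&E standard, under which 95%
# of patients should be admitted, transferred or discharged within four hours.
FOUR_HOUR_TARGET = 95.0


@dataclass
class TrustMonth:
    trust: str              # hypothetical trust name
    month: str              # e.g. "January 2019"
    attendances: int        # total A&E attendances that month (assumed field)
    within_four_hours: int  # attendances dealt with within four hours (assumed field)


def describe(row: TrustMonth) -> str:
    """Generate one short story paragraph for a single trust and month."""
    share = 100 * row.within_four_hours / row.attendances
    verdict = "met" if share >= FOUR_HOUR_TARGET else "missed"
    return (
        f"{row.trust} {verdict} the four-hour A&E target in {row.month}, "
        f"seeing {share:.1f}% of {row.attendances:,} patients within four hours "
        f"against a target of {FOUR_HOUR_TARGET:.0f}%."
    )


def generate_all(rows: List[TrustMonth]) -> List[str]:
    """One story per row: the same template scales to every trust and month."""
    return [describe(row) for row in rows]


sample = [
    TrustMonth("Example Hospital NHS Trust", "January 2019", 10_000, 8_900),
    TrustMonth("Another Example NHS Trust", "January 2019", 4_000, 3_820),
]
for story in generate_all(sample):
    print(story)
```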

They also arranged for automated visualizations to be created and displayed alongside each of these automated stories.

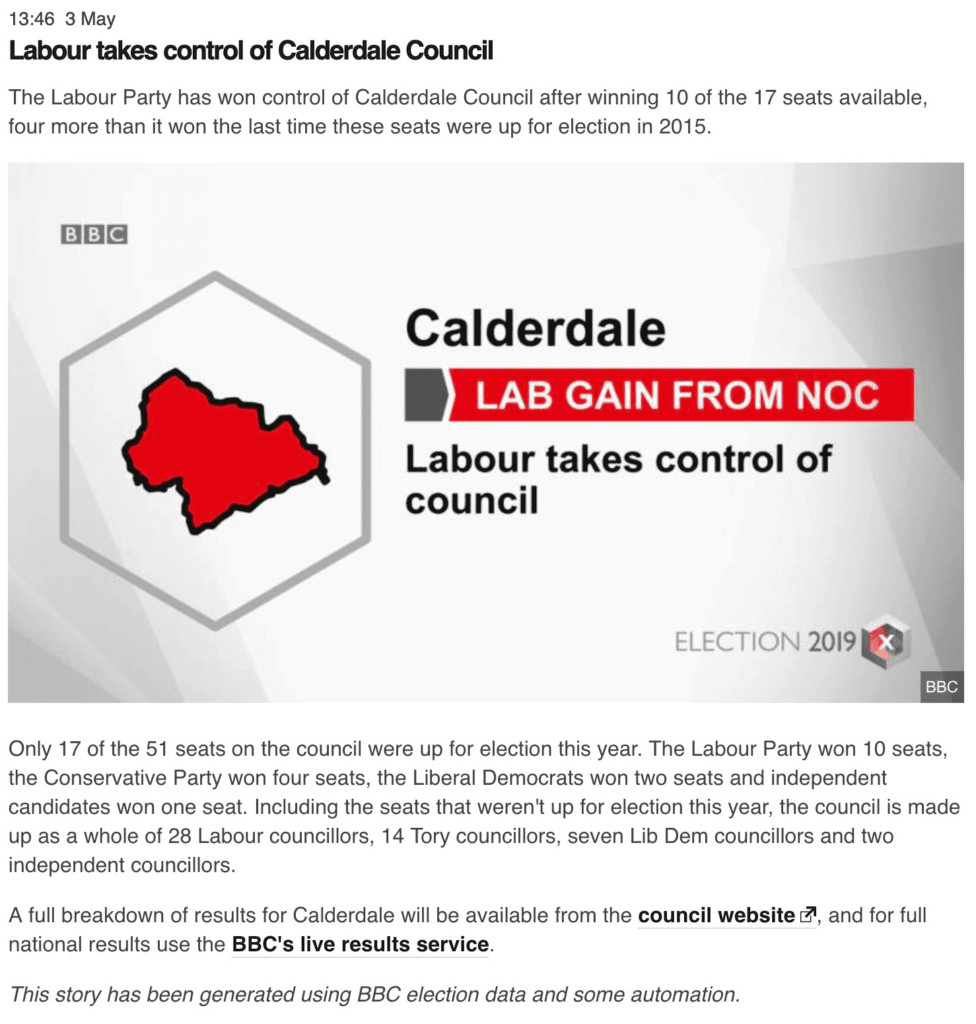

The second project, an iteration of this initial version, took place shortly afterwards, during the United Kingdom’s local elections of May 2019. The team generated a sample of 16 stories to see if the infrastructure they built as part of the A&E waiting times project could, this time, be linked to the BBC’s election results feed, which is manually filled by journalists on the ground.

The team ran out of time to automate visualizations like those used in the A&E waiting time stories, but they demonstrated that the system could indeed be used for election results.

Above: A story generated for Calderdale during the United Kingdom’s local elections of May 2019. (Source: BBC News Labs)

The third experiment conducted by BBC News Labs used automated news to produce more than 300 localized stories on the rate of tree planting in England, generated to complement a larger-scale data journalism piece published on 30 July 2019.

All the localized stories included a link to the main data journalism piece, which provided a nationwide picture showing that twice as many trees needed to be planted in the United Kingdom in order to meet the country’s carbon emission targets.

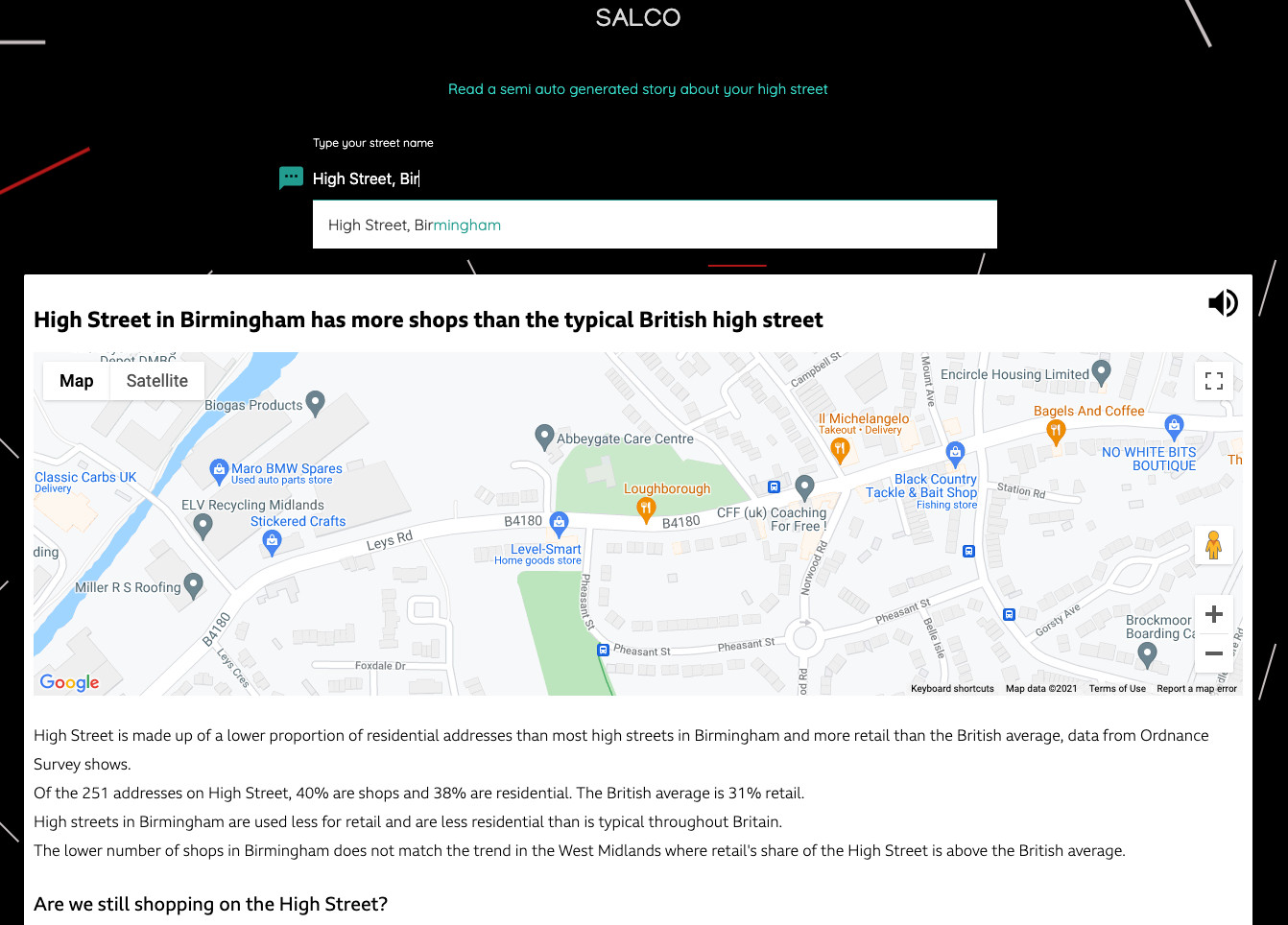

Soon after that, automated news was used for the fourth time, in a lookup tool launched in October 2019 that gave access to nearly 7,000 hyperlocal stories trying to capture the extent to which people were still shopping on British high streets, given the popularity of online sales.

To do this, a News Labs journalist with a computing background combined a geolocated dataset from the Ordnance Survey, which contained retail information about every high street in Great Britain, with employment figures from the Office for National Statistics. The journalist wrote Python scripts to compare each street’s figures with economic data at the regional and national levels.
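The article does not publish the journalist’s scripts, so the following is only a hypothetical Python sketch of the kind of comparison described: joining street-level figures with regional figures and checking each street against regional and national averages. The field names, the “retail share” measure and all values are invented stand-ins for the Ordnance Survey and ONS data.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List

# Hypothetical stand-in for the Ordnance Survey data: one row per high street,
# with its region, its number of retail units and its total number of units.
STREETS = [
    {"street": "High Street A", "region": "Region 1", "retail_units": 60, "total_units": 100},
    {"street": "High Street B", "region": "Region 1", "retail_units": 40, "total_units": 100},
    {"street": "High Street C", "region": "Region 2", "retail_units": 30, "total_units": 60},
]

# Hypothetical stand-in for the ONS data: percentage of jobs in each region
# that are in retail.
RETAIL_EMPLOYMENT_SHARE = {"Region 1": 9.5, "Region 2": 8.0}


def retail_share(row: dict) -> float:
    """Percentage of a street's units that are retail units."""
    return 100 * row["retail_units"] / row["total_units"]


def compare(streets: List[dict], employment: Dict[str, float]) -> List[dict]:
    """Compare each street with its regional average and the national average."""
    by_region = defaultdict(list)
    for row in streets:
        by_region[row["region"]].append(retail_share(row))
    regional_avg = {region: mean(shares) for region, shares in by_region.items()}
    national_avg = mean(retail_share(row) for row in streets)

    results = []
    for row in streets:
        share = retail_share(row)
        region = row["region"]
        results.append({
            "street": row["street"],
            "retail_share": share,
            "vs_region": "above" if share > regional_avg[region] else "at or below",
            "vs_nation": "above" if share > national_avg else "at or below",
            # Join on region to attach the regional employment figure.
            "regional_retail_employment": employment.get(region),
        })
    return results


for result in compare(STREETS, RETAIL_EMPLOYMENT_SHARE):
    print(result)
```

The averages here are unweighted means of per-street shares; a real analysis might weight streets by their number of units instead, a methodological choice the article does not specify.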

Above: The lookup tool developed by BBC News Labs, which gave access to close to 7,000 hyperlocal stories trying to capture the extent to which people were still shopping on British high streets, for instance here in Birmingham. (Source: BBC News Labs)

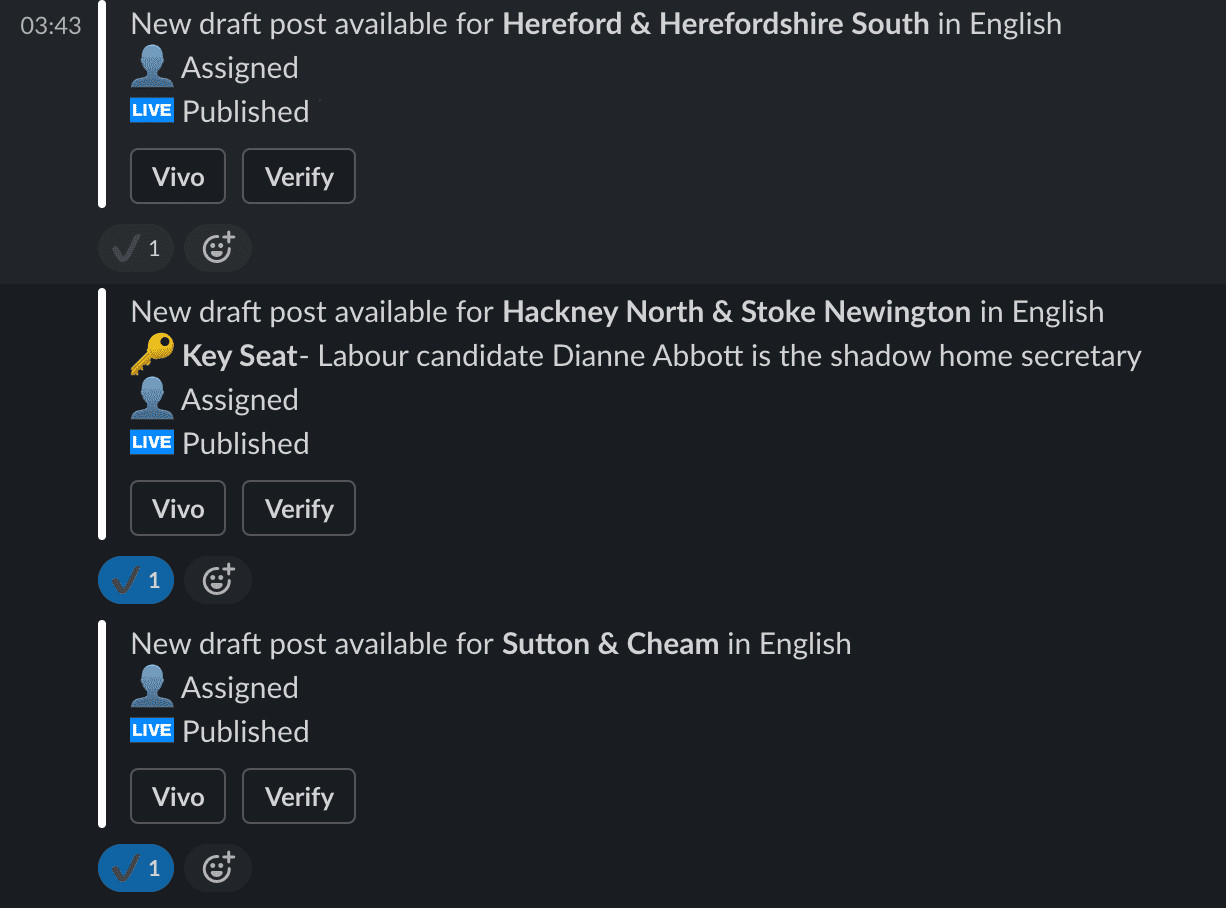

The BBC’s fifth and final experiment with automated news, and by far the most ambitious, was the generation of close to 700 stories, including 40 written in Welsh, to cover the December 2019 general election in the United Kingdom. The News Labs team built on the infrastructure they had developed for the local elections, but this time reporters were directly involved in the process: they were tasked with checking stories and adding any missing details before publication.

On the night of the election, each of these journalists worked with a continuous stream of automated Slack notifications, which informed them when new results were in and included a link to the corresponding automated story in the BBC’s content management system. From there, they could edit the story or publish it as it was.

The Slack notification also provided them with extra information on constituencies that required further attention, for instance those held by the Prime Minister, the Leader of the Opposition or the Speaker of the House of Commons.
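A notification of this kind could be assembled along the lines of the Python sketch below, which builds a payload for a Slack incoming webhook (a JSON object with a `text` field, using Slack’s `<url|label>` link syntax). The CMS address, story ID and watchlist entries are hypothetical, and this is not the BBC’s actual bot; the sketch only builds the payload, and sending it would mean POSTing that JSON to a webhook URL.

```python
import json

# Hypothetical watchlist of constituencies needing extra attention, with the
# note to show the reporter. Real entries would come from editorial research.
WATCHLIST = {
    "Exampletown East": "Seat held by a party leader",
    "Sampleford": "Sitting MP is standing as an independent",
}

# Hypothetical base URL for opening a draft story in the CMS.
CMS_BASE_URL = "https://cms.example.invalid/stories/"


def build_notification(constituency: str, story_id: str) -> dict:
    """Build a Slack incoming-webhook payload announcing a new result."""
    lines = [
        f"New result in for *{constituency}*.",
        f"<{CMS_BASE_URL}{story_id}|Open the automated story>",
    ]
    note = WATCHLIST.get(constituency)
    if note:
        # Flag constituencies that a journalist should look at more closely.
        lines.append(f":warning: Needs attention: {note}")
    return {"text": "\n".join(lines)}


payload = build_notification("Sampleford", "12345")
print(json.dumps(payload, indent=2))
```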

Above: An automated Slack notification informing BBC journalists that new results were in, as well as extra information on constituencies that required further attention, in this instance that of the Shadow Home Secretary. (Source: BBC News Labs)

Structured journalism for baseline scenarios

When working with predictable scenarios, the BBC resorted to a form of “structured journalism,” which is best understood as the process of “atomizing the news” so that narratives can be turned into databases.

Structured journalism generally comes together using a “top-down” approach, first by envisioning what the ideal story would look like, and then breaking it down into smaller elements that can be reused across multiple versions of the same story. “If you didn’t have any automation involved, what would be the story you, as a human being, would want to write, or what would be the elements of the story that you would want to write?” said an editor at BBC News Labs. “Having established that, we then looked at what data we could get to fill that.”

To illustrate this process, a senior journalist with News Labs described how, in anticipation of the 2019 general election in the United Kingdom, the team searched for every element that could be thought of in advance: “You don’t know in advance who is going to win the national general election, you don’t know who is going to win each seat, but you know all the possibilities in advance,” he said.

These could include, for instance, who might win the race, how the margin of victory compared with the previous election, the possibility of a dead heat, whether the result was a gain or a loss for each party, and how the other candidates ranked, as well as whether any of them would lose their deposits.
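The possibilities listed above map naturally onto code that decides which pre-written elements apply once a result arrives. The Python sketch below is an assumption about how such logic might look, not the BBC’s implementation, and the candidate data are invented. The one rule taken from outside the article is the UK deposit rule: a parliamentary candidate who receives less than 5% of the valid votes loses their deposit.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    name: str
    party: str
    votes: int


def result_elements(candidates: List[Candidate], previous_party: str,
                    previous_majority: int) -> dict:
    """Decide which pre-planned story elements apply to one seat's result."""
    ranked = sorted(candidates, key=lambda c: c.votes, reverse=True)
    total = sum(c.votes for c in ranked)
    winner, runner_up = ranked[0], ranked[1]
    majority = winner.votes - runner_up.votes

    elements = {"ranking": [c.name for c in ranked], "majority": majority}
    if majority == 0:
        # Dead heat: the votes alone do not produce a winner.
        elements["outcome"] = "dead heat"
    elif winner.party == previous_party:
        elements["outcome"] = f"{winner.party} hold"
        # Compare this majority with the one at the previous election.
        if majority > previous_majority:
            elements["majority_change"] = "increased"
        elif majority < previous_majority:
            elements["majority_change"] = "reduced"
        else:
            elements["majority_change"] = "unchanged"
    else:
        elements["outcome"] = f"{winner.party} gain from {previous_party}"

    # Lost deposit: under 5% of valid votes, i.e. votes * 20 < total
    # (integer arithmetic avoids floating-point edge cases at exactly 5%).
    elements["lost_deposit"] = [c.name for c in ranked if c.votes * 20 < total]
    return elements


SAMPLE = [
    Candidate("A", "Party X", 20_000),
    Candidate("B", "Party Y", 15_000),
    Candidate("C", "Party Z", 4_000),
    Candidate("D", "Party W", 1_000),
]
print(result_elements(SAMPLE, previous_party="Party X", previous_majority=3_000))
```

Each key in the returned dictionary corresponds to one “atom” of the story, so a template can include or skip a sentence depending on whether that key is present.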

Human-in-the-loop for edge cases

Alongside a “structured journalism” approach for baseline scenarios, the BBC also relied on a human-in-the-loop setup when dealing with edge cases. This was most notably the case for the 2019 United Kingdom general election, which involved many peculiarities unique to the political context at the time.

A journalist with News Labs explained that the election had been called primarily because of Brexit. This, he said, resulted in several departures from the norm: some constituencies that had been Labour for decades were likely to turn Conservative, some candidates had changed party or seat or left their party to run as independents, and some cases involved ongoing legal proceedings.

To identify all these “high risk” cases, the News Labs team collaborated closely with the BBC’s Political Research Unit, which produces briefs and analyses for the news service. “We basically talked through all of these edge cases, which for this election there were like… dozens,” recalled the News Labs journalist.
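One way to put such a list of “high risk” seats to work is to record the research findings as structured data and flag any seat where an edge case applies, so that its story gets closer human attention. The Python sketch below assumes hypothetical fields (`held_by_same_party_since`, `expected_to_change_hands`, `changed_party` and so on) and a hypothetical cut-off year; it illustrates the human-in-the-loop idea rather than the BBC’s actual system.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical cut-off: a seat held by one party since this year or earlier
# counts as "held for decades".
LONG_HELD_CUTOFF = 1990


@dataclass
class SeatBrief:
    """Hypothetical pre-election research notes for one constituency."""
    constituency: str
    held_by_same_party_since: Optional[int] = None
    expected_to_change_hands: bool = False
    changed_party: List[str] = field(default_factory=list)
    changed_seat: List[str] = field(default_factory=list)
    now_independent: List[str] = field(default_factory=list)
    legal_proceedings: bool = False


def edge_case_reasons(brief: SeatBrief) -> List[str]:
    """List every reason this seat should not rely on the standard template alone."""
    reasons = []
    since = brief.held_by_same_party_since
    if brief.expected_to_change_hands and since is not None and since <= LONG_HELD_CUTOFF:
        reasons.append(f"seat held by the same party since {since} may change hands")
    reasons += [f"{name} has changed party" for name in brief.changed_party]
    reasons += [f"{name} is standing in a different seat" for name in brief.changed_seat]
    reasons += [f"{name} left their party to stand as an independent"
                for name in brief.now_independent]
    if brief.legal_proceedings:
        reasons.append("legal proceedings under way")
    return reasons


def needs_extra_attention(brief: SeatBrief) -> bool:
    """Flag the seat for closer human checking if any edge case applies."""
    return bool(edge_case_reasons(brief))


brief = SeatBrief("Exampleton", held_by_same_party_since=1935,
                  expected_to_change_hands=True, now_independent=["Candidate C"])
print(needs_extra_attention(brief), edge_case_reasons(brief))
```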

Above: An automated story generated for the Vauxhall constituency during the 2019 UK general election. It includes details that could be identified in advance, such as the winning candidate’s margin of victory and how it compares with the previous election, voter turnout, and which candidates lost their deposits. (Source: BBC News)

Embedding journalistic norms in code

Automated news at the BBC also involved setting up algorithmic rules, in an attempt to encode editorial standards, journalistic codes of conduct and linguistic conventions in programmatic form.

When it comes to embedding editorial standards, for example, a product manager at BBC News Labs noted that the most challenging part was articulating the precise specifics of these rules. Whereas these standards are generally well described in journalistic style guides and related guidelines, encoding them in a programmatic format requires very detailed instructions.

To work through this process ahead of the 2019 United Kingdom general election, the team at News Labs met with experts at the BBC’s Political Research Unit to agree on the rules governing the use of specific language.

Although the actual implementation of automated news on the night of the election went without any noticeable hitches, it highlighted areas where there was room to improve the integration of editorial rules into a programmatic form.

To give one example, a junior software engineer with a journalism background, who helped publish these stories on the night, said she felt the need to correct some headlines that mentioned only a win with more than 50% of the vote when the winning party had in fact secured more than 75%. She mentioned being aware of “how complicated election coverage is” and “how frequently the BBC is accused of bias,” which made her wary of “an under-reporting of the margin of victory to be… taken as bias.”
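The headline problem described above can be framed as a rule that picks the highest vote-share threshold a result clears, rather than the first one that happens to be true. The Python sketch below encodes such a rule; the thresholds, wording and function names are hypothetical and are not the BBC’s style guidance. `Fraction` is used so that a result of exactly 75% is compared exactly rather than through floating-point arithmetic.

```python
from fractions import Fraction
from typing import Optional

# Hypothetical style rule: describe the winning share using the HIGHEST
# threshold it clears, checked from highest to lowest, so that a 78% win
# is not reported merely as "more than half of the vote".
SHARE_PHRASES = [
    (Fraction(3, 4), "more than three-quarters of the vote"),
    (Fraction(1, 2), "more than half of the vote"),
]


def share_phrase(winner_votes: int, valid_votes: int) -> Optional[str]:
    """Return the strongest accurate description of the winner's vote share."""
    share = Fraction(winner_votes, valid_votes)  # exact, no float rounding
    for threshold, phrase in SHARE_PHRASES:
        if share > threshold:
            return phrase
    return None  # no overall majority: leave the share out


def headline(party: str, seat: str, winner_votes: int, valid_votes: int) -> str:
    """Build a headline, mentioning the vote share only when a phrase applies."""
    phrase = share_phrase(winner_votes, valid_votes)
    if phrase is None:
        return f"{party} wins {seat}"
    return f"{party} wins {seat} with {phrase}"


print(headline("Party X", "Exampleton", 7_800, 10_000))
```

Ordering the thresholds from highest to lowest is the design choice that matters: the loop returns the first match, so the list order decides which true statement ends up in the headline.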

Future directions

My work with the BBC highlighted the need to reflect on new guidance for journalism practice, which would take all of these computational aspects into account.

For instance, this could translate into a set of “computational journalism guidelines” that would take inspiration from the BBC’s Editorial Standards and Values, but also from the framework that is currently being developed as part of the BBC’s Machine Learning Engine Principles.

This is likely to be a back-and-forth process over the coming years, as journalists and programmers gradually run into issues at the intersection of computer programming and journalistic know-how, and sit down together to decide which rules to apply to them.

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 765140, which BBC R&D is part of.

References:

Anderson, C. W. (2018). Apostles of Certainty: Data Journalism and the Politics of Doubt. Oxford, UK: Oxford University Press.

Carlson, M. (2015). The Robotic Reporter. Digital Journalism, 3, 416–431. doi: 10.1080/21670811.2014.976412.

Caswell, D. & Dörr, K. (2018). Automated Journalism 2.0: Event-driven narratives. Journalism Practice, 12, 477–496. doi: 10.1080/17512786.2017.1320773.

Diakopoulos, N. (2019). Automating the News: How Algorithms Are Rewriting the Media. Cambridge, MA: Harvard University Press.

Graefe, A. (2016). Guide to Automated Journalism (Research paper, Tow Center for Digital Journalism). Retrieved from https://academiccommons.columbia.edu/doi/10.7916/D80G3XDJ

Jones, R. & Jones, B. (2019). Atomising the News: The (In)Flexibility of Structured Journalism. Digital Journalism, 7, 1157–1179. doi: 10.1080/21670811.2019.1609372.
